Convolutional Operations

Padding

Why Padding is Needed

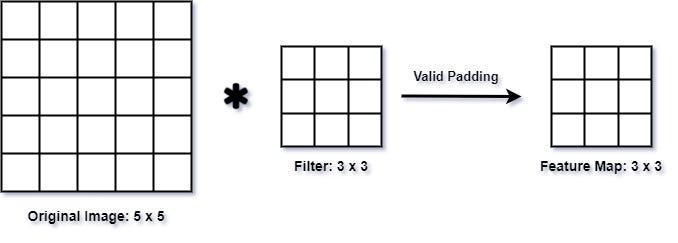

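When a convolution is applied without padding, the output is smaller than the input. In a deep network this shrinkage compounds, because every layer trims the spatial size again, so after a handful of layers little spatial extent is left. Pixels on the border are also covered by fewer filter positions than pixels in the center, so information near the edges is underused.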

Without Padding:

$$\text{Output size} = (n - f + 1) \times (n - f + 1)$$

Where:

- $n$: input size

- $f$: filter size
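To make the no-padding formula concrete, here is a minimal pure-Python sketch assuming a square, single-channel input stored as nested lists and a square filter. `conv2d_valid` is a hypothetical helper, not a library function; like deep-learning frameworks, it computes cross-correlation (the filter is not flipped). A 6 × 6 input with a 3 × 3 filter should give a 4 × 4 output, as 6 - 3 + 1 = 4 predicts.

```python
def conv2d_valid(image, kernel):
    """Slide a square kernel over a square image: no padding, stride 1.

    Computes cross-correlation (kernel not flipped), as CNN layers do.
    """
    n, f = len(image), len(kernel)
    out_size = n - f + 1  # the no-padding formula
    output = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            # Sum of element-wise products over the f x f window at (i, j)
            total = 0
            for di in range(f):
                for dj in range(f):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


image = [[r * 6 + c for c in range(6)] for r in range(6)]  # 6x6 ramp input
kernel = [[1, 0, -1] for _ in range(3)]  # illustrative 3x3 vertical-edge filter
result = conv2d_valid(image, kernel)
print(len(result), len(result[0]))  # 4 4
```

Every entry of `result` is -6 here: on this ramp image each kernel row contributes (left pixel) - (right pixel) = -2, and there are three rows.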

Example

In this image:

- $n$: input size (value shown in the figure)

- $f$: filter size (value shown in the figure)

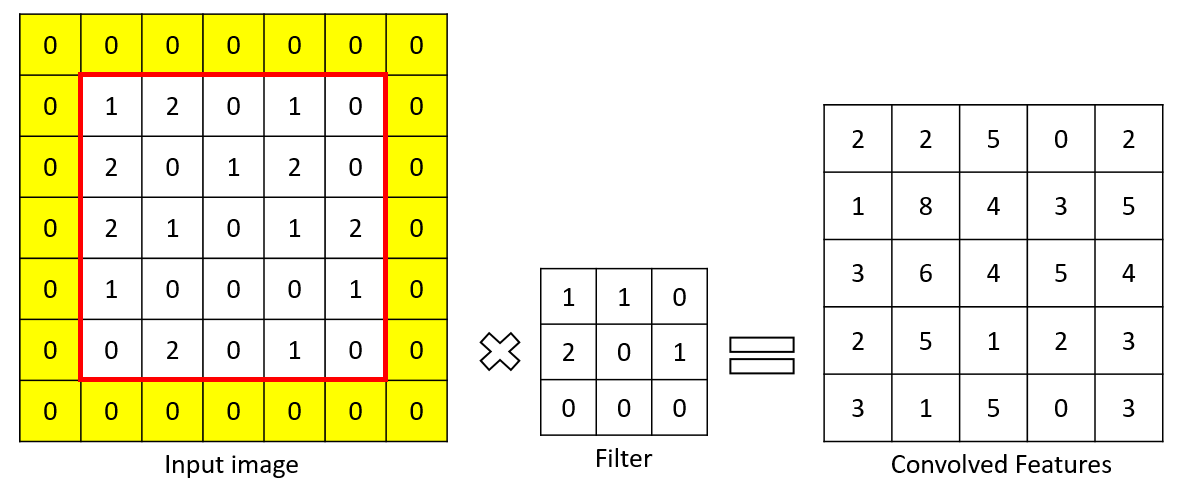

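With Padding ($p$):

$$\text{Output size} = (n + 2p - f + 1) \times (n + 2p - f + 1)$$

Where:

- $n$: input size

- $f$: filter size

- $p$: padding size (pixels added to each side of the input)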
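A sketch of zero padding, assuming a square nested-list image; `zero_pad` and `padded_output_size` are hypothetical helper names. Padding a 6 × 6 input with p = 1 gives an 8 × 8 array, and a 3 × 3 filter over it yields 6 + 2·1 - 3 + 1 = 6, so the output keeps the input's size.

```python
def zero_pad(image, p):
    """Surround a square 2D list with p rows/columns of zeros on every side."""
    n = len(image)
    width = n + 2 * p
    padded = [[0] * width for _ in range(p)]  # top border
    for row in image:
        padded.append([0] * p + list(row) + [0] * p)  # left/right borders
    padded.extend([0] * width for _ in range(p))  # bottom border
    return padded


def padded_output_size(n, f, p):
    # The padded input has side n + 2p, so the output side is n + 2p - f + 1
    return n + 2 * p - f + 1


image = [[1] * 6 for _ in range(6)]  # 6x6 input of ones
padded = zero_pad(image, p=1)
print(len(padded), len(padded[0]))  # 8 8
print(padded_output_size(6, 3, 1))  # 6
```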

Example

In this image:

- $n$: input size (value shown in the figure)

- $f$: filter size (value shown in the figure)

- $p$: padding size (value shown in the figure)

Types of Padding

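- Valid Padding (no padding, $p = 0$): the output shrinks to $(n - f + 1) \times (n - f + 1)$.

- Same Padding: the input is padded (usually with zeros) so that the output size equals the input size. With stride 1 this requires $p = \frac{f - 1}{2}$, which is one reason filter sizes are usually odd.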
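The difference between the two types shows up when layers are stacked. This sketch uses hypothetical helpers `same_padding` and `size_after_layers`, and assumes stride 1, square inputs, and odd filter sizes; it tracks the spatial size through five 3 × 3 layers on a 32 × 32 input.

```python
def same_padding(f):
    """Padding that keeps output size == input size (stride 1, odd f)."""
    if f % 2 == 0:
        raise ValueError("symmetric 'same' padding needs an odd filter size")
    return (f - 1) // 2


def size_after_layers(n, f, num_layers, padding):
    """Spatial size after each of num_layers stride-1 convolutions."""
    p = 0 if padding == "valid" else same_padding(f)
    sizes = [n]
    for _ in range(num_layers):
        if n + 2 * p < f:
            raise ValueError("input too small for another layer")
        n = n + 2 * p - f + 1
        sizes.append(n)
    return sizes


print(size_after_layers(32, 3, 5, "valid"))  # [32, 30, 28, 26, 24, 22]
print(size_after_layers(32, 3, 5, "same"))   # [32, 32, 32, 32, 32, 32]
```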

Real-World Analogy

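Imagine scanning a photo with a magnifying glass that must stay entirely on the photo: without padding, the lens can never be centered on the border pixels, so they are examined far less often than pixels in the middle. Padding extends the image so the lens can be centered on every pixel, bringing the attention border pixels receive much closer to that of interior pixels.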
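The border effect behind the analogy can be counted directly. This hypothetical `coverage_counts` helper (stride 1, square input assumed) records how many filter positions touch each pixel. For a 6 × 6 input and a 3 × 3 filter, a corner pixel is covered once without padding and 4 times with p = 1, while a central pixel is covered 9 times in both cases.

```python
def coverage_counts(n, f, p):
    """Count how many f x f filter positions (stride 1) cover each pixel
    of an n x n input padded by p on every side."""
    counts = [[0] * n for _ in range(n)]
    out = n + 2 * p - f + 1  # number of filter positions per axis
    for i in range(out):
        for j in range(out):
            # Window (i, j) covers padded rows i..i+f-1, which are original
            # rows i-p..i+f-1-p, clipped to the real (unpadded) image.
            for r in range(max(0, i - p), min(n, i + f - p)):
                for c in range(max(0, j - p), min(n, j + f - p)):
                    counts[r][c] += 1
    return counts


no_pad = coverage_counts(6, 3, 0)
with_pad = coverage_counts(6, 3, 1)
print(no_pad[0][0], no_pad[2][2])      # 1 9
print(with_pad[0][0], with_pad[2][2])  # 4 9
```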

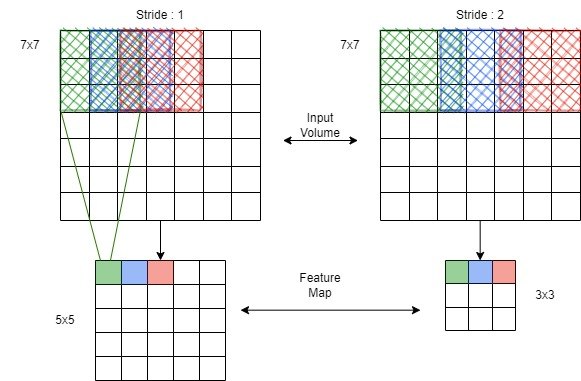

Strided Convolutions

What is Stride?

Stride is the number of pixels the filter moves at each step.

- Stride = 1: Normal convolution (moves 1 pixel at a time)

- Stride = 2: Downsampling (moves 2 pixels at a time, roughly halving the output's height and width)

Output Size Formula

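$$\text{Output size} = \left\lfloor \frac{n + 2p - f}{s} \right\rfloor + 1$$

The floor means that if a step would push the filter past the edge of the padded input, that position is skipped. The formula applies to each spatial dimension separately.

Where:

- $n$: input size

- $f$: filter size

- $s$: stride

- $p$: padding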
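The formula translates directly into integer arithmetic. In this sketch, `conv_output_size` is a hypothetical name and equal padding on both sides is assumed; Python's `//` is floor division, which matches the floor for the non-negative values used here.

```python
def conv_output_size(n, f, s=1, p=0):
    """floor((n + 2p - f) / s) + 1: output size along one spatial dimension."""
    if n + 2 * p < f:
        raise ValueError("filter is larger than the padded input")
    return (n + 2 * p - f) // s + 1


print(conv_output_size(7, 3, s=2, p=0))  # 3
print(conv_output_size(7, 3, s=2, p=1))  # 4
print(conv_output_size(6, 3))            # 4, same as the no-padding formula
print(conv_output_size(6, 3, s=2))       # 2: a window starting at column 4 would run off the edge
```

With `s=1` the expression reduces to n + 2p - f + 1, the padding formula, so a single helper covers all the cases above.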

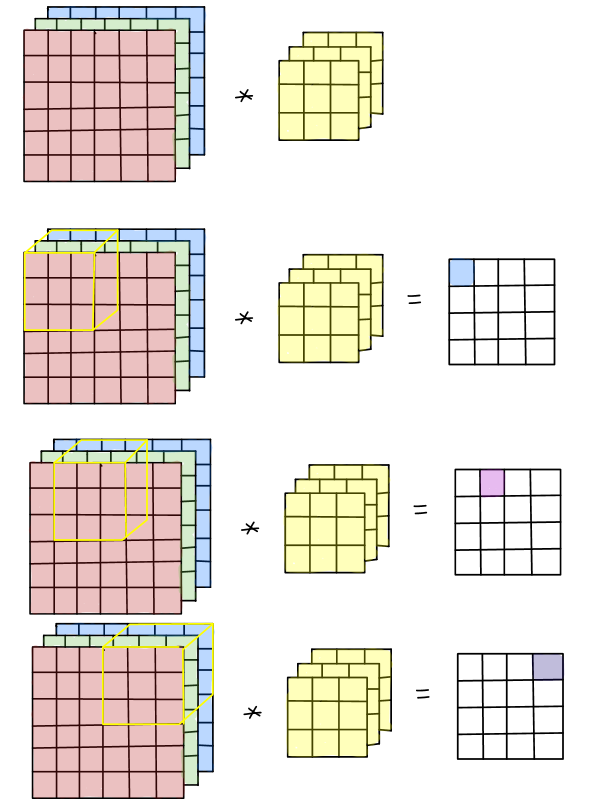

Visual Example

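If stride = 2, the filter jumps two pixels between positions, skipping every other position it would visit with stride 1. This roughly halves the spatial size of the output: a 7 × 7 input with a 3 × 3 filter and no padding gives $\lfloor (7 - 3)/2 \rfloor + 1 = 3$, i.e. a 3 × 3 output instead of 5 × 5.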
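A minimal single-channel strided convolution in pure Python: the window's top-left corner moves by `stride` pixels at each step. The helper name `conv2d`, square nested-list inputs, and the absence of padding are assumptions of this sketch.

```python
def conv2d(image, kernel, stride=1):
    """Cross-correlation of a square 2D list with a square kernel, no padding."""
    n, f = len(image), len(kernel)
    out = (n - f) // stride + 1  # floor((n - f) / s) + 1
    result = []
    for i in range(out):
        row = []
        for j in range(out):
            top, left = i * stride, j * stride  # window jumps `stride` pixels
            row.append(sum(
                image[top + di][left + dj] * kernel[di][dj]
                for di in range(f) for dj in range(f)
            ))
        result.append(row)
    return result


image = [[1] * 7 for _ in range(7)]   # 7x7 input of ones
kernel = [[1] * 3 for _ in range(3)]  # 3x3 box filter
print(len(conv2d(image, kernel, stride=1)))  # 5
print(conv2d(image, kernel, stride=2))       # [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
```

With stride 2 the windows start at columns 0, 2, and 4, so three positions fit per row instead of five.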

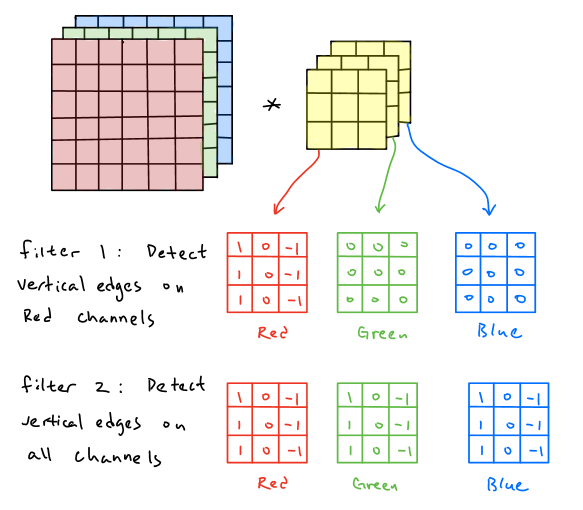

Convolutions Over Volume

From 2D to 3D

In RGB images, we have 3 channels: Red, Green, and Blue. Thus, a convolutional layer operates over 3D volumes.

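Input Dimensions: $n_H \times n_W \times n_C$

- $n_H$: Height

- $n_W$: Width

- $n_C$: Channels (e.g., 3 for RGB)

Filter Dimensions:

- Size: $f \times f \times n_C$ (each filter spans all input channels, so its depth must equal $n_C$)

- Number of filters: $n_C'$

Output Volume:

- Each filter produces one 2D activation map by summing over its window and all channels at every position. The $n_C'$ maps are stacked to form an output volume of shape $(n_H - f + 1) \times (n_W - f + 1) \times n_C'$ (valid padding, stride 1).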
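The shape bookkeeping for one layer can be sketched as below. `conv_volume_output_shape` is a hypothetical helper; shapes are assumed to be (height, width, channels) tuples, and the same padding is assumed on every side. It rejects filters whose depth does not match the input's channel count.

```python
def conv_volume_output_shape(input_shape, filter_shape, num_filters, stride=1, padding=0):
    """Output shape for an (n_H, n_W, n_C) input and num_filters filters of
    shape (f, f, n_C); the output depth equals the number of filters."""
    n_h, n_w, n_c = input_shape
    f_h, f_w, f_c = filter_shape
    if f_c != n_c:
        raise ValueError(f"filter depth {f_c} must equal input channels {n_c}")
    out_h = (n_h + 2 * padding - f_h) // stride + 1
    out_w = (n_w + 2 * padding - f_w) // stride + 1
    return (out_h, out_w, num_filters)


print(conv_volume_output_shape((32, 32, 3), (5, 5, 3), num_filters=16))             # (28, 28, 16)
print(conv_volume_output_shape((32, 32, 3), (5, 5, 3), num_filters=16, padding=2))  # (32, 32, 16)
```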

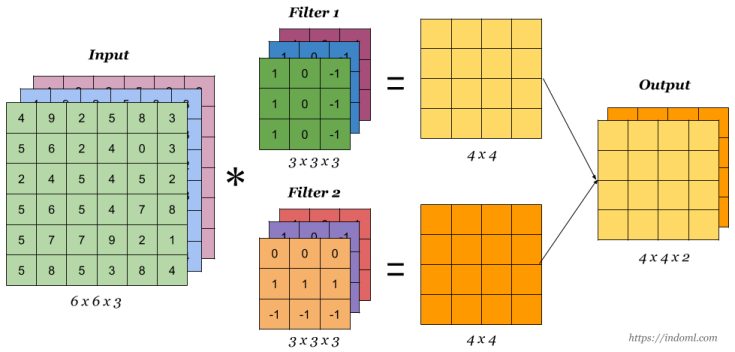

Practical Example

Let’s say you have a (6, 6, 3) image, and you apply 2 filters of size (3, 3, 3):

- Output shape: (4, 4, 2), assuming valid padding and stride 1: each spatial dimension is 6 - 3 + 1 = 4, and the 2 filters give 2 output channels.
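A minimal pure-Python sketch of convolution over a volume that reproduces this example's shape. Assumptions: the input is a nested list indexed as [row][col][channel], filters are (f, f, C) nested lists, padding is valid, stride is 1, and biases are omitted; `conv_volume` is a hypothetical helper name. Each output value sums over the f × f window and all C channels, which is why one filter yields one 2D map.

```python
def conv_volume(volume, filters):
    """Valid, stride-1 convolution of an (H, W, C) nested list with a list of
    (f, f, C) filters. Returns an (H - f + 1, W - f + 1, len(filters)) volume."""
    h, w, c = len(volume), len(volume[0]), len(volume[0][0])
    f = len(filters[0])
    out_h, out_w = h - f + 1, w - f + 1
    output = [[[0] * len(filters) for _ in range(out_w)] for _ in range(out_h)]
    for k, filt in enumerate(filters):
        for i in range(out_h):
            for j in range(out_w):
                # Sum over the f x f window AND all C channels -> one number
                output[i][j][k] = sum(
                    volume[i + di][j + dj][ch] * filt[di][dj][ch]
                    for di in range(f) for dj in range(f) for ch in range(c)
                )
    return output


image = [[[1, 2, 3] for _ in range(6)] for _ in range(6)]     # (6, 6, 3), same RGB everywhere
ones = [[[1, 1, 1] for _ in range(3)] for _ in range(3)]      # (3, 3, 3) filter
red_only = [[[1, 0, 0] for _ in range(3)] for _ in range(3)]  # (3, 3, 3) filter
out = conv_volume(image, [ones, red_only])
print(len(out), len(out[0]), len(out[0][0]))  # 4 4 2
print(out[0][0])                              # [54, 9]
```

With the constant pixel (1, 2, 3), the all-ones filter sums 9 × (1 + 2 + 3) = 54 and the red-only filter sums 9 × 1 = 9 at every position.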