Welcome to Machine Learning notes.

∴

I completed the Machine Learning Specialization course, taking detailed notes and summarizing key concepts for future reference.

Stanford University & DeepLearning.AI

— emreaslan —

Supervised and Unsupervised Machine Learning

Introduction

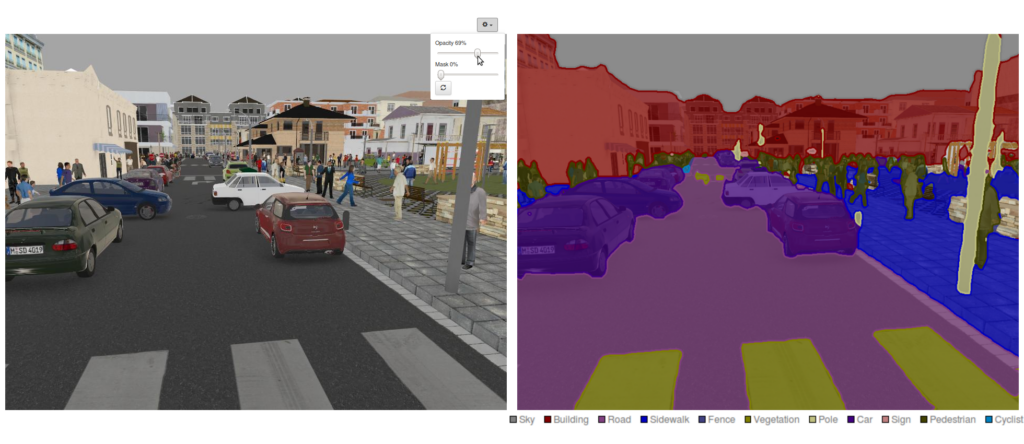

Machine learning is a branch of artificial intelligence that allows systems to learn and make predictions or decisions without explicit programming. Two main types of machine learning are Supervised Learning and Unsupervised Learning. Below is a summary of their characteristics and subfields, along with a visual representation.

```mermaid
graph TD
    A[Machine Learning] --> B[Supervised Learning]
    A --> C[Unsupervised Learning]
    B --> D[Regression]
    B --> E[Classification]
    C --> F[Clustering]
    C --> G[Association]
    C --> H[Dimensionality Reduction]
```

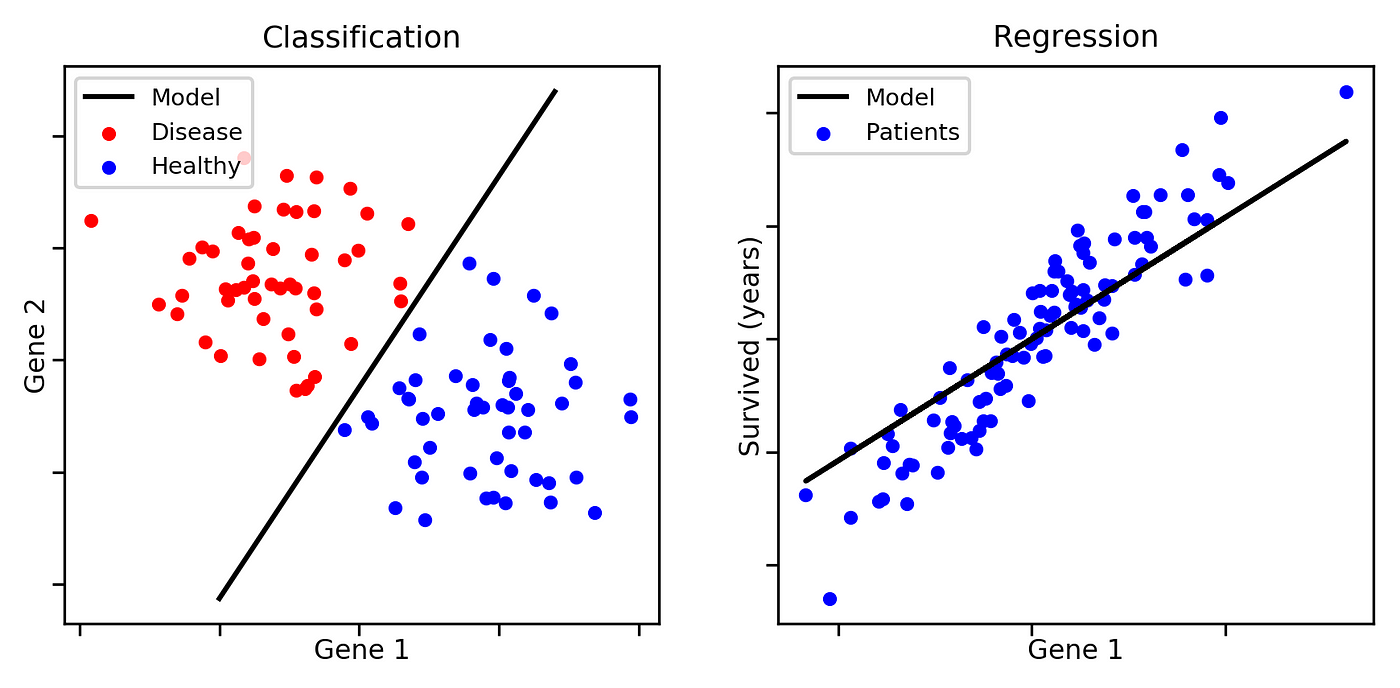

Supervised Learning

Supervised learning is a type of machine learning where the model is trained on labeled data. Labeled data means that each input has a corresponding output (or target) already provided. The goal is for the model to learn the relationship between the inputs and outputs so that it can make predictions for new, unseen data.

Key Characteristics

- Input and Output: The training data contains both input features (X) and target labels (Y).

- Goal: Predict the output (Y) for a given input (X).

Subfields

- Regression: Predicting continuous values (e.g., predicting rent prices based on apartment size).

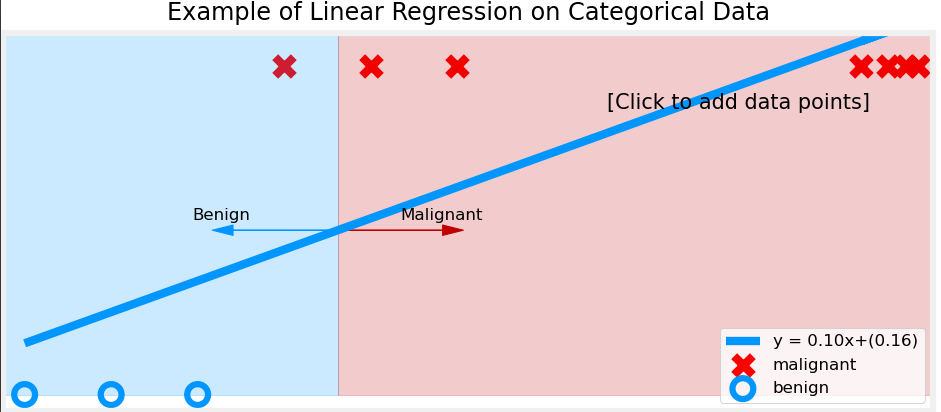

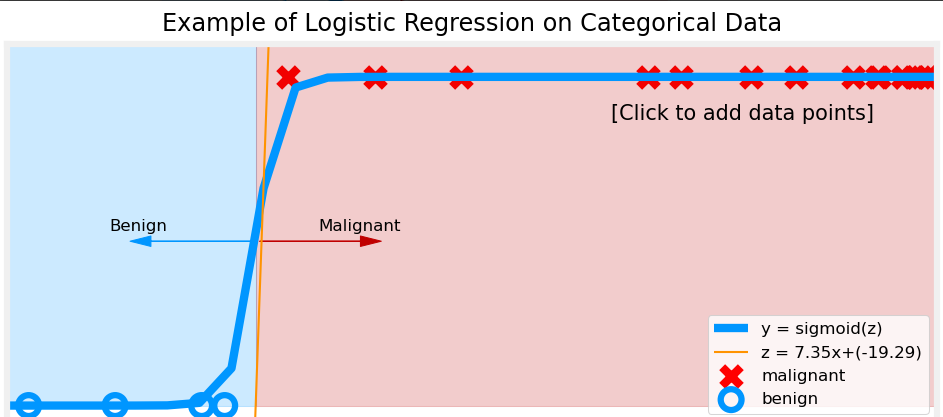

- Classification: Assigning inputs to discrete categories (e.g., diagnosing cancer as benign or malignant).

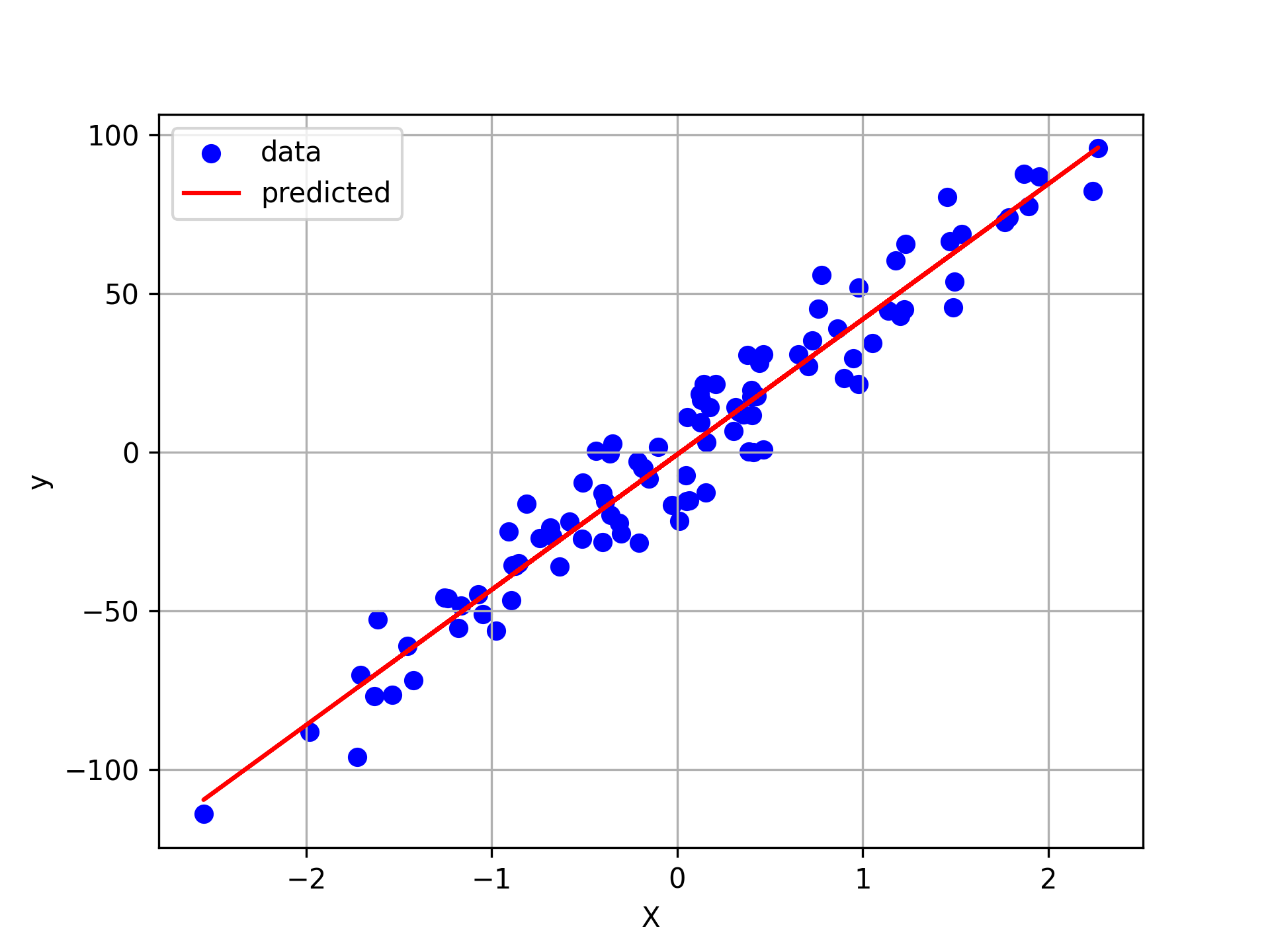

Example: Regression

- Scenario: Predicting rent prices based on apartment size (in m²).

- Details:

- Input features (X): Apartment size, number of rooms, neighborhood, etc.

- Target variable (Y): Rent price (e.g., $ per month).

- Model's Job: Learn the relationship between apartment features and rent prices, then predict the rent for a new apartment.

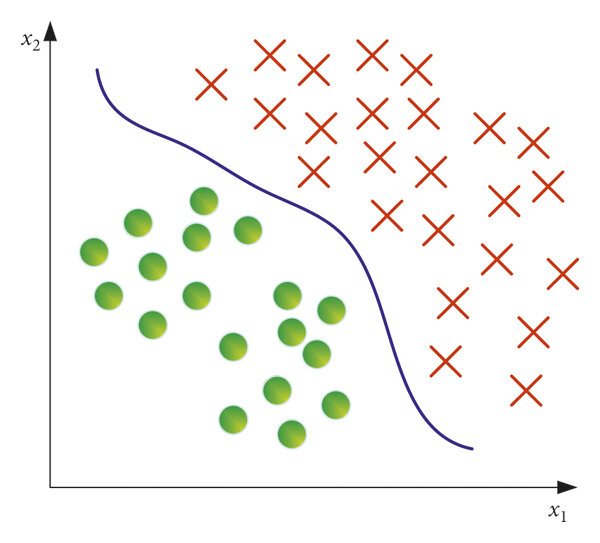

Example: Classification

- Scenario: Diagnosing cancer (e.g., benign or malignant tumor).

- Details:

- Input features (X): Measurements like tumor size, texture, cell shape, etc.

- Target variable (Y): Class label (e.g., "Benign" or "Malignant").

- Model's Job: Classify a new tumor as benign or malignant based on input features.

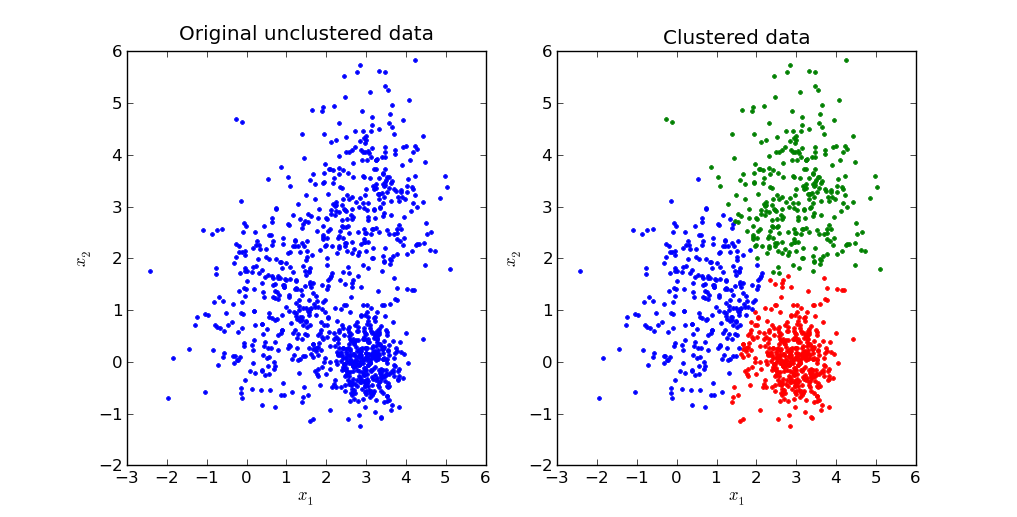

Unsupervised Learning

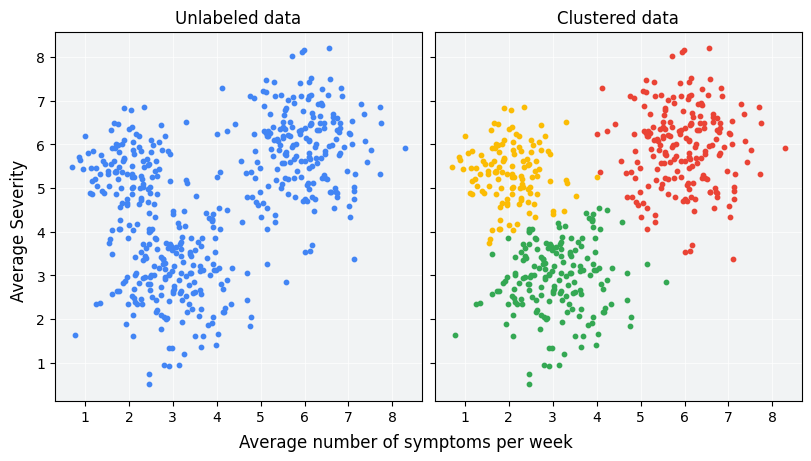

Unsupervised learning deals with unlabeled data. The model tries to find patterns, structures, or relationships within the data without any predefined labels or targets. It’s often used for exploratory data analysis.

Key Characteristics

- Input Only: The data contains only input features (X), with no target labels (Y).

- Goal: Discover hidden patterns or groupings in the data.

Subfields

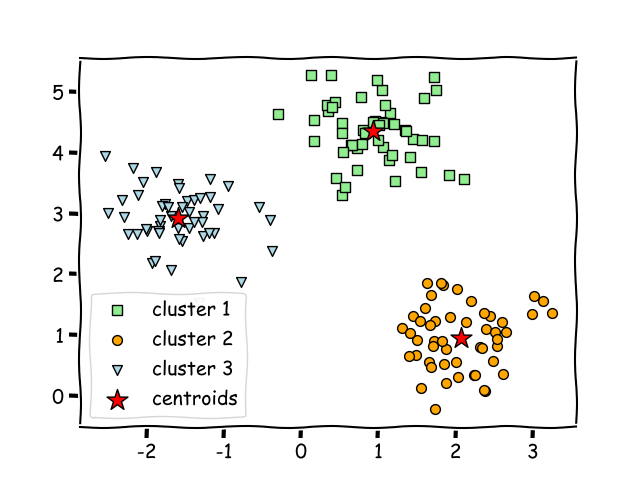

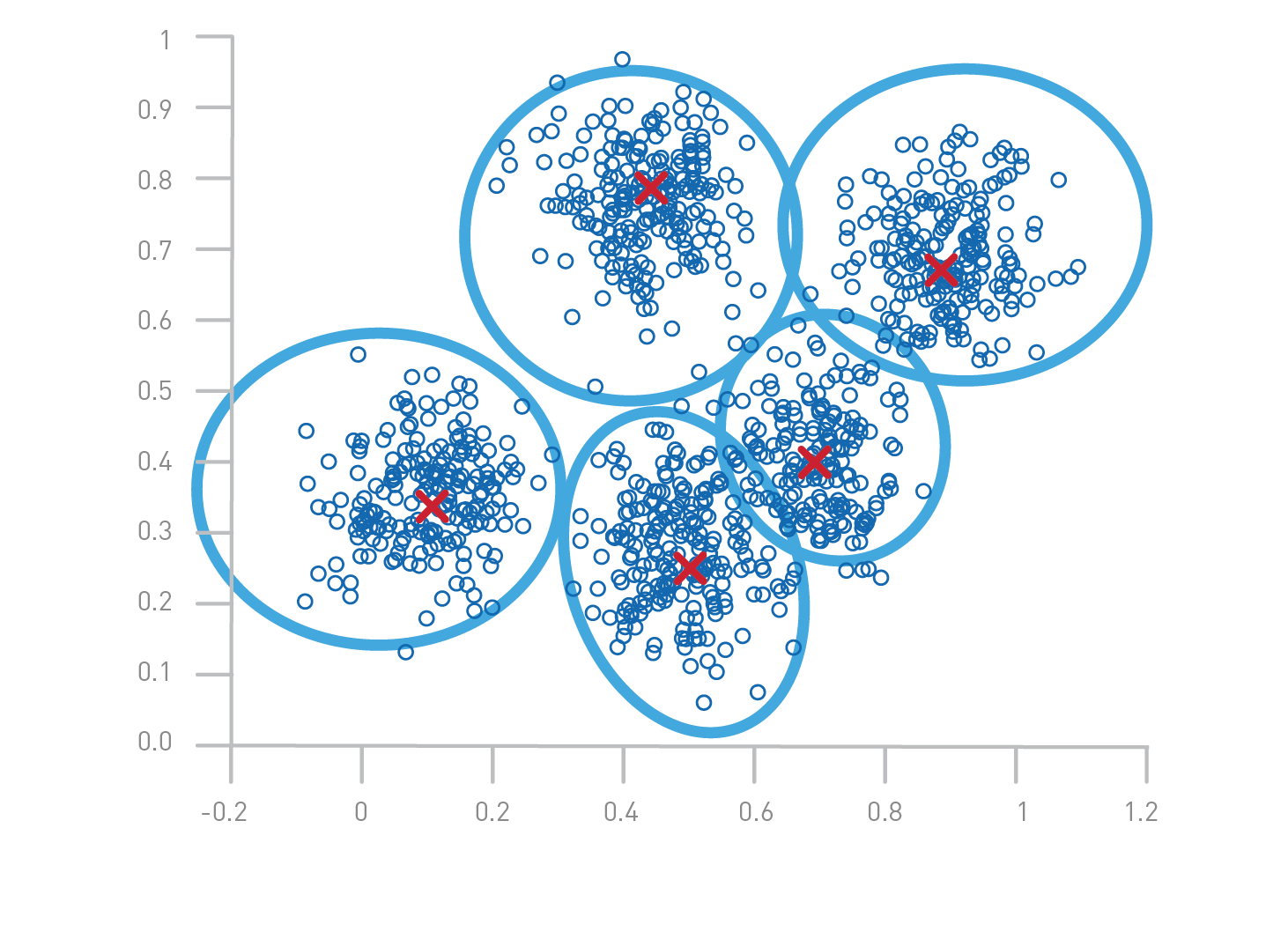

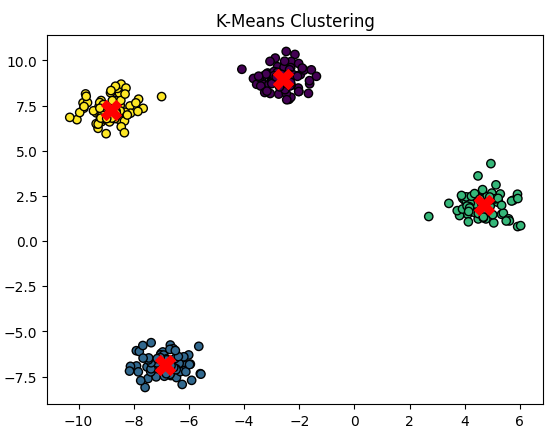

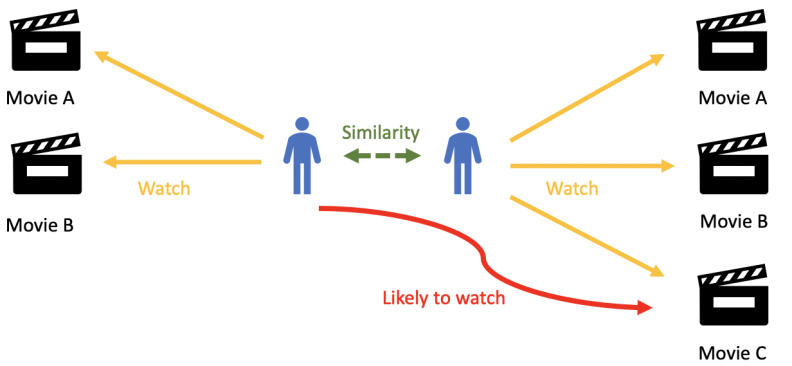

- Clustering: Grouping similar data points into clusters (e.g., customer segmentation).

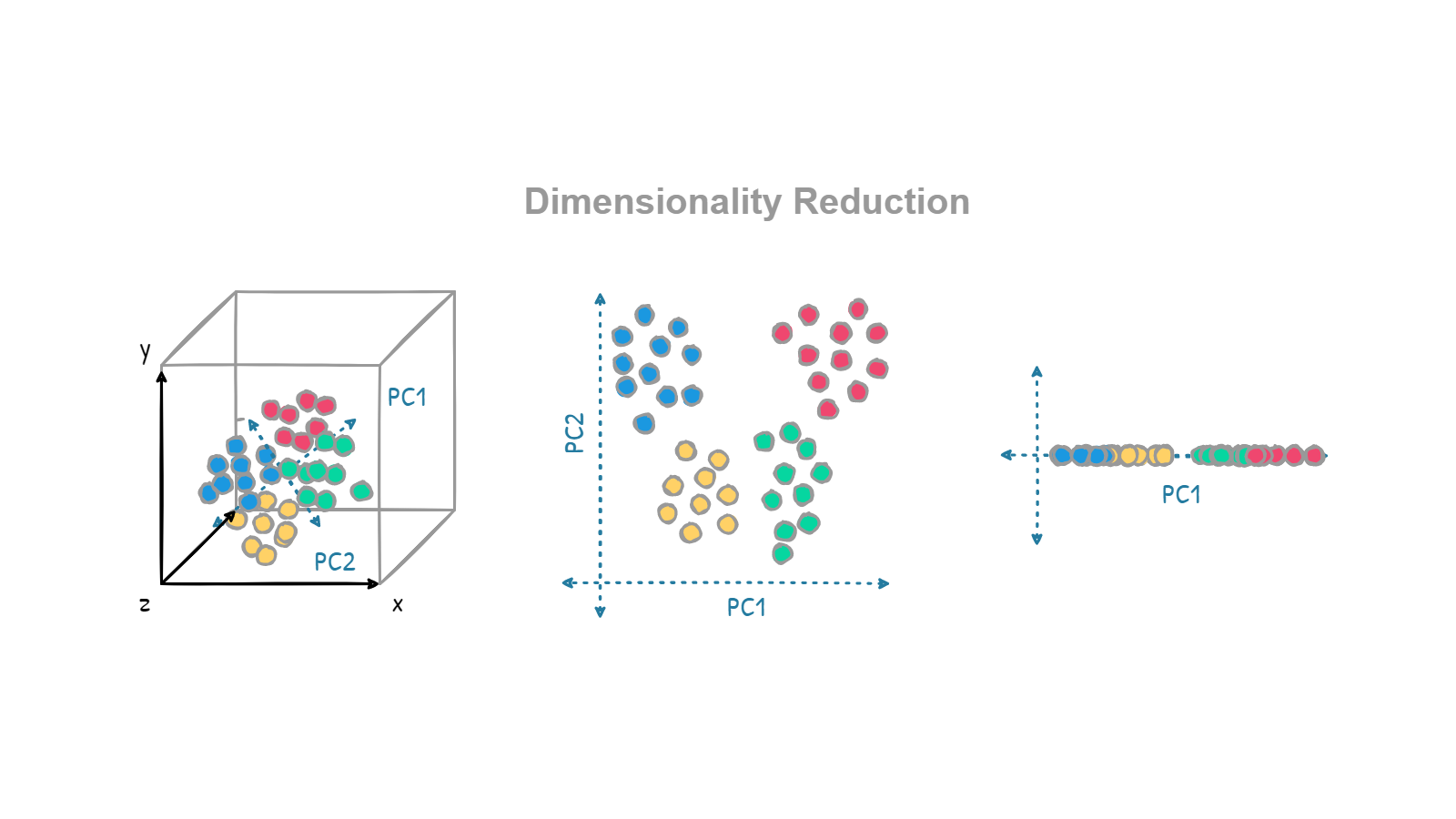

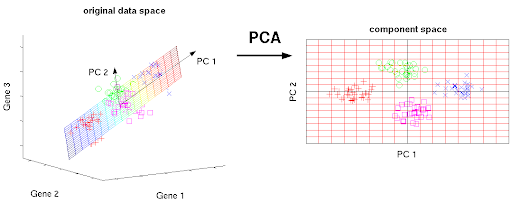

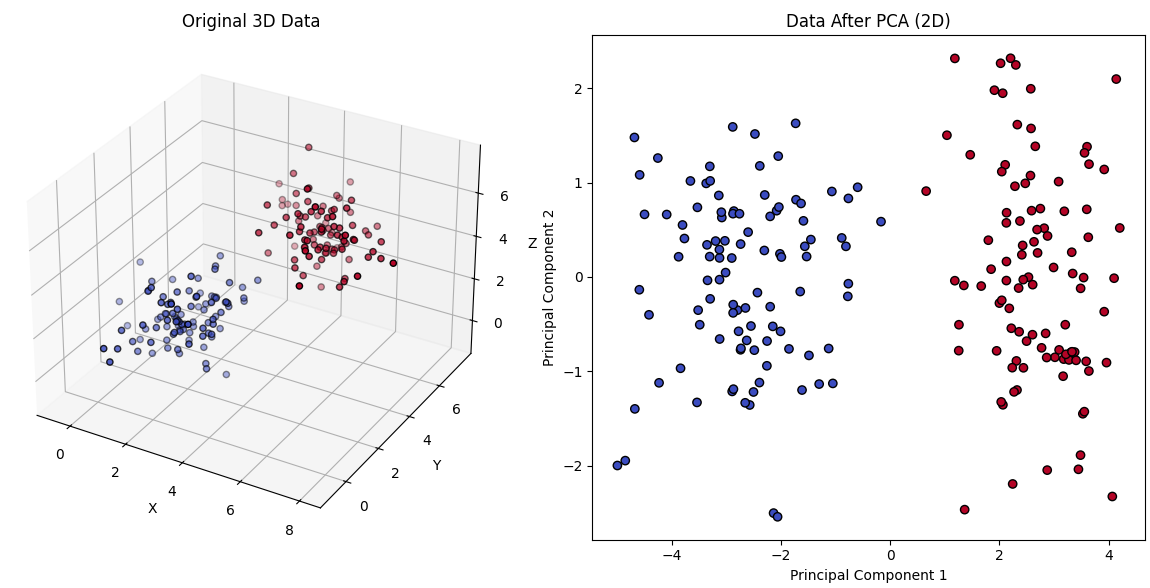

- Dimensionality Reduction: Reducing the number of features in the dataset while preserving important information (e.g., PCA).

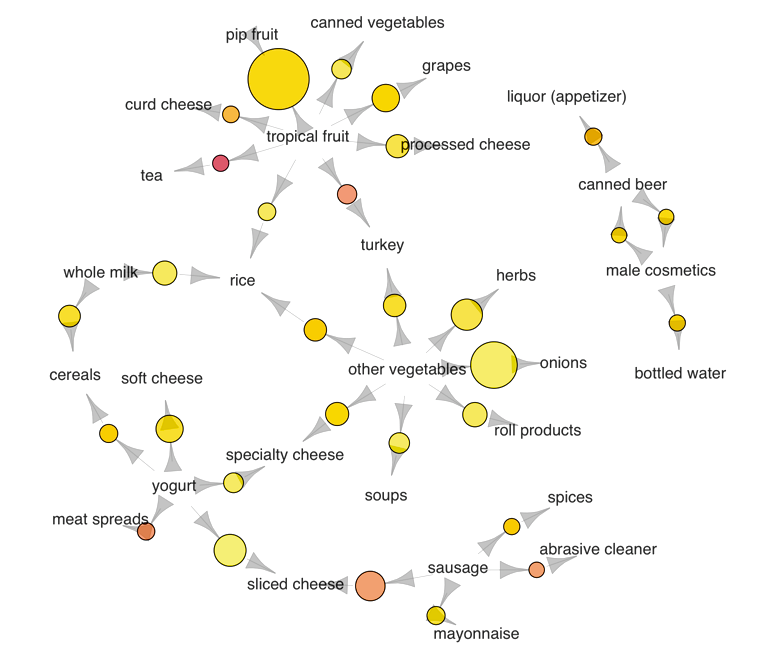

- Association: Discovering relationships or associations between variables in large datasets (e.g., market basket analysis).

Example: Clustering

- Scenario: Grouping customers for targeted marketing.

- Details:

- Input features (X): Customer age, income, purchase history, location, etc.

- No predefined labels (Y).

- Model's Job: Identify clusters of customers (e.g., "High-spenders," "Budget-conscious buyers").

Example: Dimensionality Reduction

- Scenario: Visualizing high-dimensional data.

- Details:

- Imagine you have a dataset with 100+ features (e.g., sensor data from a factory).

- Dimensionality reduction (e.g., PCA) helps reduce it to 2D or 3D for easier visualization.

- Model's Job: Keep the important structure of the data while reducing complexity.

Example: Association

- Scenario: Market basket analysis to identify product associations.

- Details:

- Input features (X): Transaction data showing items purchased together.

- No predefined labels (Y).

- Model's Job: Identify rules like "If a customer buys bread, they are likely to buy butter."

- Use Case: Recommendation systems, inventory planning.

Comparison Table

| Feature | Supervised Learning | Unsupervised Learning |
|---|---|---|
| Data Type | Labeled data (X, Y) | Unlabeled data (X only) |
| Goal | Predict outcomes | Find patterns or structures |
| Key Techniques | Regression, Classification | Clustering, Dimensionality Reduction, Association |
| Examples | Fraud detection, Stock price prediction | Market segmentation, Image compression |

Key Takeaways

- Supervised Learning requires labeled data and is commonly used for prediction tasks like regression and classification.

- Unsupervised Learning works with unlabeled data and focuses on finding hidden patterns through clustering or dimensionality reduction.

- Each technique has specific applications and is chosen based on the problem and the data available.

Linear Regression and Cost Function

1. Introduction

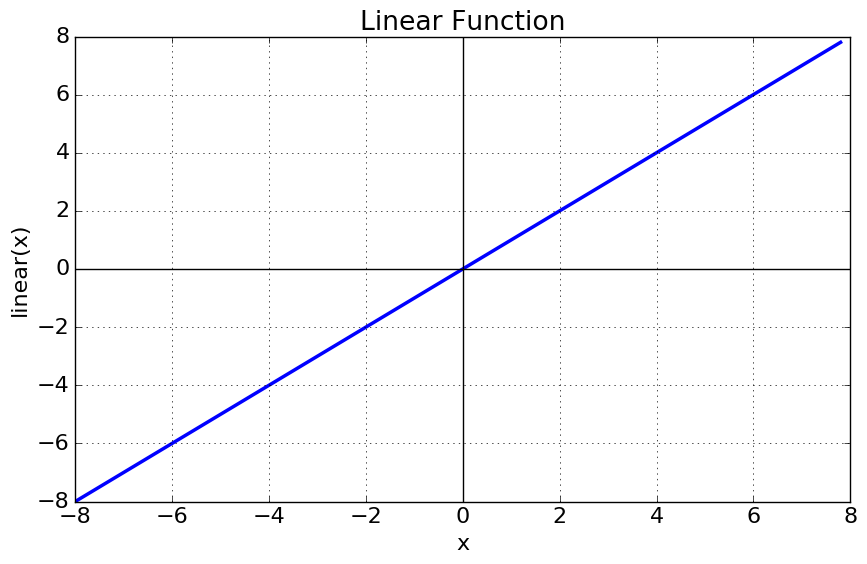

Linear regression is one of the fundamental algorithms in machine learning. It is widely used for predictive modeling, especially when the relationship between the input and output variables is assumed to be linear. The primary goal is to find the best-fitting line that minimizes the error between predicted values and actual values.

Why Linear Regression?

Linear regression is simple yet powerful for many real-world applications. Some common use cases include:

- Predicting house prices based on features like size, number of rooms, and location.

- Estimating salaries based on experience, education level, and industry.

- Understanding trends in various fields like finance, healthcare, and economics.

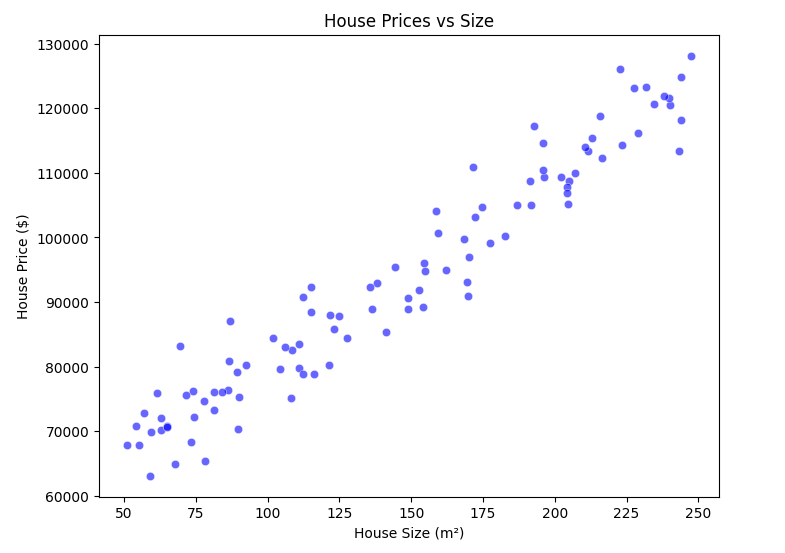

Real-World Example: Housing Prices

Consider predicting house prices based on the size of the house (in square meters). A simple linear relationship can be assumed: larger houses tend to have higher prices. This assumption is the foundation of our linear regression model.

2. Mathematical Representation

A simple linear regression model assumes a linear relationship between the input (house size in square meters) and the output (house price). It is represented as:

$$h_\theta(x) = \theta_0 + \theta_1 x$$

where:

- $h_\theta(x)$ is the predicted house price.
- $\theta_0$ (intercept) and $\theta_1$ (slope) are the parameters of the model.
- $x$ is the house size.
- $y$ is the actual house price.

2.1 Understanding the Linear Model

But what does this equation really mean?

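- $\theta_0$ (intercept): The price of a house when its size is 0 m².
- $\theta_1$ (slope): The increase in house price for every additional square meter.

For example, for given values of $\theta_0$ and $\theta_1$:

- A 100 m² house would cost $h_\theta(100) = \theta_0 + 100\,\theta_1$.
- A 200 m² house would cost $h_\theta(200) = \theta_0 + 200\,\theta_1$.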

We can visualize this relationship using a regression line.
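As a quick numeric check, the minimal sketch below plugs assumed parameter values into $h_\theta(x) = \theta_0 + \theta_1 x$. The values $\theta_0 = 50{,}000$ and $\theta_1 = 300$ are an assumption, chosen to match the data-generating formula used in section 3.2; the function name `predict_price` is hypothetical.

```python
def predict_price(size_m2: float, theta_0: float = 50_000.0, theta_1: float = 300.0) -> float:
    """Linear model h(x) = theta_0 + theta_1 * x (parameter values are assumed)."""
    return theta_0 + theta_1 * size_m2


for size in (100, 200):
    print(f"{size} m² -> ${predict_price(size):,.0f}")
# 100 m² -> $80,000
# 200 m² -> $110,000
```

Doubling the size from 100 m² to 200 m² adds $100 \cdot \theta_1 = 30{,}000$ to the price, while the base price $\theta_0$ stays the same.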

3. Implementing Linear Regression Step by Step

To make the theoretical concepts clearer, let's implement the regression model step by step using Python.

3.1 Import Necessary Libraries

```python
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
```

3.2 Generate Sample Data

```python
np.random.seed(42)
x = 50 + 200 * np.random.rand(100, 1)  # House sizes in m² (50 to 250)
y = 50000 + 300 * x + np.random.randn(100, 1) * 5000  # House prices with noise
```

Here, we create a dataset with 100 samples, where:

- $x$ represents house sizes (random values between 50 and 250 m²).
- $y$ represents house prices, following the linear relation $y = 50{,}000 + 300x$ plus Gaussian noise with a standard deviation of 5,000.

3.3 Visualizing the Data

```python
plt.figure(figsize=(8,6))
sns.scatterplot(x=x.flatten(), y=y.flatten(), color='blue', alpha=0.6)
plt.xlabel('House Size (m²)')
plt.ylabel('House Price ($)')
plt.title('House Prices vs Size')
plt.show()
```

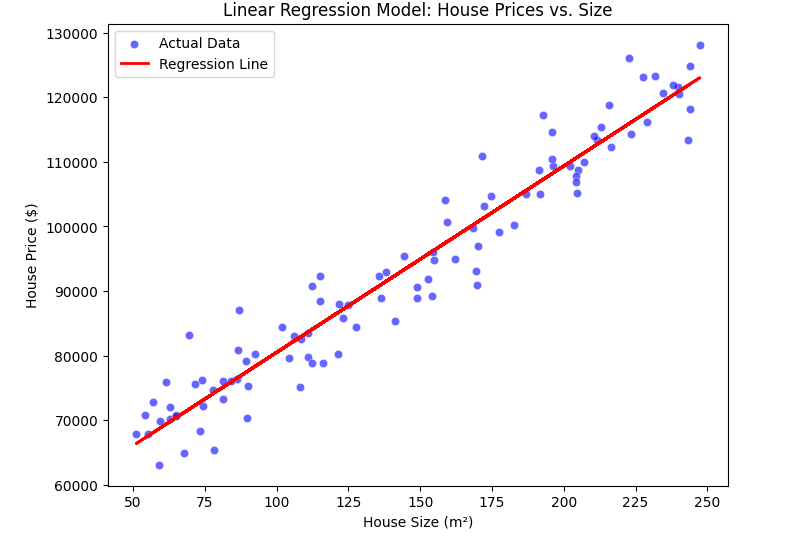

3.4 Fitting the Regression Line

Before moving on to the cost function, let's fit a simple regression line to our data and visualize it.

In real-world applications, we rarely compute these parameters by hand; libraries such as scikit-learn perform linear regression efficiently.

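3.4.1 Compute the Slope ($\theta_1$)

```python
theta_1 = np.sum((x - np.mean(x)) * (y - np.mean(y))) / np.sum((x - np.mean(x))**2)
```

Here, we compute the slope ($\theta_1$) using the least squares method:

$$\theta_1 = \frac{\sum_{i=1}^{m} (x^{(i)} - \bar{x})(y^{(i)} - \bar{y})}{\sum_{i=1}^{m} (x^{(i)} - \bar{x})^2}$$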

3.4.2 Compute the Intercept ($\theta_0$)

```python
theta_0 = np.mean(y) - theta_1 * np.mean(x)
```

This calculates the intercept, $\theta_0 = \bar{y} - \theta_1 \bar{x}$, which ensures that the regression line passes through the point of means $(\bar{x}, \bar{y})$.
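To sanity-check the hand-computed parameters, the sketch below regenerates the sample data from section 3.2 and compares the closed-form estimates from 3.4.1 and 3.4.2 with NumPy's `np.polyfit`, which fits a degree-1 polynomial and returns `[slope, intercept]`. This is an independent cross-check, not the method used in these notes.

```python
import numpy as np

# Same synthetic data as in section 3.2
np.random.seed(42)
x = 50 + 200 * np.random.rand(100, 1)
y = 50000 + 300 * x + np.random.randn(100, 1) * 5000

# Closed-form least-squares estimates (as in 3.4.1 and 3.4.2)
theta_1 = np.sum((x - np.mean(x)) * (y - np.mean(y))) / np.sum((x - np.mean(x)) ** 2)
theta_0 = np.mean(y) - theta_1 * np.mean(x)

# Independent check: degree-1 polynomial fit (expects 1-D arrays)
slope, intercept = np.polyfit(x.ravel(), y.ravel(), deg=1)

print(f"closed form: theta_0={theta_0:.1f}, theta_1={theta_1:.2f}")
print(f"np.polyfit:  theta_0={intercept:.1f}, theta_1={slope:.2f}")
```

Both estimates should agree and land close to the true values (50,000 and 300) used to generate the data, differing only because of the added noise.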

3.5 Plotting the Regression Line

```python
y_pred = theta_0 + theta_1 * x  # Compute predicted values

plt.figure(figsize=(8,6))
sns.scatterplot(x=x.flatten(), y=y.flatten(), color='blue', alpha=0.6, label='Actual Data')
plt.plot(x, y_pred, color='red', linewidth=2, label='Regression Line')
plt.xlabel('House Size (m²)')
plt.ylabel('House Price ($)')
plt.title('Linear Regression Model: House Prices vs. Size')
plt.legend()
plt.show()
```

3.6 Interpretation of the Regression Line

Now, what does this line tell us?

✅ If the slope is positive, then larger houses cost more (as expected).

✅ If the intercept is high, it means even the smallest houses have a significant base price.

✅ The steepness of the line shows how much price increases per square meter.

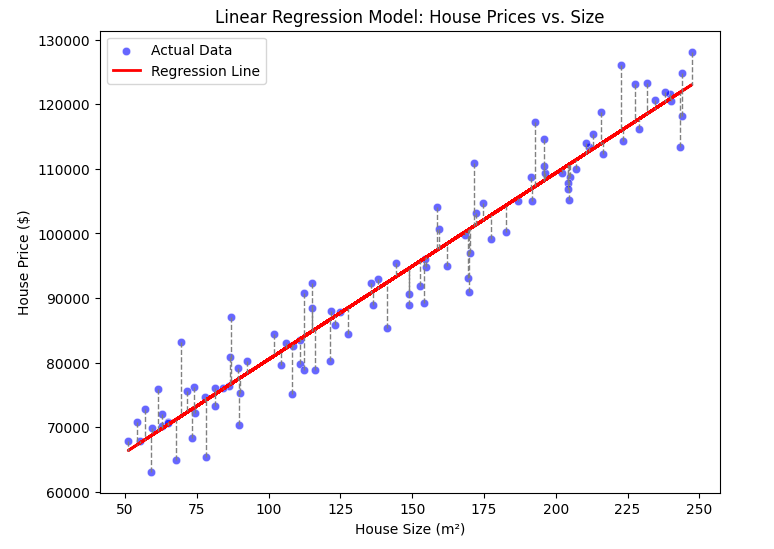

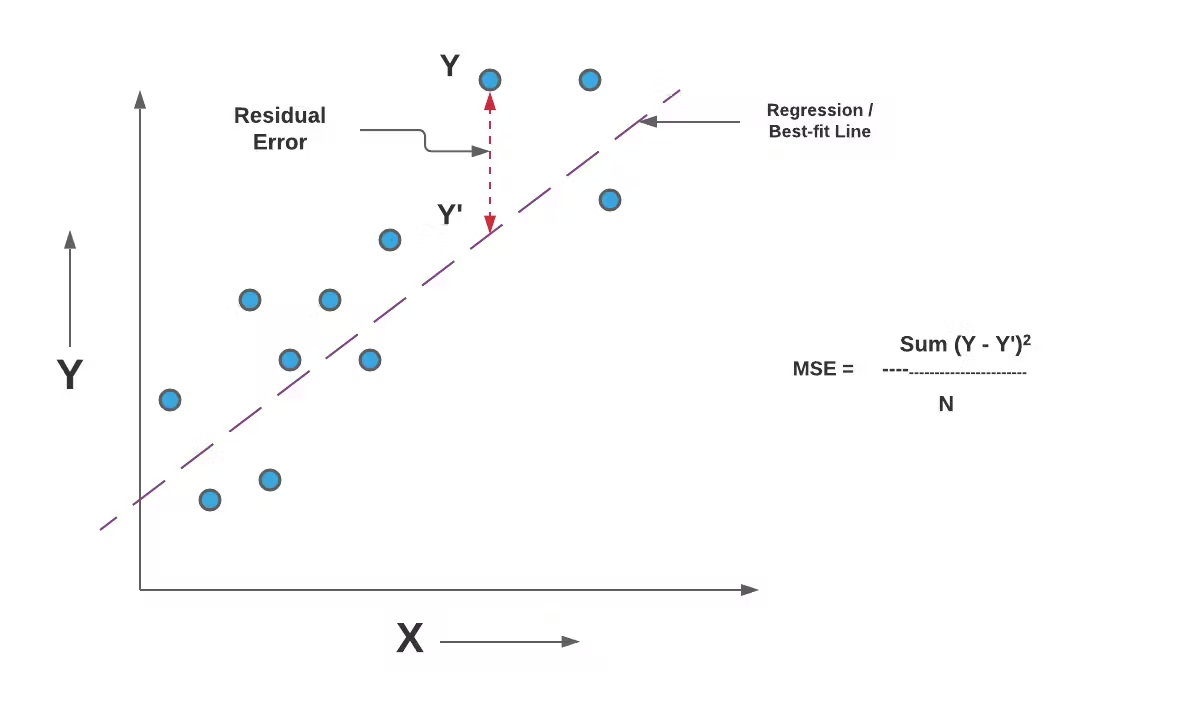

4. Cost Function

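To measure how well our model is performing, we use a cost function. The most common choice for linear regression is based on the Mean Squared Error (MSE):

$$J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

where:

- $m$ is the number of training examples.
- $h_\theta(x^{(i)})$ is the predicted price for the $i$-th house.
- $y^{(i)}$ is the actual price of the $i$-th house.

The extra factor of $\frac{1}{2}$ is a convention that cancels the 2 produced when differentiating the square.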

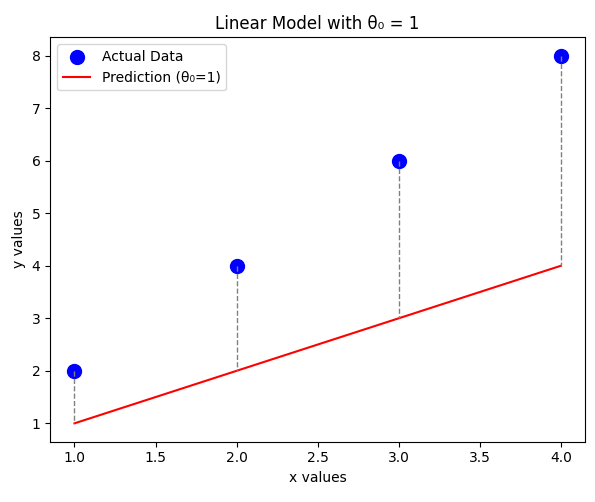

Each dashed line in the figure represents an error $h_\theta(x^{(i)}) - y^{(i)}$. The formula above squares these errors and sums them: $\sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$.

This function measures the average squared difference between predicted and actual values, penalizing larger errors more heavily. The goal is to minimize $J(\theta_0, \theta_1)$ to find the best model parameters.
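A direct translation of the cost function into NumPy might look like the minimal sketch below. The function name `compute_cost_simple` and the three-point dataset are hypothetical, chosen so the result can be checked by hand.

```python
import numpy as np


def compute_cost_simple(x, y, theta_0, theta_1):
    """J(theta_0, theta_1) = 1/(2m) * sum((h(x_i) - y_i)^2) for h(x) = theta_0 + theta_1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    m = len(x)                              # number of training examples
    errors = theta_0 + theta_1 * x - y      # h(x_i) - y_i for every example
    return np.sum(errors ** 2) / (2 * m)


# Errors are (0, 0, -1), so J = 1 / (2 * 3), about 0.1667
print(compute_cost_simple([1, 2, 3], [2, 4, 7], theta_0=0.0, theta_1=2.0))
```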

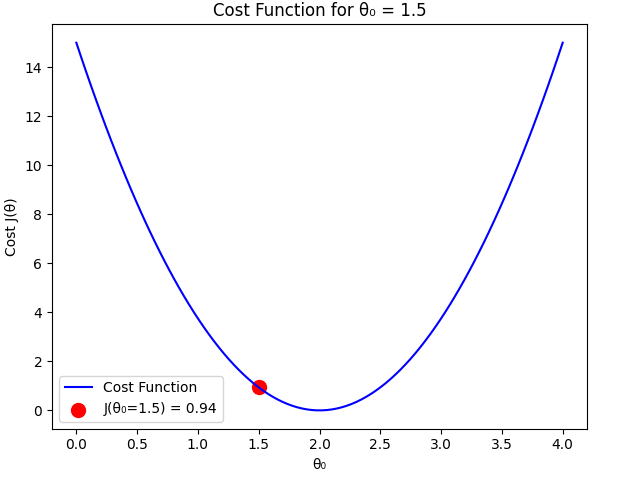

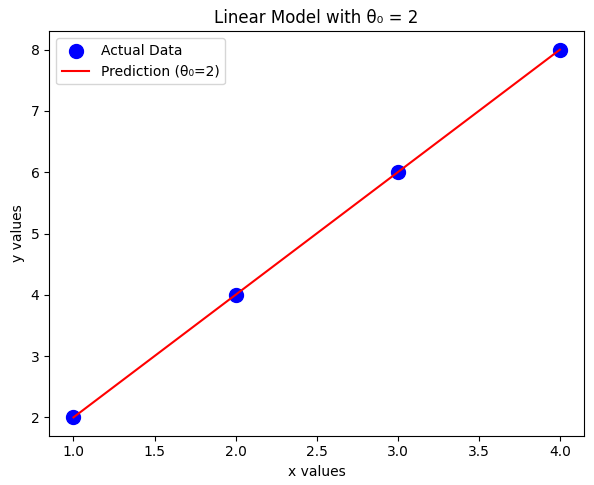

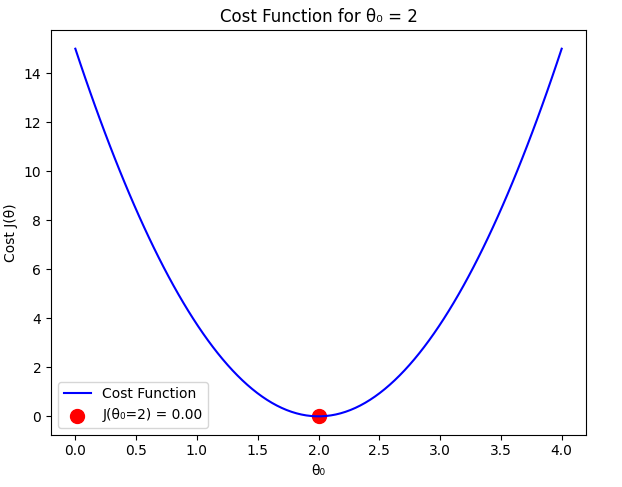

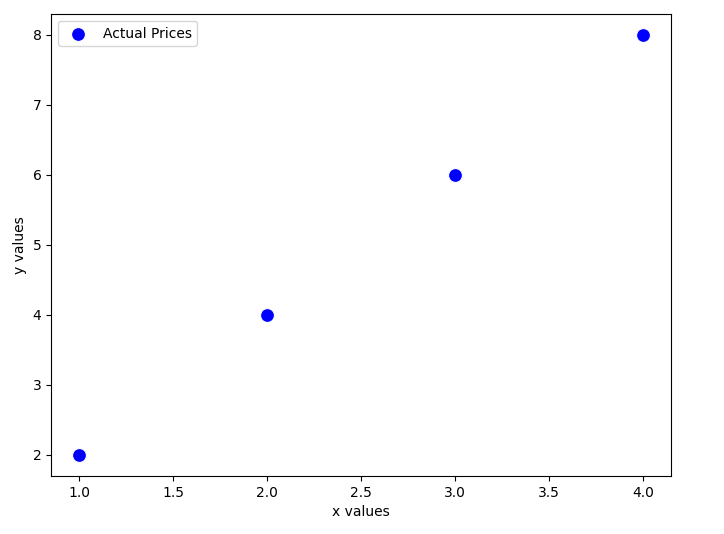

4.1 Example: Assuming

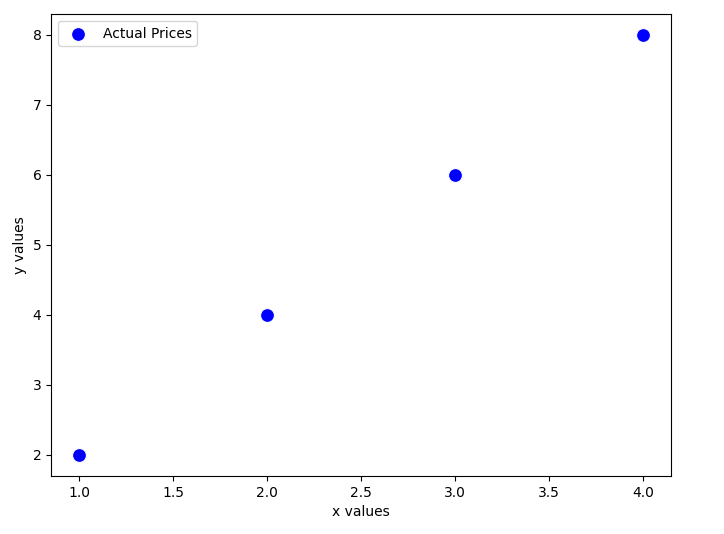

To illustrate how the cost function behaves, let's assume that , meaning our model only depends on . We'll use a small dataset with four x values and y values:

| x values | y values |
|---|---|
| 1 | 2 |
| 2 | 4 |
| 3 | 6 |
| 4 | 8 |

Since we assume , our hypothesis function simplifies to:

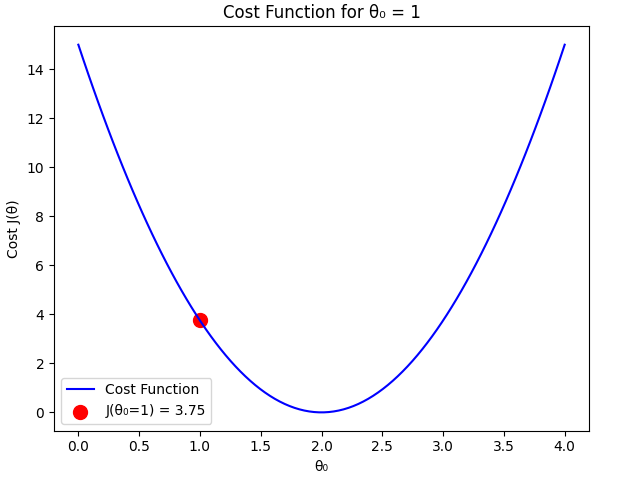

We'll evaluate different values of and compute the corresponding cost function.

Case 1:

For , the predicted values are:

The error values:

Computing the cost function:

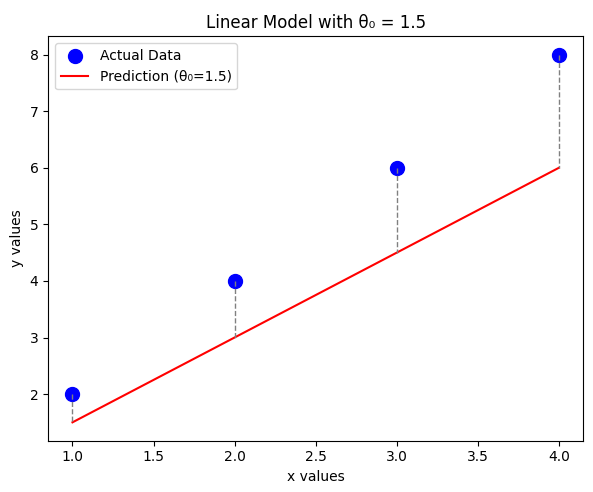

Case 2:

For , the predicted values are:

The error values:

Computing the cost function:

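Case 3: $\theta_1 = 2$ (Optimal Case)

For $\theta_1 = 2$, the predicted values match the actual values:

$$h_\theta(x) = 2x \;\Rightarrow\; (2,\ 4,\ 6,\ 8)$$

The error values:

$$h_\theta(x^{(i)}) - y^{(i)} = (0,\ 0,\ 0,\ 0)$$

Computing the cost function:

$$J(2) = \frac{1}{2 \cdot 4} \left( 0^2 + 0^2 + 0^2 + 0^2 \right) = 0$$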

Comparison

From our calculations:

As expected, the cost function is minimized when $\theta_1 = 2$, which fits the dataset perfectly. Any deviation from this value results in a higher cost.
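The comparison can be reproduced numerically. The sketch below evaluates $J(\theta_1)$ on the four-point dataset for a grid of $\theta_1$ values (the grid from 0 to 4 in steps of 0.5 is an arbitrary choice) and reports where the cost is smallest.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 4.0, 6.0, 8.0])
m = len(x)


def cost(theta_1):
    """J(theta_1) with theta_0 fixed at 0, i.e. h(x) = theta_1 * x."""
    return np.sum((theta_1 * x - y) ** 2) / (2 * m)


thetas = np.linspace(0.0, 4.0, 9)     # 0.0, 0.5, ..., 4.0
costs = np.array([cost(t) for t in thetas])

for t, c in zip(thetas, costs):
    print(f"theta_1 = {t:.1f}  ->  J = {c:.4f}")

best = thetas[np.argmin(costs)]
print("lowest cost at theta_1 =", best)   # 2.0
```

Because $J(\theta_1)$ is a parabola in $\theta_1$, the printed costs fall toward $\theta_1 = 2$ and rise symmetrically on the other side.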

But how can the machine find this value without us testing candidates by hand, and how can we teach it to do so? The answer is the next topic.

- Introduction to Gradient Descent

- Mathematical Formulation of Gradient Descent

- Learning Rate ($\alpha$)

- Gradient Descent Convergence

- Local Minimum vs Global Minimum

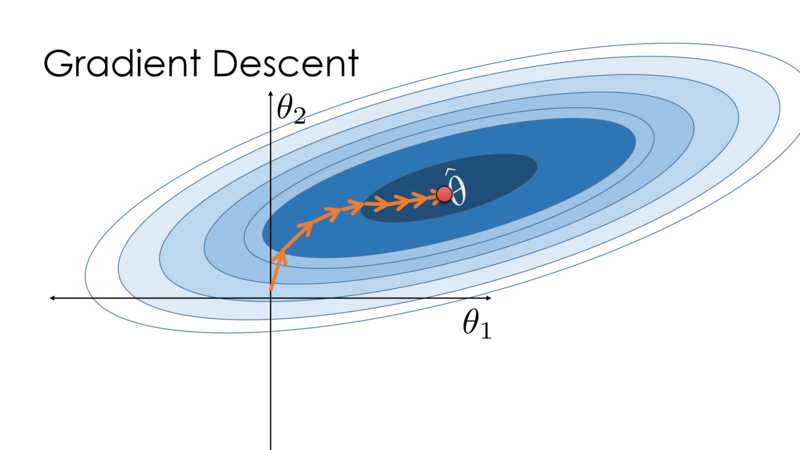

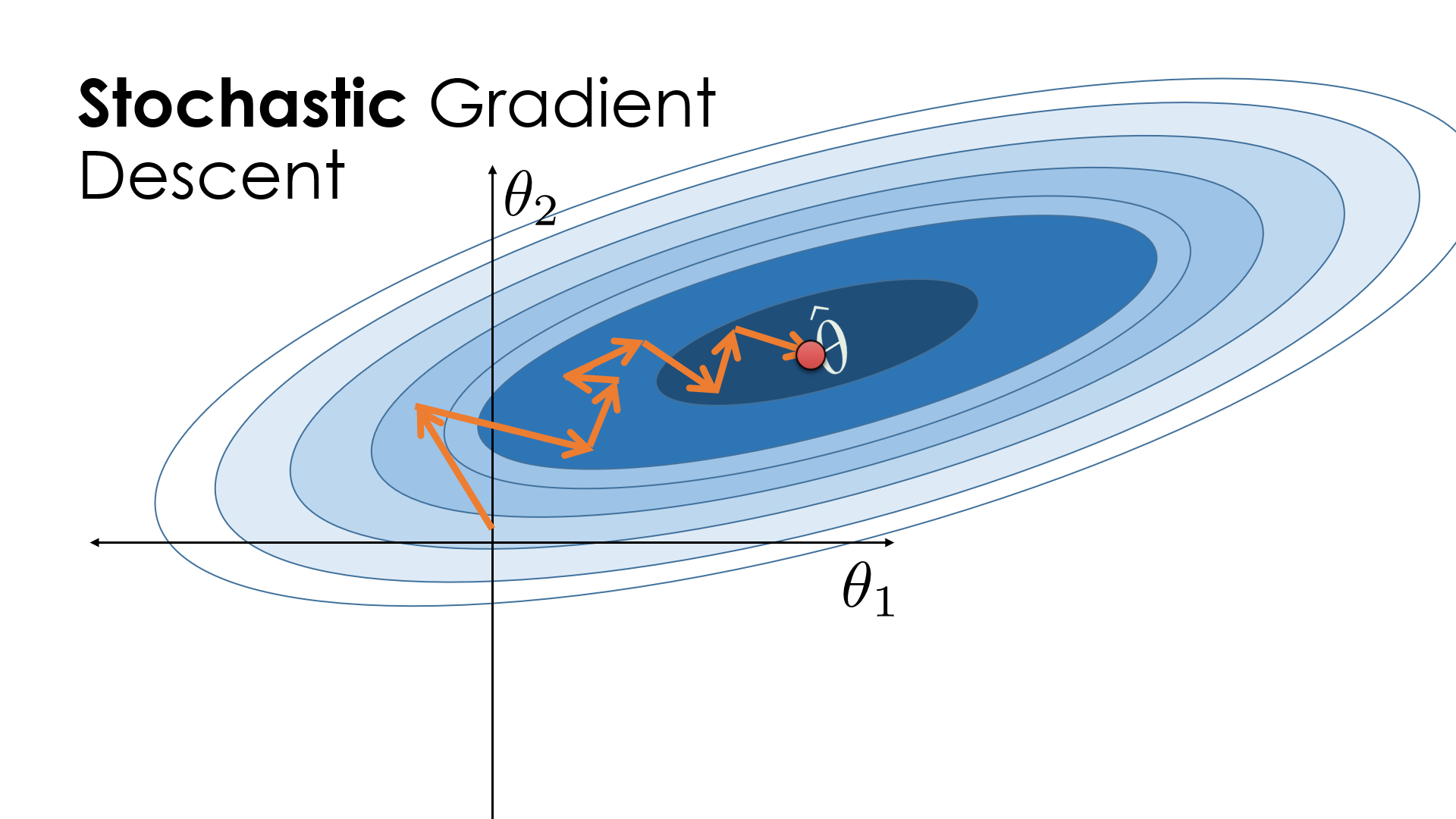

Introduction to Gradient Descent

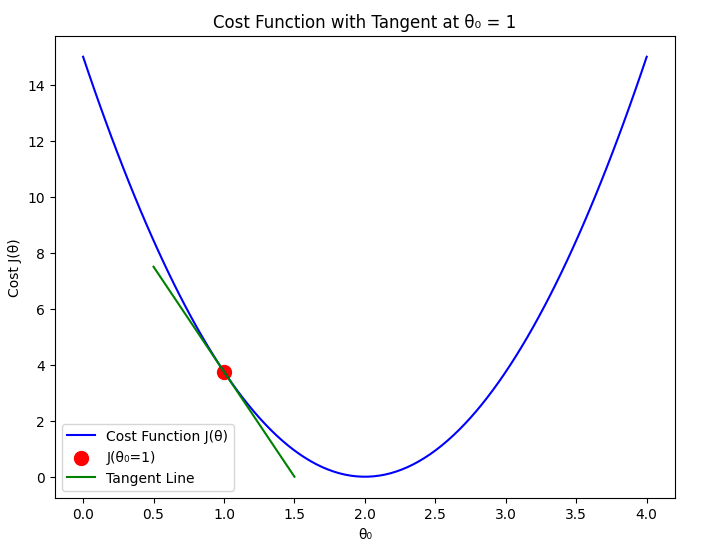

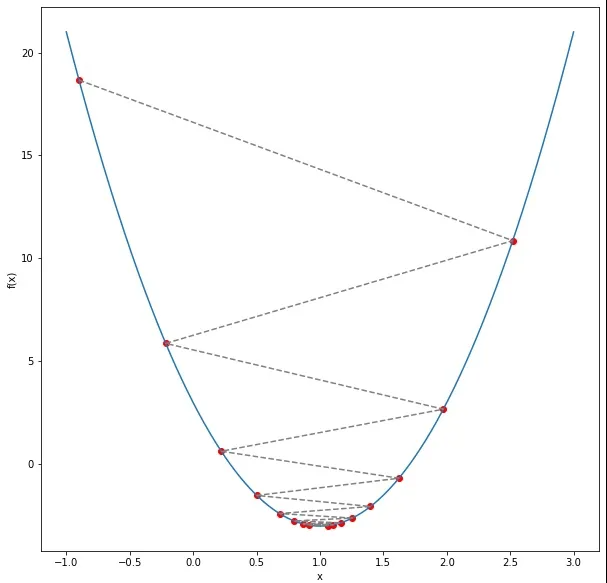

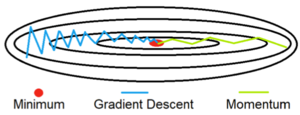

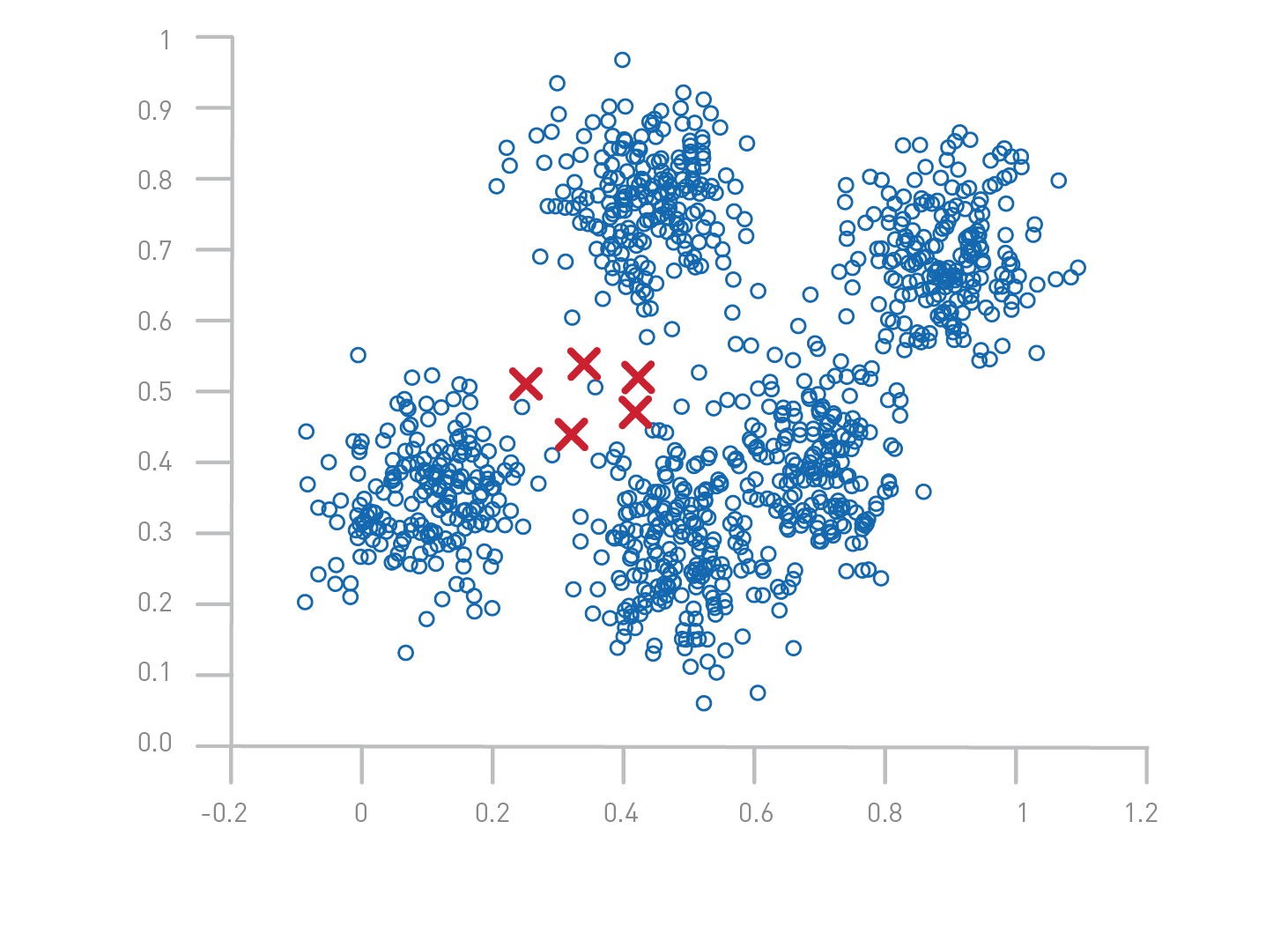

In the previous section, we explored how the cost function behaves for different values of $\theta_1$ with $\theta_0 = 0$ (fixing $\theta_0$ at zero makes the cost easy to visualize). Now we introduce Gradient Descent, an optimization algorithm used to find the parameters that minimize the cost function $J(\theta_1)$.

With $\theta_0 = 0$, our hypothesis function simplifies to:

$$h_\theta(x) = \theta_1 x$$

Gradient Descent is an iterative method that updates the parameter $\theta_1$ step by step in the direction that reduces the cost function. It finds the optimal value of $\theta_1$ efficiently instead of requiring us to test different values manually.

To understand how Gradient Descent works, let's recall our dataset:

| x values | y values |
|---|---|
| 1 | 2 |
| 2 | 4 |
| 3 | 6 |
| 4 | 8 |

We aim to find the value of $\theta_1$ that minimizes the error between our predictions and the actual values. Gradient Descent iteratively adjusts $\theta_1$ to reach the minimum cost.

Mathematical Formulation of Gradient Descent

Gradient Descent is an optimization algorithm used to minimize a function by iteratively updating its parameters in the direction of steepest descent. In our case, we aim to minimize the cost function:

$$J(\theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

Where:

- $m$ is the number of training examples.
- $h_\theta(x^{(i)}) = \theta_1 x^{(i)}$ represents our hypothesis function (predicted values).
- $y^{(i)}$ represents the actual target values.
- Goal: Find the optimal $\theta_1$ that minimizes $J(\theta_1)$.

1. Gradient Descent Update Rule

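Gradient Descent uses the derivative of the cost function to determine the direction and magnitude of updates. The general update rule for $\theta_1$ is:

$$\theta_1 := \theta_1 - \alpha \frac{\partial}{\partial \theta_1} J(\theta_1)$$

Where:

- $\alpha$ (learning rate) controls the step size of updates.
- $\frac{\partial}{\partial \theta_1} J(\theta_1)$ is the gradient (derivative) of the cost function with respect to $\theta_1$.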

Why Do We Use the Derivative?

The derivative tells us the slope of the cost function. If the slope is positive, we need to decrease $\theta_1$; if it is negative, we need to increase $\theta_1$. Either way, we move toward the minimum of $J(\theta_1)$. Without derivatives, we wouldn't know which direction to move to minimize the function.

The gradient tells us how steeply the function increases or decreases at a given point.

- If the gradient is positive, $\theta_1$ is decreased.
- If the gradient is negative, $\theta_1$ is increased.

This ensures that we move toward the minimum of the cost function.

2. Computing the Gradient

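First, recall our hypothesis function:

$$h_\theta(x) = \theta_1 x$$

Now, we compute the derivative of the cost function:

$$\frac{\partial}{\partial \theta_1} J(\theta_1) = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x^{(i)}$$

This expression is the average of each prediction error multiplied by its input value (the factor of 2 from differentiating the square cancels the $\frac{1}{2}$ in $J$). Using this gradient, we update $\theta_1$ in each iteration:

$$\theta_1 := \theta_1 - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( \theta_1 x^{(i)} - y^{(i)} \right) x^{(i)}$$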

- If the error is large, the update step is bigger.

- If the error is small, the update step is smaller.

This way, the algorithm gradually moves toward the optimal $\theta_1$.
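Putting the update rule and the gradient together gives the minimal sketch below, which runs gradient descent on $\theta_1$ for the four-point dataset (with $\theta_0 = 0$). The starting value, learning rate, and number of iterations are assumptions chosen for illustration.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 4.0, 6.0, 8.0])
m = len(x)


def gradient(theta_1):
    """dJ/dtheta_1 = (1/m) * sum((theta_1 * x_i - y_i) * x_i)."""
    return np.sum((theta_1 * x - y) * x) / m


theta_1 = 0.0     # assumed starting point
alpha = 0.05      # assumed learning rate
for step in range(100):
    theta_1 = theta_1 - alpha * gradient(theta_1)

print(round(theta_1, 4))   # close to 2.0
```

Each step moves $\theta_1$ against the sign of the gradient, and the steps shrink automatically as the errors (and therefore the gradient) approach zero.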

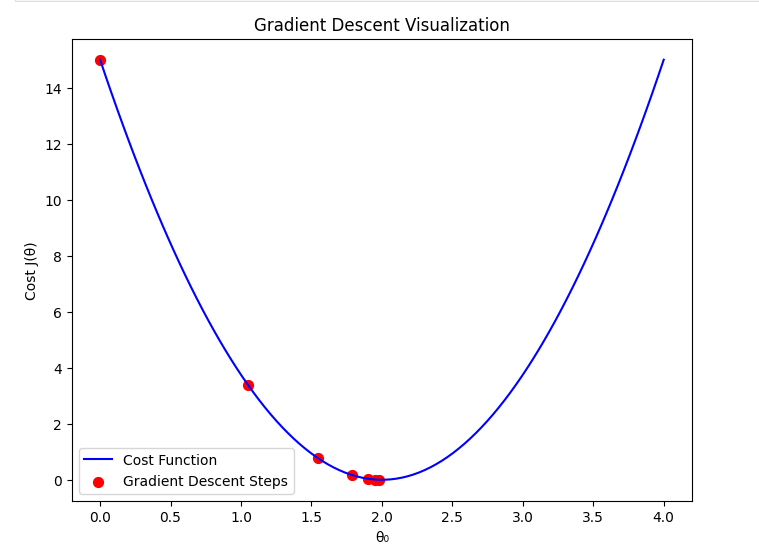

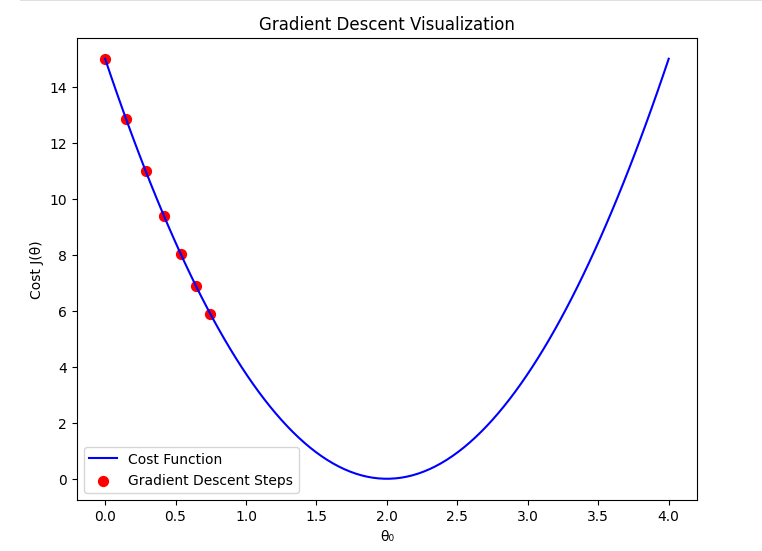

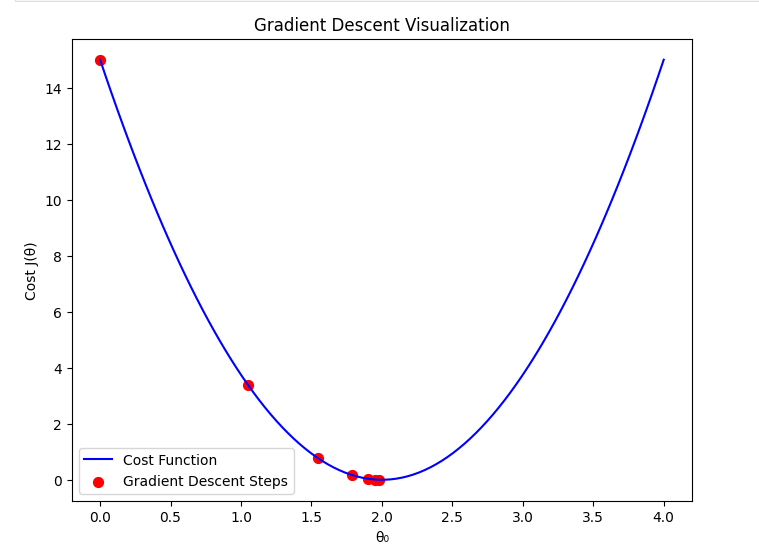

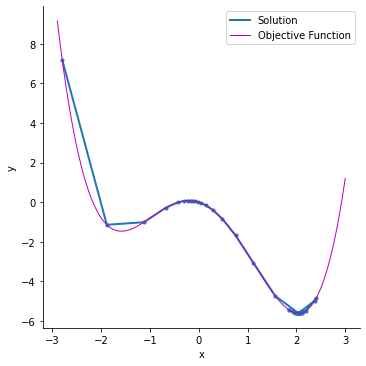

Learning Rate ($\alpha$)

The learning rate $\alpha$ is a crucial parameter in the gradient descent algorithm. It determines how large a step we take in the direction of the negative gradient during each iteration. Choosing an appropriate learning rate is essential for efficient convergence of the algorithm.

If the learning rate is too small, the algorithm will take tiny steps towards the minimum, leading to slow convergence. On the other hand, if the learning rate is too large, the algorithm may overshoot the minimum or even diverge, never reaching an optimal solution.

1. When $\alpha$ is Too Small

If the learning rate is set too small:

- Gradient descent will take very small steps in each iteration.

- Convergence to the minimum cost will be extremely slow.

- It may take a large number of iterations to reach a useful solution.

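- The algorithm may spend many iterations crossing flat regions of the cost function, slowing down learning.

Mathematically, the update rule is $\theta_1 := \theta_1 - \alpha \frac{\partial}{\partial \theta_1} J(\theta_1)$. When $\alpha$ is very small, the change in $\theta_1$ per step is minimal, making the process inefficient.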

2. When $\alpha$ is Optimal

If the learning rate is chosen optimally:

- The gradient descent algorithm moves efficiently towards the minimum.

- It balances speed and stability, converging in a reasonable number of iterations.

- The cost function decreases steadily without oscillations or divergence.

A well-chosen $\alpha$ ensures that gradient descent follows a smooth and steady path to the minimum.

3. When $\alpha$ is Too Large

If the learning rate is set too large:

- Gradient descent may take excessively large steps.

- Instead of converging, it may oscillate around the minimum or diverge entirely.

- The cost function might increase instead of decreasing due to overshooting the optimal $\theta_1$.

In extreme cases, the cost function values might increase indefinitely, causing the algorithm to fail to find a minimum.
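The three regimes can be reproduced on the four-point dataset. For this data the gradient simplifies to $7.5(\theta_1 - 2)$, so each update multiplies the distance to the optimum by $(1 - 7.5\alpha)$, and any $\alpha > 2/7.5 \approx 0.267$ diverges. The sketch below tries three assumed learning rates and prints $\theta_1$ and the cost after 20 steps.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 4.0, 6.0, 8.0])
m = len(x)


def cost(theta_1):
    return np.sum((theta_1 * x - y) ** 2) / (2 * m)


def gradient(theta_1):
    return np.sum((theta_1 * x - y) * x) / m


def run(alpha, steps=20, theta_1=0.0):
    """Run plain gradient descent and return the final theta_1."""
    for _ in range(steps):
        theta_1 -= alpha * gradient(theta_1)
    return theta_1


# Assumed learning rates: too small, reasonable, too large
for alpha in (0.01, 0.1, 0.3):
    theta = run(alpha)
    print(f"alpha={alpha:<5} theta_1={theta:10.4f}  J={cost(theta):.4g}")
```

With $\alpha = 0.01$, $\theta_1$ is still far from 2 after 20 steps; with $\alpha = 0.1$ it has converged; with $\alpha = 0.3$ each step overshoots further and the cost grows.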

Summary

Selecting the right learning rate is essential for gradient descent to work efficiently. A well-balanced $\alpha$ ensures that the algorithm converges quickly and effectively. In the next section, we will implement gradient descent with different learning rates to visualize their effects.

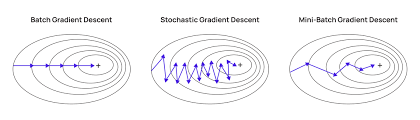

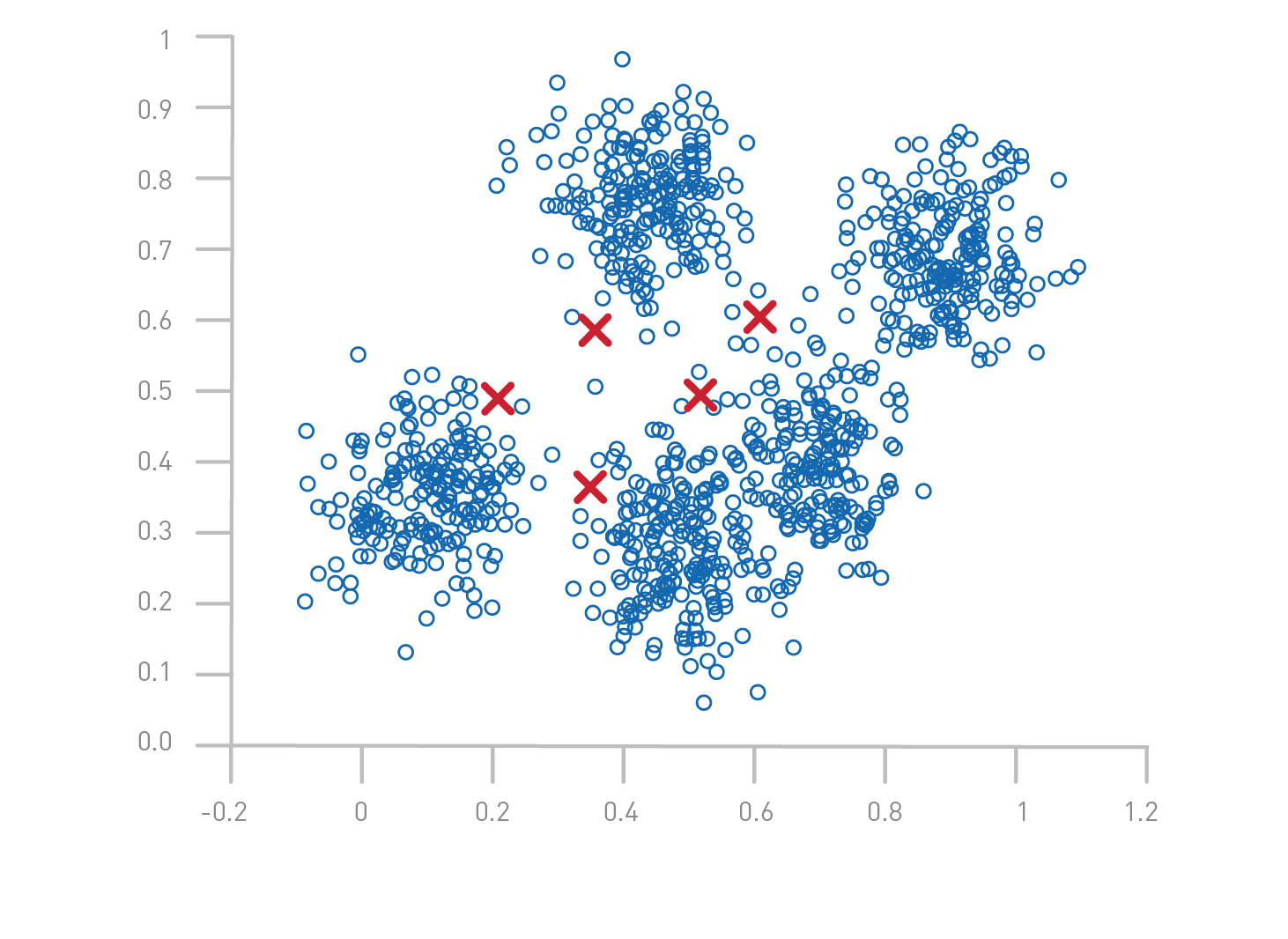

Gradient Descent Convergence

Gradient Descent is an iterative optimization algorithm that minimizes the cost function $J(\theta)$ by updating parameters step by step. However, we need a proper stopping criterion to determine when the algorithm has converged.

1. Convergence Criteria

The algorithm should stop when one of the following conditions is met:

- Small Gradient: If the derivative (gradient) of the cost function is close to zero, meaning the algorithm is near the optimal point.

- Minimal Cost Change: If the difference in the cost function between iterations is below a predefined threshold ($\epsilon$).

- Maximum Iterations: A fixed number of iterations is reached to avoid infinite loops.

2. Choosing the Right Stopping Condition

- Stopping Too Early: If the algorithm stops before reaching the optimal solution, the model may not perform well.

- Stopping Too Late: Running too many iterations may waste computational resources without significant improvement.

- Optimal Stopping: The best condition is when further updates do not significantly change the cost function or parameters.
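The stopping rules above can be combined in one loop. The sketch below, again on the four-point dataset, stops when the gradient is nearly zero, when the cost changes by less than a tolerance $\epsilon$, or when a maximum number of iterations is reached. The thresholds and the helper name `gradient_descent` are assumptions for illustration, not recommended values.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 4.0, 6.0, 8.0])
m = len(x)


def cost(theta_1):
    return np.sum((theta_1 * x - y) ** 2) / (2 * m)


def gradient(theta_1):
    return np.sum((theta_1 * x - y) * x) / m


def gradient_descent(theta_1=0.0, alpha=0.05, eps=1e-10, grad_tol=1e-8, max_iters=10_000):
    """Return (theta_1, iterations, reason) once a stopping condition is met."""
    prev_cost = cost(theta_1)
    for i in range(1, max_iters + 1):
        g = gradient(theta_1)
        if abs(g) < grad_tol:                      # small gradient
            return theta_1, i, "small gradient"
        theta_1 -= alpha * g
        new_cost = cost(theta_1)
        if abs(prev_cost - new_cost) < eps:        # minimal cost change
            return theta_1, i, "cost change below eps"
        prev_cost = new_cost
    return theta_1, max_iters, "max iterations"    # safety net against endless loops


theta, iters, reason = gradient_descent()
print(f"theta_1={theta:.6f} after {iters} iterations ({reason})")
```

Here the cost-change rule fires long before the iteration cap, which only acts as a safety net.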

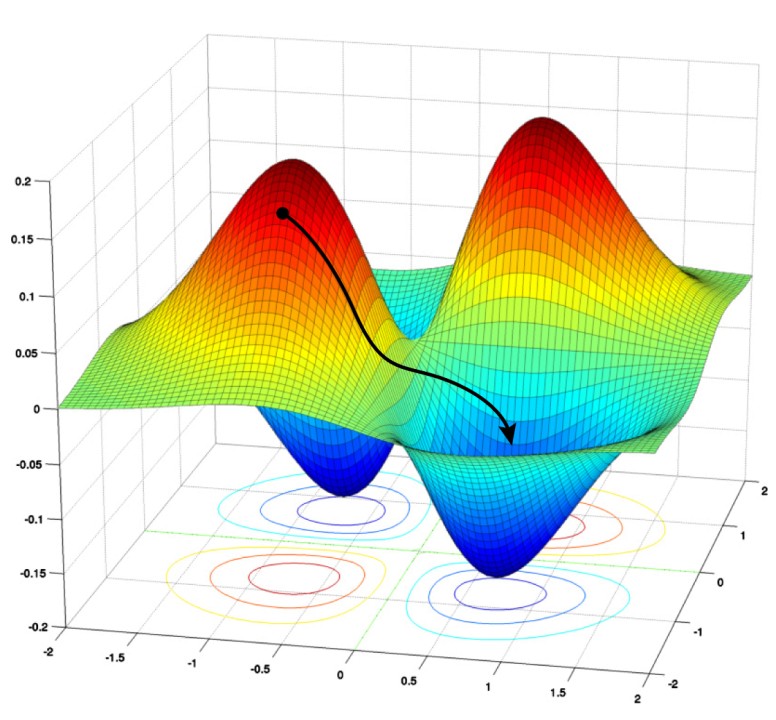

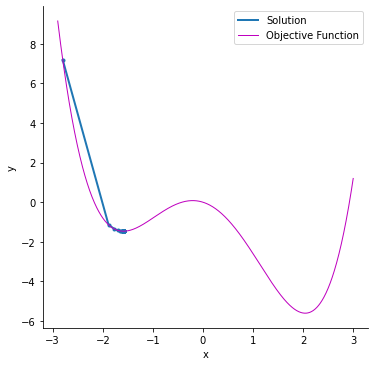

Local Minimum vs Global Minimum

Understanding the Concept

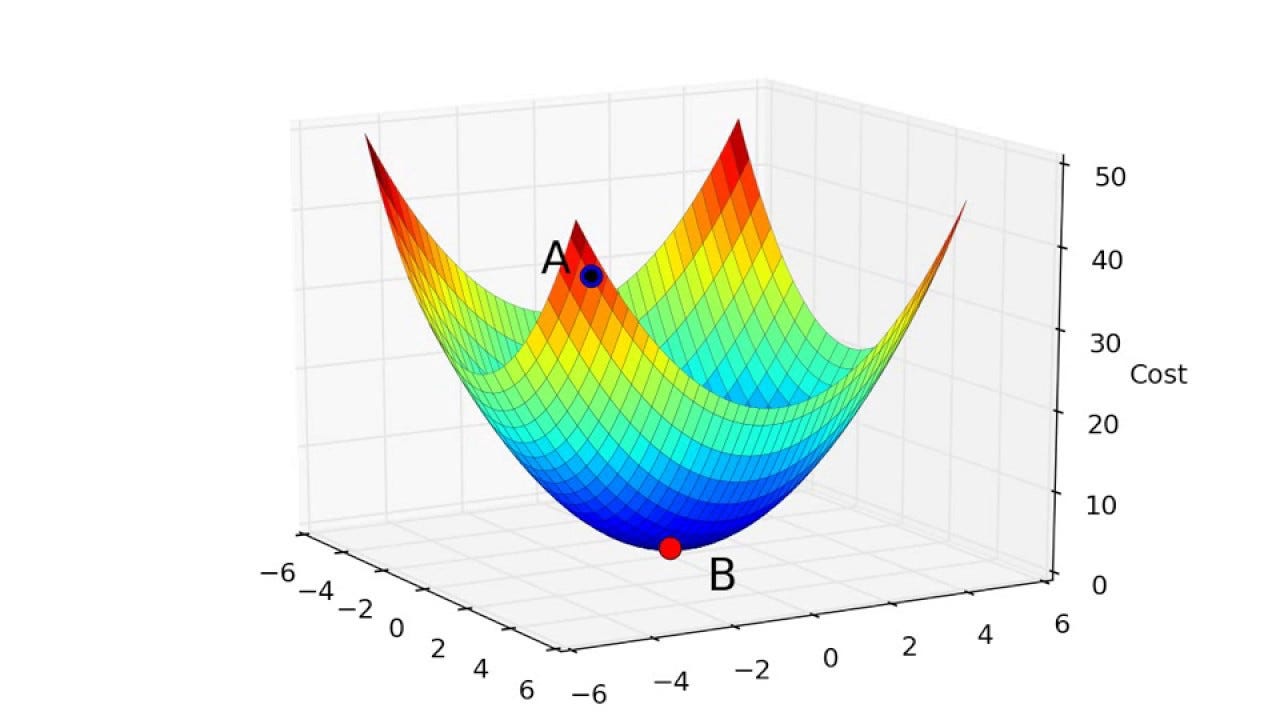

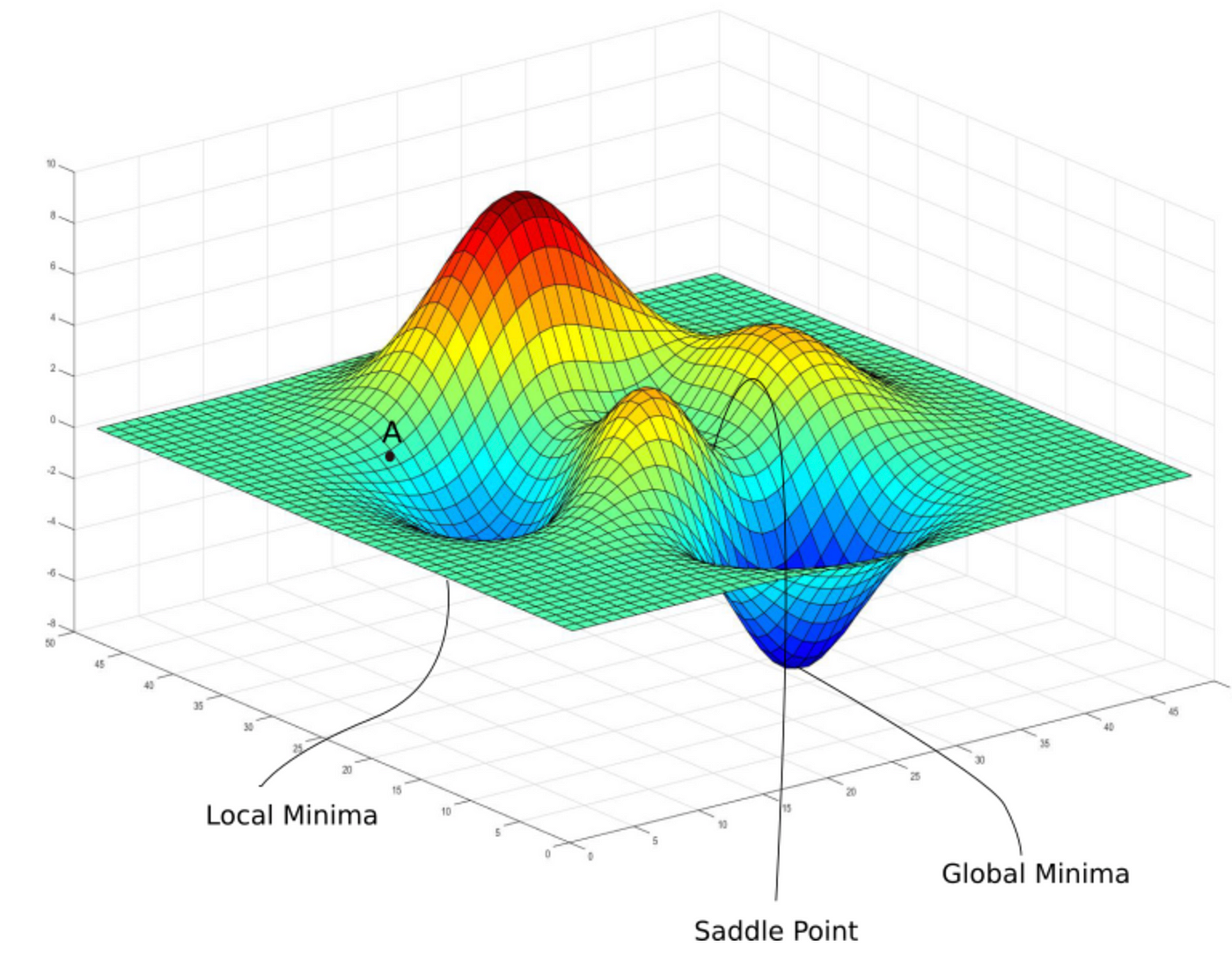

When optimizing a function, we aim to find the point where the function reaches its lowest value. This is crucial in machine learning because we want to minimize the cost function effectively. However, there are two types of minima that gradient descent might encounter:

- Global Minimum: The absolute lowest point of the function. Ideally, gradient descent should converge here.

- Local Minimum: A point where the function has a lower value than nearby points but is not the absolute lowest value.

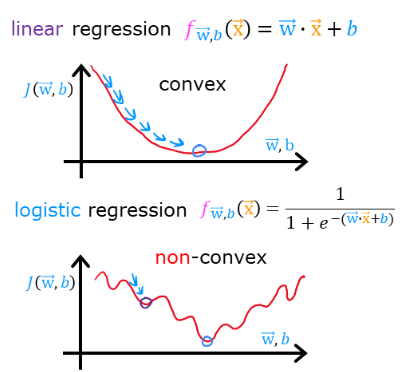

For convex functions (such as our quadratic cost function), gradient descent with a suitably small learning rate is guaranteed to reach the global minimum. However, for non-convex functions, the algorithm may get stuck in a local minimum.

Convex vs Non-Convex Cost Functions

- Convex Functions

- The cost function is convex for linear regression.

- This ensures that gradient descent always leads to the global minimum.

- Example: A simple quadratic function like $J(\theta) = \theta^2$.

- Non-Convex Functions

- More common in deep learning and complex machine learning models.

- There can be multiple local minima.

- Example: Functions with multiple peaks and valleys, such as .
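To see a local minimum trap in action, the sketch below runs gradient descent on $f(\theta) = \theta^4 - 3\theta^2 + \theta$, a hypothetical non-convex function chosen because it has two valleys: a global minimum near $\theta \approx -1.30$ and a local minimum near $\theta \approx 1.13$. Only the starting point differs between the two runs; the learning rate and step count are assumptions.

```python
def f(theta):
    """Hypothetical non-convex function with two valleys."""
    return theta**4 - 3 * theta**2 + theta


def df(theta):
    """Derivative f'(theta) = 4*theta^3 - 6*theta + 1."""
    return 4 * theta**3 - 6 * theta + 1


def descend(theta, alpha=0.01, steps=2000):
    """Plain gradient descent from the given starting point."""
    for _ in range(steps):
        theta -= alpha * df(theta)
    return theta


for start in (-2.0, 2.0):
    end = descend(start)
    print(f"start={start:+.1f} -> theta={end:+.4f}, f(theta)={f(end):+.4f}")
```

Starting at $-2$ reaches the global minimum ($f \approx -3.51$), while starting at $+2$ settles in the local minimum ($f \approx -1.07$): the gradient is zero in both places, so the algorithm cannot tell them apart.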

Multiple Features

Introduction

In real-world scenarios, a single feature is often not enough to make accurate predictions. For example, if we want to predict the price of a house, using only its size (square meters) might not be sufficient. Other factors such as the number of bedrooms, location, and age of the house also play an important role.

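When we have multiple features, our hypothesis function extends to:

$$h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \dots + \theta_n x_n$$

where:

- $x_1, x_2, \dots, x_n$ are the input features,
- $\theta_0, \theta_1, \dots, \theta_n$ are the parameters (weights) we need to learn.

For instance, in a house price prediction model, the hypothesis function could be:

$$h_\theta(x) = \theta_0 + \theta_1 \cdot \text{size} + \theta_2 \cdot \text{bedrooms} + \theta_3 \cdot \text{age}$$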

This allows our model to consider multiple factors, improving its accuracy compared to using a single feature.

Vectorization

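To optimize computations, we represent our hypothesis function using matrix notation:

$$h_\theta(X) = X\theta$$

where:

- $X$ is the $m \times (n+1)$ matrix containing the training examples, with a leading column of ones for the intercept term $\theta_0$
- $\theta$ is the $(n+1)$-dimensional parameter vector

This allows efficient computation using matrix operations instead of looping over individual training examples.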

Why Vectorization?

Vectorization is the process of converting operations that use loops into matrix operations. This improves computational efficiency, especially when working with large datasets. Instead of computing predictions one by one using a loop, we leverage linear algebra to perform all calculations simultaneously.

Without vectorization (using a loop):

```python
m = len(X)  # Number of training examples (X includes a leading column of ones)
h = []
for i in range(m):
    # theta_0 * 1 + theta_1 * X[i, 1] + ... + theta_n * X[i, n]
    prediction = sum(theta[j] * X[i, j] for j in range(len(theta)))
    h.append(prediction)
```

With vectorization:

```python
h = np.dot(X, theta)  # Compute all predictions at once
```

This method is significantly faster because it takes advantage of optimized numerical libraries like NumPy that execute matrix operations efficiently.
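The two versions should produce identical predictions. The sketch below checks this on a random design matrix (leading column of ones for $\theta_0$) and times both; the data, sizes, and parameter values are arbitrary, and the measured speed-up will vary by machine.

```python
import time
import numpy as np

rng = np.random.default_rng(0)
m, n = 20_000, 3
# Random design matrix with a leading column of ones for the intercept term
X = np.hstack([np.ones((m, 1)), rng.random((m, n))])
theta = np.array([1.0, 2.0, -3.0, 0.5])   # arbitrary parameters


def predict_loop(X, theta):
    """One prediction at a time, as in the loop version above."""
    h = []
    for i in range(len(X)):
        h.append(sum(theta[j] * X[i, j] for j in range(len(theta))))
    return np.array(h)


start = time.perf_counter()
h_loop = predict_loop(X, theta)
t_loop = time.perf_counter() - start

start = time.perf_counter()
h_vec = np.dot(X, theta)   # vectorized: all predictions at once
t_vec = time.perf_counter() - start

print("same predictions:", np.allclose(h_loop, h_vec))
print(f"loop: {t_loop:.4f} s, vectorized: {t_vec:.6f} s")
```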

Vectorized Cost Function

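Similarly, our cost function for multiple features is:

$$J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

Using matrices, this can be written as:

$$J(\theta) = \frac{1}{2m} (X\theta - y)^T (X\theta - y)$$

And implemented in Python as:

```python
import numpy as np

def compute_cost(X, y, theta):
    m = len(y)  # Number of training examples
    error = np.dot(X, theta) - y  # Compute (Xθ - y)
    cost = (1 / (2 * m)) * np.dot(error.T, error)  # Compute cost function
    return cost
```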

By using vectorized operations, we achieve a significant performance boost compared to using explicit loops.
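As a usage example, the sketch below applies `compute_cost` to the four-point dataset from the cost-function section, written in matrix form with a column of ones for $\theta_0$. The function body repeats the one above so the snippet runs on its own.

```python
import numpy as np


def compute_cost(X, y, theta):
    m = len(y)                                     # number of training examples
    error = np.dot(X, theta) - y                   # (X·theta - y)
    return (1 / (2 * m)) * np.dot(error.T, error)  # 1/(2m) * (X·theta - y)^T (X·theta - y)


# Four-point dataset y = 2x, with a leading column of ones for theta_0
X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]])
y = np.array([2.0, 4.0, 6.0, 8.0])

print(compute_cost(X, y, np.array([0.0, 2.0])))  # 0.0  (perfect fit)
print(compute_cost(X, y, np.array([0.0, 1.0])))  # 3.75
```

With 1-D arrays for `y` and `theta`, `error.T` is the same as `error`, and `np.dot(error.T, error)` is simply the sum of squared errors.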

Feature Scaling

When working with multiple features, the range of values across different features can vary significantly. This can negatively affect the performance of gradient descent, causing slow convergence or inefficient updates. Feature scaling is a technique used to normalize or standardize features to bring them to a similar scale, improving the efficiency of gradient descent.

Why Feature Scaling is Important

- Features with large values can dominate the cost function, leading to inefficient updates.

- Gradient descent converges faster when features are on a similar scale.

- Helps prevent numerical instability when computing gradients.

Methods of Feature Scaling

1. Min-Max Scaling (Normalization)

Brings all feature values into a fixed range, typically between 0 and 1:

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)}$$

- Best for cases where the distribution of data is not Gaussian.

- Sensitive to outliers, as extreme values affect the range.

2. Standardization (Z-Score Normalization)

Centers data around zero with unit variance:

$$x' = \frac{x - \mu}{\sigma}$$

where $\mu$ is the mean of the feature values and $\sigma$ is their standard deviation.

- Works well when features follow a normal distribution.
- Less sensitive to outliers compared to min-max scaling.

Example

Consider a dataset with two features: House Size (m²) and Number of Bedrooms.

| House Size (m²) | Bedrooms |
|---|---|
| 2100 | 3 |
| 1600 | 2 |
| 2500 | 4 |
| 1800 | 3 |

Using min-max scaling (house size ranges from 1600 to 2500, bedrooms from 2 to 4):

| House Size (scaled) | Bedrooms (scaled) |
|---|---|
| 0.556 | 0.5 |
| 0.0 | 0.0 |
| 1.0 | 1.0 |
| 0.222 | 0.5 |
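Both scaling methods take only a few lines of NumPy. The sketch below computes min-max scaling and z-score standardization from the raw values in the first table; it uses the population standard deviation (NumPy's default, `ddof=0`), which is an assumption since the text does not specify one.

```python
import numpy as np

# Columns: house size, number of bedrooms (raw values from the table above)
X = np.array([[2100, 3],
              [1600, 2],
              [2500, 4],
              [1800, 3]], dtype=float)

# Min-max scaling: (x - min) / (max - min), column by column
X_minmax = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

# Standardization: (x - mean) / std, column by column
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

print(np.round(X_minmax, 3))
print(np.round(X_std, 3))
```

In practice, the min/max (or mean/std) should be computed on the training data only and reused unchanged when scaling new data.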

Feature Scaling in Gradient Descent

After scaling, gradient descent updates will be more balanced across different features, leading to faster and more stable convergence. Feature scaling is a critical preprocessing step in machine learning models involving optimization algorithms like gradient descent.

Feature Engineering and Polynomial Regression

- Feature Engineering

- Polynomial Regression

Feature Engineering

Introduction to Feature Engineering

Feature engineering is the process of transforming raw data into meaningful features that improve the predictive power of machine learning models. It involves creating new features, modifying existing ones, and selecting the most relevant features to enhance model performance.

Why is Feature Engineering Important?

- Improves model accuracy: Well-engineered features help models learn better representations of the data.

- Reduces model complexity: Properly engineered features can make complex models simpler and more interpretable.

- Enhances generalization: Good feature selection helps reduce overfitting and improves performance on unseen data.

Real-World Example

Consider a house price prediction problem. Instead of using just raw data such as square footage and the number of bedrooms, we can create new features like:

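- Price per square foot = Price / Size (useful for analysis, but it cannot be used as an input when price itself is the target, because it is computed from the label)
- Age of the house = Current Year - Year Built
- Proximity to city center = Distance in km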

These engineered features often provide better insights and improve model performance compared to using raw data alone.

Feature Transformation

Feature transformation involves applying mathematical operations to existing features to make data more suitable for machine learning models.

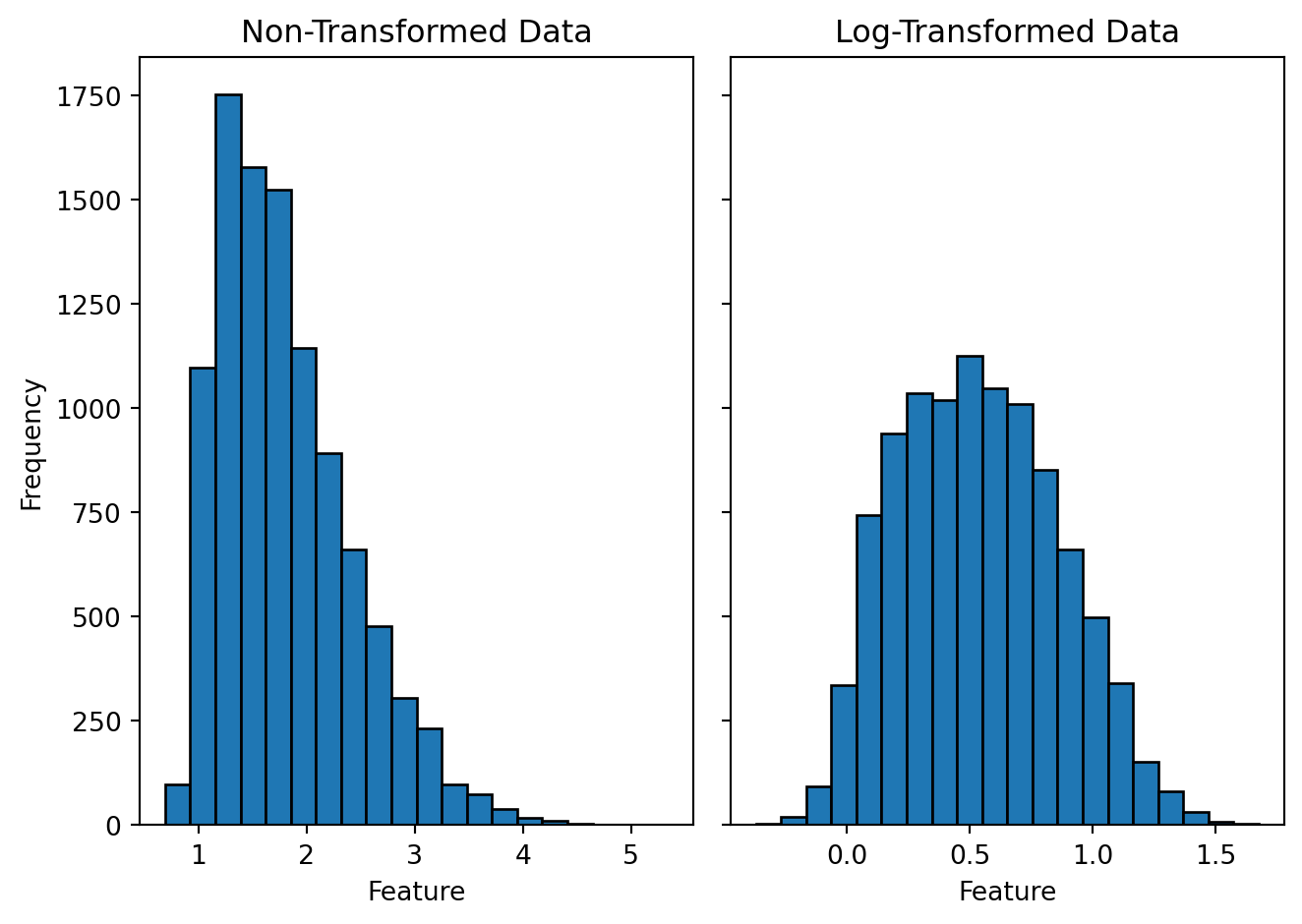

1. Log Transformation

Used to reduce skewness and stabilize variance in highly skewed data.

Example: Income Data

Many income datasets have a right-skewed distribution where most values are low but a few are extremely high. Applying a log transformation makes the distribution closer to normal:

$$x' = \log(x)$$

(or $\log(1 + x)$ when the data can contain zeros).
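The sketch below illustrates the effect on simulated, log-normally distributed "incomes" (hypothetical data, not a real income dataset). It measures skewness with a small helper, `skewness`, written here for illustration; values near 0 indicate a roughly symmetric distribution. `np.log1p` (log of 1 + x) is a common variant when the data can contain zeros.

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical right-skewed "income" data: most values modest, a few very large
incomes = rng.lognormal(mean=10.0, sigma=1.0, size=10_000)


def skewness(values):
    """Sample skewness: mean of cubed z-scores (0 for a symmetric distribution)."""
    z = (values - values.mean()) / values.std()
    return np.mean(z ** 3)


log_incomes = np.log(incomes)   # the log transformation

print(f"skewness before: {skewness(incomes):.2f}")
print(f"skewness after:  {skewness(log_incomes):.2f}")
```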

2. Polynomial Features

Adding polynomial terms (squared, cubic) to capture non-linear relationships.

Example: House Price Prediction

Instead of using Size as a single feature, we can include Size^2 and Size^3 to better fit non-linear patterns.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

X = np.array([[1000], [1500], [2000], [2500]])  # House sizes
poly = PolynomialFeatures(degree=2)  # degree=3 would also add Size^3
X_poly = poly.fit_transform(X)  # Columns: [1, Size, Size^2]
print(X_poly)
```

3. Interaction Features

Creating new features based on interactions between existing ones.

Example: Combining Features

Instead of using Height and Weight separately for a health model, create a new BMI feature:

```python
import numpy as np

def calculate_bmi(height, weight):
    return weight / (height ** 2)  # BMI = weight (kg) / height (m)^2

height = np.array([1.65, 1.75, 1.80])  # Heights in meters
weight = np.array([65, 80, 90])  # Weights in kg
bmi = calculate_bmi(height, weight)
print(bmi)
```

This allows the model to understand health risks better than using height and weight separately.

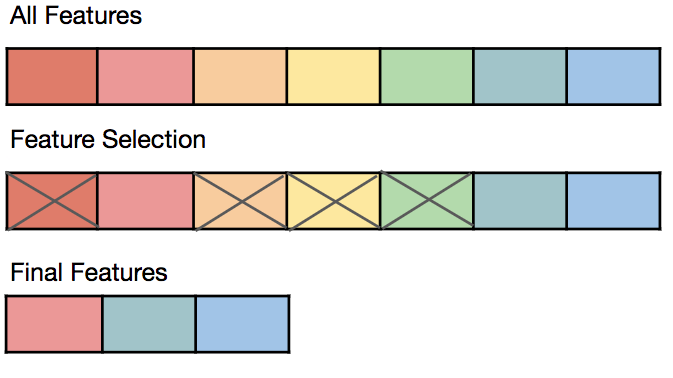

Feature Selection

Feature selection involves identifying the most relevant features for a model while removing unnecessary or redundant ones. This improves model performance and reduces computational complexity.

1. Unnecessary Features

Not all features contribute equally to model performance. Some may be irrelevant or redundant, leading to overfitting and increased computational cost. Examples of unnecessary features include:

- ID columns: Unique identifiers that do not provide predictive value.

- Highly correlated features: Features that contain similar information.

- Constant or near-constant features: Features with little to no variation.

2. Correlation Analysis

Correlation analysis helps detect multicollinearity, where two or more features are highly correlated. If two features provide similar information, one of them can be removed.

Example: Finding Highly Correlated Features

```python
import pandas as pd
import numpy as np

# Sample dataset
data = {
    'Feature1': [1, 2, 3, 4, 5],
    'Feature2': [2, 4, 6, 8, 10],
    'Feature3': [5, 3, 6, 9, 2]
}
df = pd.DataFrame(data)

# Compute correlation matrix
correlation_matrix = df.corr()
print(correlation_matrix)
```

Features with a correlation coefficient close to ±1 can be considered redundant and removed.
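One simple way to act on the correlation matrix is to drop one feature from every pair whose absolute correlation exceeds a threshold. The sketch below does this with plain NumPy (`np.corrcoef`) on the same three columns; the 0.95 threshold and the helper name `drop_correlated` are assumptions for illustration.

```python
import numpy as np

names = ["Feature1", "Feature2", "Feature3"]
data = np.array([[1, 2, 5],
                 [2, 4, 3],
                 [3, 6, 6],
                 [4, 8, 9],
                 [5, 10, 2]], dtype=float)


def drop_correlated(data, names, threshold=0.95):
    """Keep a feature only if it is not highly correlated with an already-kept one."""
    corr = np.corrcoef(data, rowvar=False)   # feature-by-feature correlation matrix
    kept = []
    for j in range(len(names)):
        if all(abs(corr[j, k]) < threshold for k in kept):
            kept.append(j)
    return [names[j] for j in kept]


print(drop_correlated(data, names))   # ['Feature1', 'Feature3']
```

`Feature2` is exactly twice `Feature1` (correlation 1), so it is dropped; `Feature3` happens to be uncorrelated with `Feature1` and is kept.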

3. Statistical Feature Selection Methods

Feature selection techniques can be used to rank the importance of different features based on statistical tests or model-based importance measures.

At this stage, a superficial understanding of these methods is enough.

Common Methods:

- Chi-Square Test: Measures dependency between categorical features and the target variable.

- Mutual Information: Evaluates how much information a feature contributes.

- Recursive Feature Elimination (RFE): Iteratively removes less important features based on model performance.

- Feature Importance from Tree-Based Models: Decision trees and random forests provide feature importance scores.

Feature selection ensures that only the most valuable features are used in the final model, improving efficiency and predictive power.

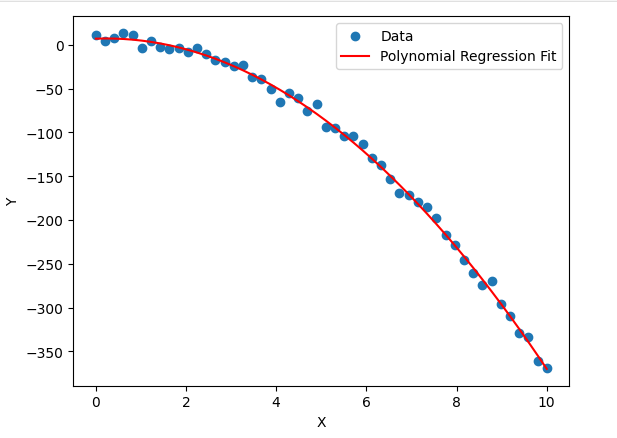

Polynomial Regression

Introduction to Polynomial Regression

Polynomial Regression is an extension of Linear Regression that models non-linear relationships between input features and the target variable. While Linear Regression assumes a straight-line relationship, Polynomial Regression captures curves and more complex patterns.

Why Use Polynomial Regression?

- Handles Non-Linearity: Unlike Linear Regression, which assumes a straight-line relationship, Polynomial Regression models curved trends.

- Better Fit for Real-World Data: Many real-world phenomena, such as population growth, economic trends, and physics-based models, exhibit non-linear behavior.

- Feature Engineering Alternative: Instead of manually creating interaction terms, Polynomial Regression provides an automatic way to capture complex dependencies.

Example: Predicting House Prices

Consider a dataset where house prices do not increase linearly with size. Instead, they follow a non-linear trend due to factors like demand, location, and infrastructure. A Polynomial Regression model can better capture this pattern.

For instance:

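- Linear Model: $\text{Price} = \theta_0 + \theta_1 \cdot \text{Size}$
- Polynomial Model: $\text{Price} = \theta_0 + \theta_1 \cdot \text{Size} + \theta_2 \cdot \text{Size}^2$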

This quadratic term helps model the curved price trend more accurately.

Mathematical Representation and Implementation

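Polynomial regression extends linear regression by adding polynomial terms to the feature set. The hypothesis function is represented as:

$$h_\theta(x) = \theta_0 + \theta_1 x + \theta_2 x^2 + \dots + \theta_d x^d$$

where:

- $x$ is the input feature,
- $\theta_0, \theta_1, \dots, \theta_d$ are the parameters (weights),
- $x^2, x^3, \dots, x^d$ represent higher-degree polynomial terms.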

This allows the model to capture non-linear relationships in the data.
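Because the model is still linear in the parameters $\theta$, polynomial regression can be fitted with ordinary least squares on the expanded features $[1, x, x^2]$. The sketch below shows this with `np.linalg.lstsq` on noise-free, made-up data generated from $y = 1 + 2x + 3x^2$, so the recovered parameters can be checked against known values.

```python
import numpy as np

# Made-up, noise-free data from y = 1 + 2x + 3x^2
x = np.linspace(-3, 3, 20)
y = 1 + 2 * x + 3 * x**2

# Design matrix with columns [1, x, x^2] (degree-2 polynomial features)
X_poly = np.column_stack([np.ones_like(x), x, x**2])

# Ordinary least squares on the expanded features
theta, *_ = np.linalg.lstsq(X_poly, y, rcond=None)
print(np.round(theta, 4))   # approximately [1. 2. 3.]
```

With real, noisy data, the higher-degree terms make feature scaling more important, since $x^2$ and $x^3$ grow much faster than $x$.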

Classification with Logistic Regression

- 1. Introduction to Classification

- 2. Logistic Regression

- 3. Cost Function for Logistic Regression

- 4. Gradient Descent for Logistic Regression

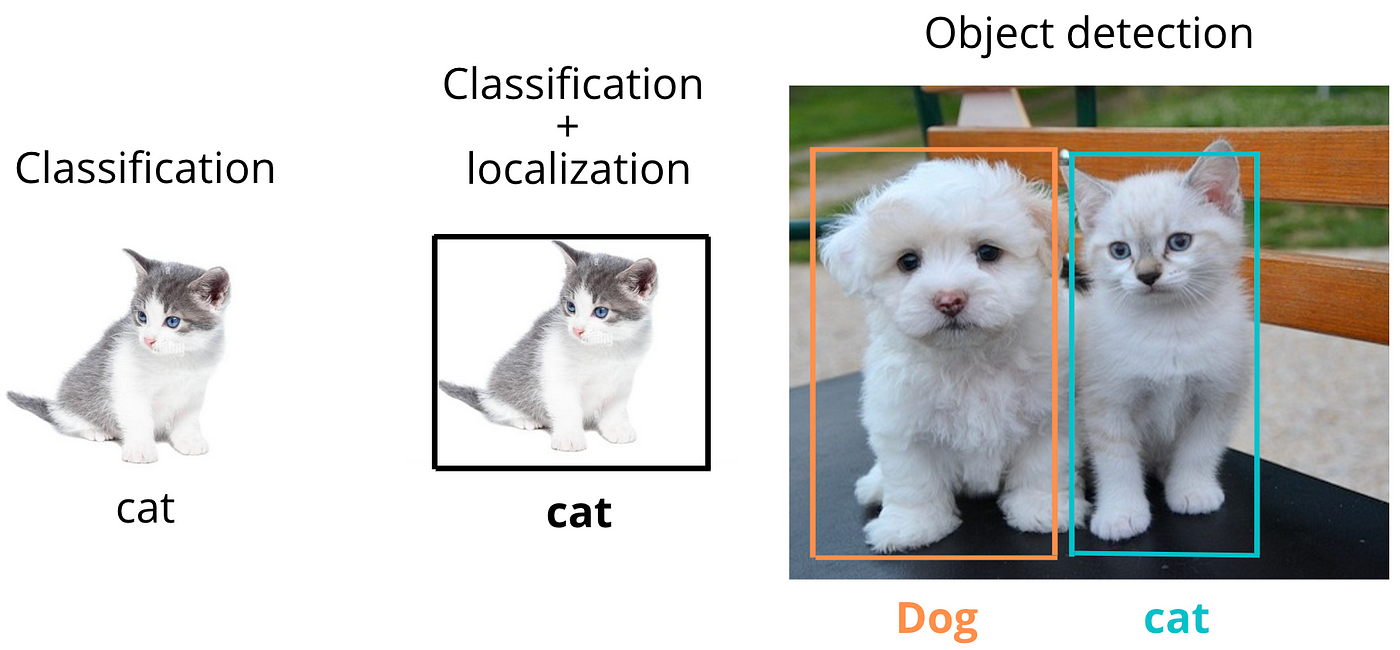

1. Introduction to Classification

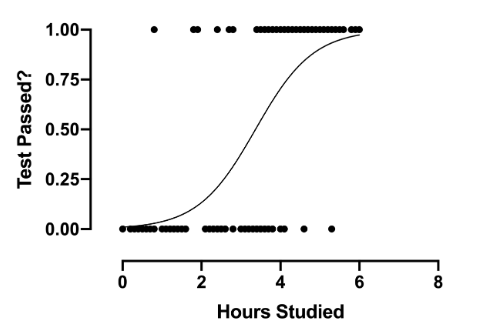

Classification is a supervised learning problem where the goal is to predict discrete categories instead of continuous values. Unlike regression, which predicts numerical values, classification assigns data points to labels or classes.

Classification vs. Regression

| Feature | Regression | Classification |
|---|---|---|
| Output Type | Continuous | Discrete |
| Example | Predicting house prices | Email spam detection |
| Algorithm Example | Linear Regression | Logistic Regression |

Examples of Classification Problems

- Email Spam Detection: Classify emails as "spam" or "not spam".

- Medical Diagnosis: Identify whether a patient has a disease (yes/no).

- Credit Card Fraud Detection: Determine if a transaction is fraudulent or legitimate.

- Image Recognition: Classify images as "cat" or "dog".

Classification models can be:

- Binary Classification: Only two possible outcomes (e.g., spam or not spam).

- Multi-class Classification: More than two possible outcomes (e.g., classifying handwritten digits 0-9).

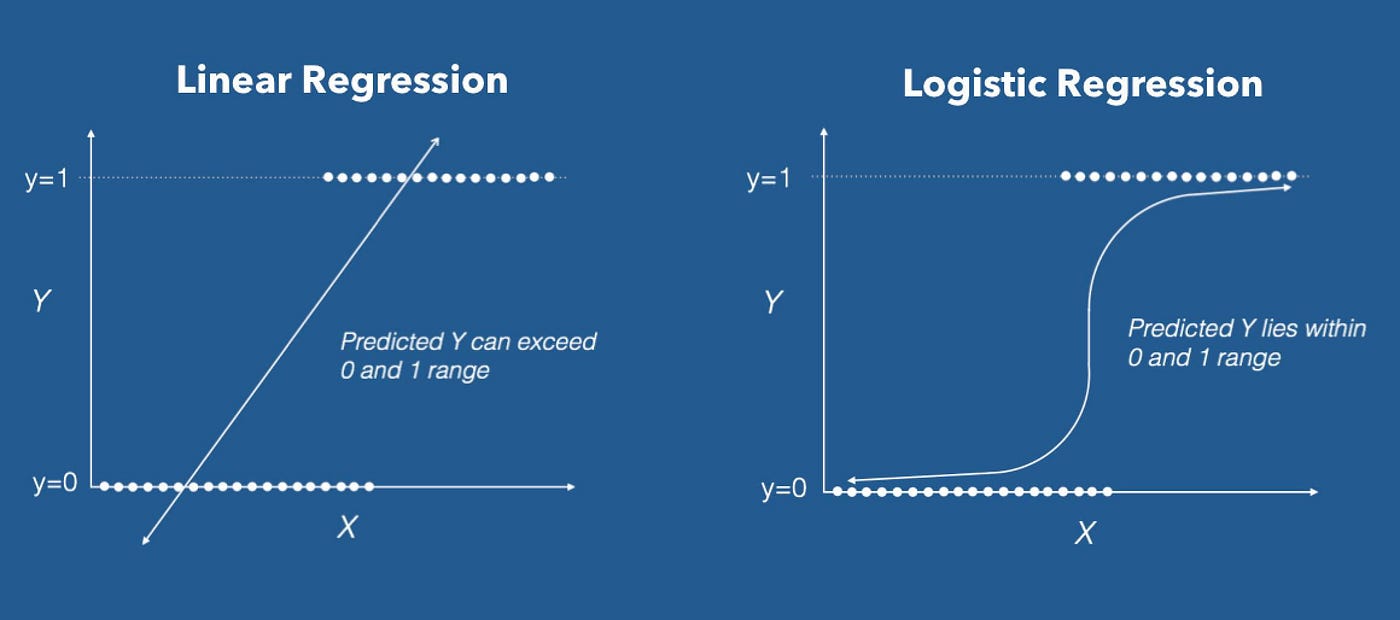

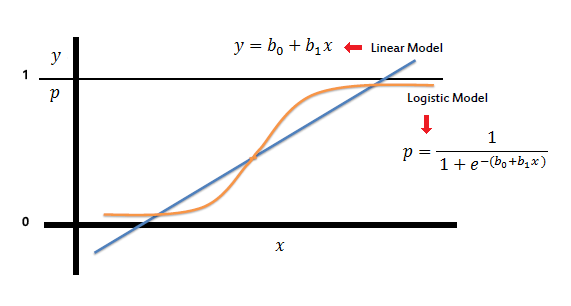

2. Logistic Regression

Introduction to Logistic Regression

Logistic regression is a statistical model used for binary classification problems. Unlike linear regression, which predicts continuous values, logistic regression predicts probabilities that map to discrete class labels.

Linear regression might seem like a reasonable approach for classification, but it has major limitations:

- Unbounded Output: Linear regression produces outputs that can take any real value, meaning predictions could be negative or greater than 1, which makes no sense for probability-based classification.

- Poor Decision Boundaries: If we use a linear function for classification, extreme values in the dataset can distort the decision boundary, leading to incorrect classifications.

To solve these issues, we use logistic regression, which applies the sigmoid function to transform outputs into a probability range between 0 and 1.

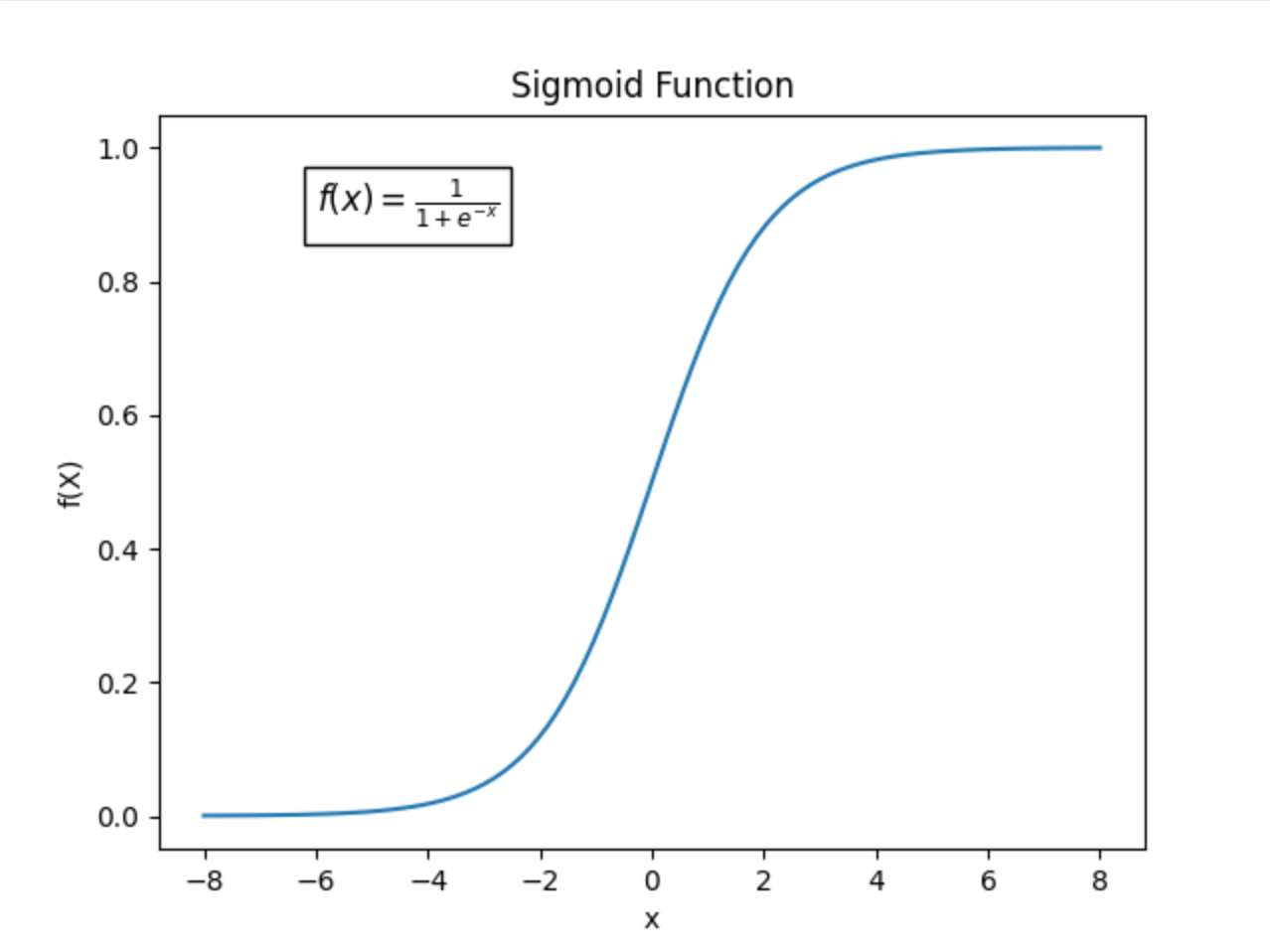

Why Do We Need the Sigmoid Function?

The sigmoid function is a key component of logistic regression. It ensures that outputs always remain between 0 and 1, making them interpretable as probabilities.

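Consider a fraud detection system that predicts whether a transaction is fraudulent (1) or legitimate (0) based on customer behavior. Suppose we use a linear model:

$$h_\theta(x) = \theta^T x = \theta_0 + \theta_1 x_1 + \dots + \theta_n x_n$$

For some transactions, the output might be $7.5$ or $-3.2$, which do not make sense as probability values. Instead, we pass the linear output $z = \theta^T x$ through the sigmoid function, which squashes any real number into the range $(0, 1)$:

$$g(z) = \frac{1}{1 + e^{-z}}, \qquad h_\theta(x) = g(\theta^T x)$$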

This function maps:

- Large positive values to probabilities close to 1 (fraudulent transaction).

- Large negative values to probabilities close to 0 (legitimate transaction).

- Values near 0 to probabilities near 0.5 (uncertain classification).
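The squashing is easy to see numerically. Below is a minimal NumPy sketch of $g(z) = 1/(1 + e^{-z})$, applied to the out-of-range linear outputs from the fraud example ($-3.2$ and $7.5$) plus $0$. The helper name `sigmoid` is our own choice and is not a library function.

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)); works on scalars or arrays."""
    return 1.0 / (1.0 + np.exp(-z))

# Raw linear-model outputs, including the out-of-range values from the fraud example
z = np.array([-3.2, 0.0, 7.5])
probs = sigmoid(z)
print(np.round(probs, 4))
```

The outputs are roughly 0.039, 0.5, and 0.9994. The large negative input lands near 0, zero lands exactly on 0.5, and the large positive input lands near 1.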

Sigmoid Function and Probability Interpretation

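The output of the sigmoid function is interpreted as the estimated probability that the input belongs to Class 1: $h_\theta(x) = P(y = 1 \mid x; \theta)$.

- $h_\theta(x)$ close to 1 → The model predicts Class 1 (e.g., spam email, fraudulent transaction).

- $h_\theta(x)$ close to 0 → The model predicts Class 0 (e.g., not spam email, legitimate transaction).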

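For a final classification decision, we apply a threshold (typically 0.5):

$$\hat{y} = \begin{cases} 1 & \text{if } h_\theta(x) \ge 0.5 \\ 0 & \text{if } h_\theta(x) < 0.5 \end{cases}$$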

This means:

- If the probability is ≥ 0.5, we classify the input as 1 (positive class).

- If the probability is < 0.5, we classify it as 0 (negative class).
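The thresholding rule can be written as a small prediction helper. This sketch assumes the design matrix already includes a leading column of ones for $\theta_0$. The function name `predict` and the parameter values are hypothetical; they were chosen so that the three examples land below, exactly on, and above the 0.5 threshold.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(theta, X, threshold=0.5):
    """Return hard 0/1 labels by thresholding h_theta(x) = g(X @ theta).

    X is assumed to already contain a leading column of ones for theta_0.
    """
    probs = sigmoid(X @ theta)
    return (probs >= threshold).astype(int)

# Hypothetical parameters and three examples (first column is the bias term x_0 = 1)
theta = np.array([-1.0, 2.0])
X = np.array([[1.0, 0.0],    # z = -1.0 -> p ~ 0.27 -> class 0
              [1.0, 0.5],    # z =  0.0 -> p = 0.5  -> class 1 (ties go to class 1)
              [1.0, 2.0]])   # z =  3.0 -> p ~ 0.95 -> class 1
print(predict(theta, X))
```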

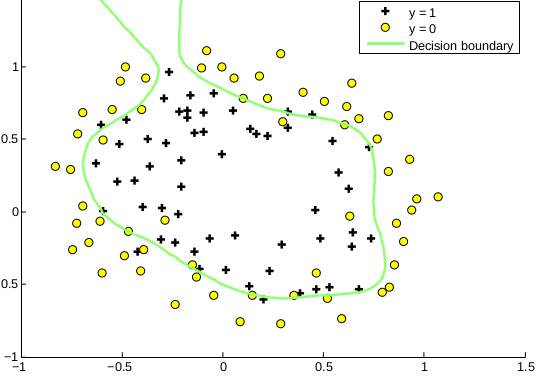

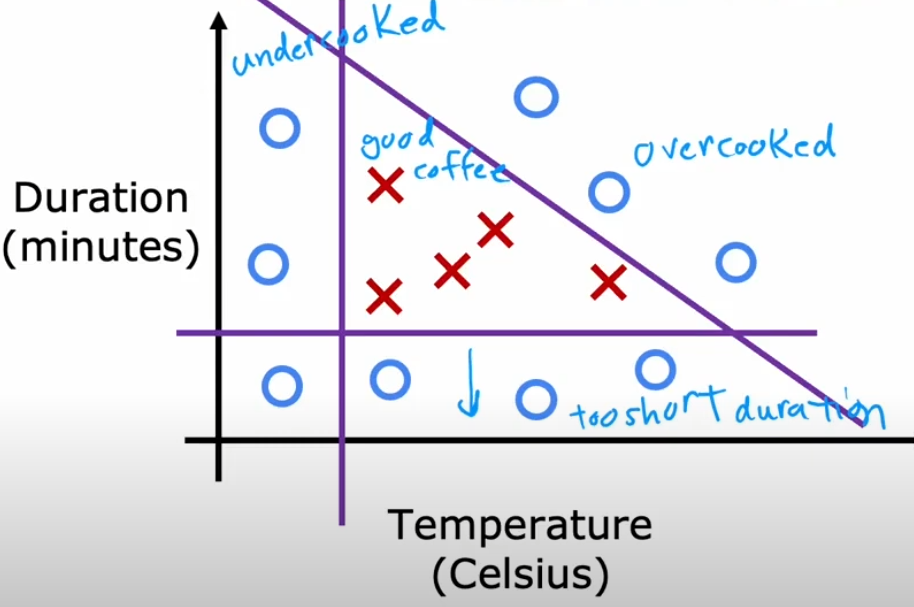

Decision Boundary

The decision boundary is the surface that separates different classes in logistic regression. It is the set of points at which the model predicts a probability of exactly 0.5, meaning the model is equally uncertain about the two classes.

Since logistic regression produces probabilities using the sigmoid function, we define the decision boundary mathematically as:

$$h_\theta(x) = g(\theta^T x) = 0.5$$

Because $g(z) = 0.5$ exactly when $z = 0$, taking the inverse of the sigmoid function gives:

$$\theta^T x = \theta_0 + \theta_1 x_1 + \dots + \theta_n x_n = 0$$

This equation defines the decision boundary as a linear function in the feature space.

Understanding the Decision Boundary with Examples

1. Single Feature Case (1D)

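If we have only one feature $x_1$, the model equation is:

$$h_\theta(x) = g(\theta_0 + \theta_1 x_1)$$

Setting $\theta_0 + \theta_1 x_1 = 0$ and solving for $x_1$:

$$x_1 = -\frac{\theta_0}{\theta_1}$$

This means that when $x_1$ crosses this threshold, the model switches from predicting Class 0 to Class 1 (assuming $\theta_1 > 0$).

Example: Imagine predicting whether a student passes or fails based on study hours ($x_1$):

- If $x_1 < -\theta_0/\theta_1$ hours → Fail (Class 0).

- If $x_1 \ge -\theta_0/\theta_1$ hours → Pass (Class 1).

The decision boundary in this case is simply the single point $x_1 = -\theta_0/\theta_1$.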
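Here is a quick numeric check of the 1D boundary. It uses hypothetical parameters $\theta_0 = -6$ and $\theta_1 = 1.5$, which are illustrative values and are not fitted to any data. With these values the boundary is at $x_1 = -\theta_0/\theta_1 = 4$ study hours, and the predicted probability at that point is exactly 0.5.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical fitted parameters for the pass/fail example (not from real data)
theta0, theta1 = -6.0, 1.5

# Decision boundary: theta0 + theta1 * x1 = 0  =>  x1 = -theta0 / theta1
boundary = -theta0 / theta1
print(f"Boundary at {boundary} study hours")

for hours in (2.0, boundary, 6.0):
    p = sigmoid(theta0 + theta1 * hours)
    label = "Pass" if p >= 0.5 else "Fail"
    print(f"{hours:.1f} h -> P(pass) = {p:.3f} -> {label}")
```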

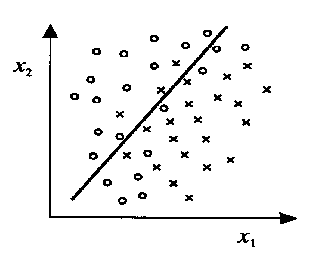

2. Two Features Case (2D)

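For two features $x_1$ and $x_2$, the decision boundary equation becomes:

$$\theta_0 + \theta_1 x_1 + \theta_2 x_2 = 0$$

Rearranging (for $\theta_2 \ne 0$):

$$x_2 = -\frac{\theta_0 + \theta_1 x_1}{\theta_2}$$

This represents a straight line separating the two classes in a 2D plane.

Example: Suppose we classify students as passing (1) or failing (0) based on study hours ($x_1$) and sleep hours ($x_2$):

- The decision boundary is the line $\theta_0 + \theta_1 x_1 + \theta_2 x_2 = 0$ in the (study hours, sleep hours) plane.

- If a student's point $(x_1, x_2)$ is above the line, classify as pass (assuming $\theta_2 > 0$).

- If it is below the line, classify as fail.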
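The same idea works with two features. This sketch uses hypothetical parameters $\theta = (-10, 1, 1)$, which place the boundary on the line $x_1 + x_2 = 10$. The helpers `boundary_x2` and `classify` are illustrative names. Classifying by the sign of $\theta^T x$ is equivalent to thresholding $g(\theta^T x)$ at 0.5, because $g(z) \ge 0.5$ exactly when $z \ge 0$.

```python
import numpy as np

# Hypothetical parameters for h = g(theta0 + theta1*x1 + theta2*x2),
# with x1 = study hours and x2 = sleep hours (illustrative values only)
theta = np.array([-10.0, 1.0, 1.0])

def boundary_x2(x1, theta):
    """x2 on the decision line theta0 + theta1*x1 + theta2*x2 = 0 (needs theta2 != 0)."""
    return -(theta[0] + theta[1] * x1) / theta[2]

def classify(points, theta):
    """1 (pass) where theta0 + theta1*x1 + theta2*x2 >= 0, else 0 (fail)."""
    z = theta[0] + points @ theta[1:]
    return (z >= 0).astype(int)

students = np.array([[3.0, 5.0],   # 8 total hours: below the line x1 + x2 = 10
                     [6.0, 7.0]])  # 13 total hours: above the line
print(boundary_x2(4.0, theta))     # x2 on the line when x1 = 4
print(classify(students, theta))
```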

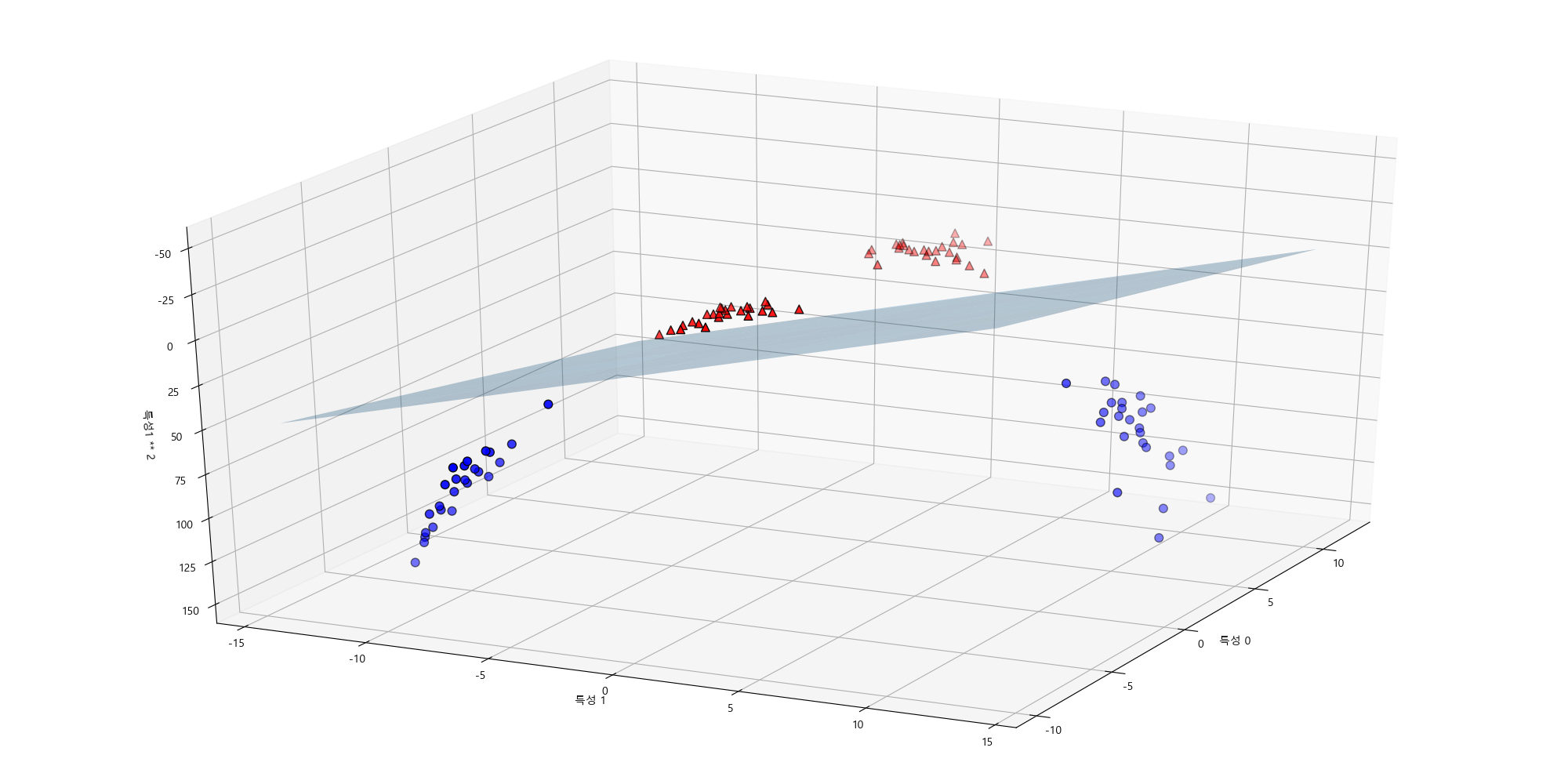

3. Three Features Case (3D)

When we move to three features $x_1$, $x_2$, and $x_3$, the decision boundary becomes a plane in three-dimensional space:

$$\theta_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_3 = 0$$

Rearranging for $x_3$ (for $\theta_3 \ne 0$):

$$x_3 = -\frac{\theta_0 + \theta_1 x_1 + \theta_2 x_2}{\theta_3}$$

This equation represents a flat plane dividing the 3D space into two regions, one for Class 1 and the other for Class 0.

Example:

Imagine predicting whether a company will be profitable (1) or not (0) based on:

- Marketing Budget ($x_1$)

- R&D Investment ($x_2$)

- Number of Employees ($x_3$)

The decision boundary would be a plane in 3D space, separating profitable and non-profitable companies.

In general, for n features, the decision boundary is a hyperplane in an n-dimensional space.

4. Non-Linear Decision Boundaries in Depth

So far, we have seen that logistic regression creates linear decision boundaries. However, many real-world problems have non-linear relationships. In such cases, a straight line (or plane) is not sufficient to separate classes.

To capture complex decision boundaries, we introduce polynomial features or feature transformations.

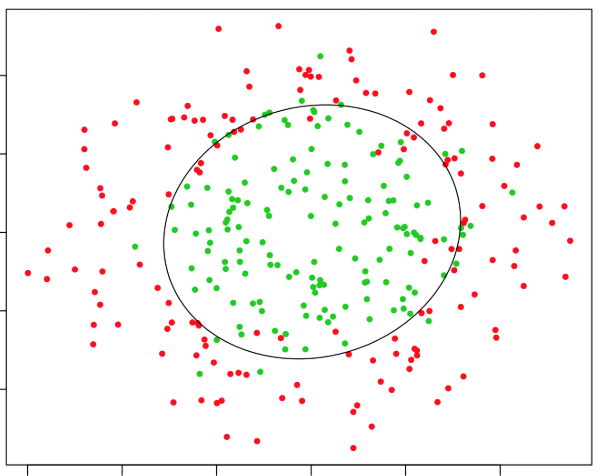

Example 1: Circular Decision Boundary

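If the data requires a circular boundary, we can use quadratic terms:

$$h_\theta(x) = g(\theta_0 + \theta_1 x_1^2 + \theta_2 x_2^2)$$

Setting the argument to zero gives $\theta_1 x_1^2 + \theta_2 x_2^2 = -\theta_0$. This represents a circle in 2D space when $\theta_1 = \theta_2$ and $-\theta_0/\theta_1 > 0$.

For example, let $x_1$ and $x_2$ be the coordinates of points. Choosing $\theta_0 = 4$ and $\theta_1 = \theta_2 = -1$ gives the decision boundary:

$$x_1^2 + x_2^2 = 4$$

This classifies points inside the radius-2 circle as Class 1, since $4 - x_1^2 - x_2^2 > 0$ there, and points outside as Class 0.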
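The radius-2 example can be checked directly. This sketch hard-codes $\theta_0 = 4$ and $\theta_1 = \theta_2 = -1$, then classifies a few points by the sign of $\theta_0 + \theta_1 x_1^2 + \theta_2 x_2^2$. The name `classify_circle` is a hypothetical helper.

```python
import numpy as np

# theta0 = 4, theta1 = theta2 = -1  =>  z = 4 - x1^2 - x2^2,
# so z >= 0 (class 1) exactly when x1^2 + x2^2 <= 4 (inside the radius-2 circle)
theta = np.array([4.0, -1.0, -1.0])

def classify_circle(points, theta):
    x1, x2 = points[:, 0], points[:, 1]
    z = theta[0] + theta[1] * x1**2 + theta[2] * x2**2
    return (z >= 0).astype(int)

points = np.array([[0.0, 0.0],   # center: inside
                   [1.0, 1.0],   # distance ~1.41: inside
                   [2.0, 2.0],   # distance ~2.83: outside
                   [0.0, 3.0]])  # distance 3: outside
print(classify_circle(points, theta))
```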

Example 2: Elliptical Decision Boundary

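A more general quadratic equation:

$$\theta_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_1^2 + \theta_4 x_1 x_2 + \theta_5 x_2^2 = 0$$

This allows for elliptical decision boundaries.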

Example 3: Complex Non-Linear Boundaries

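For even more complex boundaries, we can include higher-order polynomial features, such as:

$$\theta_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_1^2 + \theta_4 x_1 x_2 + \theta_5 x_2^2 + \theta_6 x_1^3 + \theta_7 x_1^2 x_2 + \theta_8 x_1 x_2^2 + \theta_9 x_2^3 + \dots = 0$$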

This enables twists and curves in the decision boundary, allowing logistic regression to model highly non-linear patterns.

Feature Engineering for Non-Linear Boundaries

- Instead of adding polynomial terms manually, we can transform features using basis functions (e.g., Gaussian radial basis functions).

- Feature maps can convert non-linearly separable data into a higher-dimensional space where a linear decision boundary works.
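In scikit-learn, one way to apply such a feature map is to chain `PolynomialFeatures` and `LogisticRegression` in a pipeline. The sketch below uses the `make_circles` toy dataset (our choice, not from these notes) to contrast a linear boundary with a quadratic one. Exact accuracies depend on the random noise. Note also that `LogisticRegression` applies L2 regularization by default.

```python
from sklearn.datasets import make_circles
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Two concentric rings: not separable by a straight line in (x1, x2)
X, y = make_circles(n_samples=300, noise=0.05, factor=0.5, random_state=0)

# Plain logistic regression: linear boundary in the original features
linear_clf = LogisticRegression().fit(X, y)

# Map (x1, x2) -> (1, x1, x2, x1^2, x1*x2, x2^2) and fit a linear boundary there,
# which corresponds to a quadratic (here roughly circular) boundary in the original space
poly_clf = make_pipeline(PolynomialFeatures(degree=2), LogisticRegression()).fit(X, y)

linear_acc = linear_clf.score(X, y)
poly_acc = poly_clf.score(X, y)
print(f"linear features:    {linear_acc:.2f}")
print(f"quadratic features: {poly_acc:.2f}")
```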

Limitations of Logistic Regression for Non-Linear Boundaries

- Feature engineering is required: Unlike neural networks or decision trees, logistic regression cannot learn complex boundaries automatically.

- Higher-degree polynomials can lead to overfitting: Too many non-linear terms make the model sensitive to noise.

Key Takeaways

- In 3D, the decision boundary is a plane, and in higher dimensions, it becomes a hyperplane.

- Non-linear decision boundaries can be created using quadratic, cubic, or transformed features.

- Feature engineering is crucial to make logistic regression work well for non-linearly separable problems.

- Too many high-order polynomial terms can cause overfitting, so regularization is needed.

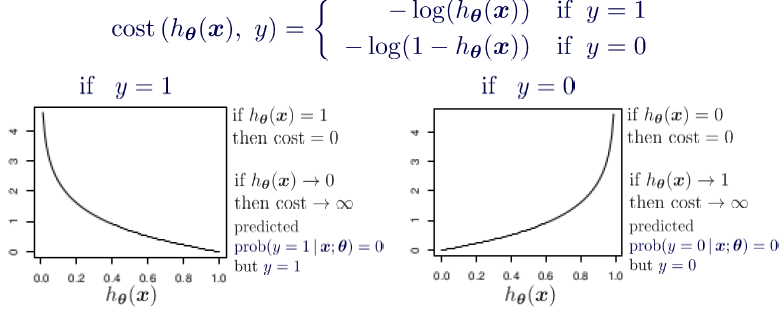

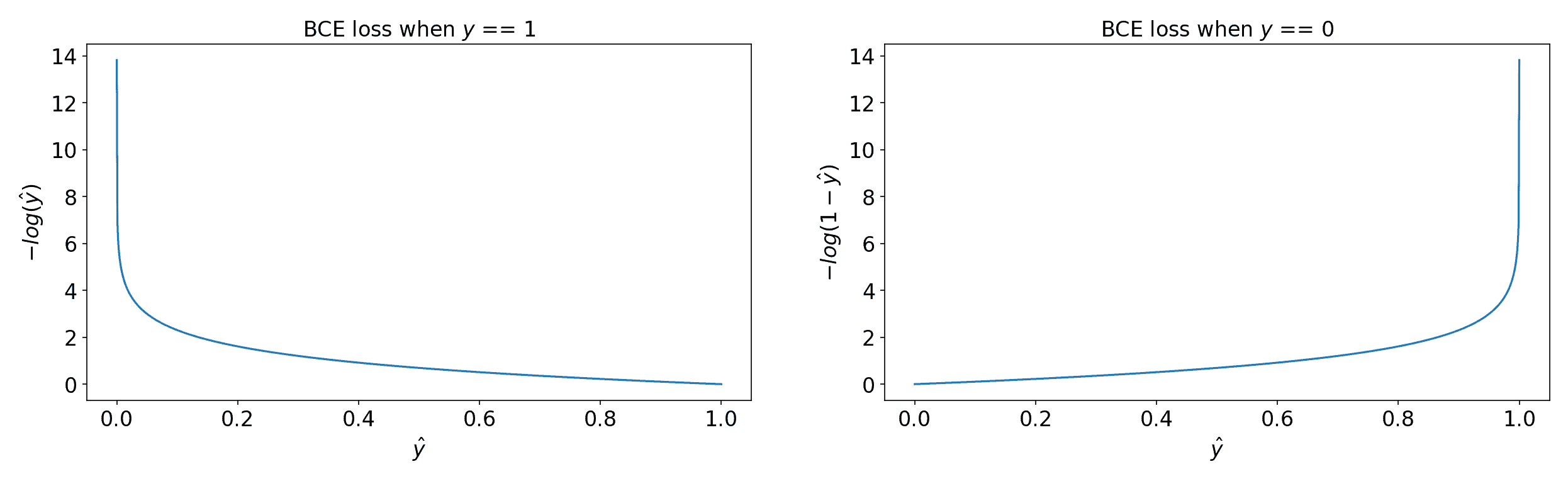

3. Cost Function for Logistic Regression

1. Why Do We Need a Cost Function?

In linear regression, we use the Mean Squared Error (MSE) as the cost function:

$$J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

However, this cost function does not work well for logistic regression because:

- The hypothesis function in logistic regression is non-linear due to the sigmoid function.

- Using squared errors results in a non-convex function with multiple local minima, making optimization difficult.

We need a different cost function that:

✅ Works well with the sigmoid function.

✅ Is convex, so gradient descent can efficiently minimize it.

2. Simplified Cost Function for Logistic Regression

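Instead of using squared errors, we use the log loss (cross-entropy) function:

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1 - y^{(i)}\right) \log\left(1 - h_\theta(x^{(i)})\right) \right]$$

Where:

- $y^{(i)}$ is the true label (0 or 1).

- $h_\theta(x^{(i)})$ is the predicted probability from the sigmoid function.

This function ensures:

- If $y = 1$ → Only the first term remains: $-\log(h_\theta(x))$, which is close to 0 if $h_\theta(x) \approx 1$ (correct prediction).

- If $y = 0$ → Only the second term remains: $-\log(1 - h_\theta(x))$, which is close to 0 if $h_\theta(x) \approx 0$ (correct prediction).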

✅ Interpretation: The function penalizes incorrect predictions heavily while rewarding correct predictions.
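The log loss takes only a few lines of NumPy. In this sketch, the name `logistic_cost` and the example probabilities are our own. The function clips probabilities away from 0 and 1 to avoid `log(0)`, and the result is cross-checked against `sklearn.metrics.log_loss`.

```python
import numpy as np
from sklearn.metrics import log_loss

def logistic_cost(y, p, eps=1e-15):
    """Average log loss J = -(1/m) * sum(y*log(p) + (1-y)*log(1-p)).

    p holds predicted probabilities h_theta(x^(i)); clipping avoids log(0).
    """
    p = np.clip(p, eps, 1 - eps)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

y = np.array([1, 0, 1, 0])
confident_right = np.array([0.95, 0.05, 0.90, 0.10])
confident_wrong = np.array([0.05, 0.95, 0.10, 0.90])

print(logistic_cost(y, confident_right))   # small
print(logistic_cost(y, confident_wrong))   # large
print(log_loss(y, confident_right))        # scikit-learn's implementation, for comparison
```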

3. Intuition Behind the Cost Function

Let’s break it down:

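When $y = 1$, the cost for a single example simplifies to:

$$\text{Cost} = -\log\left(h_\theta(x)\right)$$

This means:

- If $h_\theta(x) \to 1$ (correct prediction), $\text{Cost} \to 0$ → No penalty.

- If $h_\theta(x) \to 0$ (wrong prediction), $\text{Cost} \to \infty$ → High penalty!

When $y = 0$, the cost for a single example simplifies to:

$$\text{Cost} = -\log\left(1 - h_\theta(x)\right)$$

This means:

- If $h_\theta(x) \to 0$ (correct prediction), $\text{Cost} \to 0$ → No penalty.

- If $h_\theta(x) \to 1$ (wrong prediction), $\text{Cost} \to \infty$ → High penalty!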

✅ Key Takeaway:

The function assigns very high penalties for incorrect predictions, encouraging the model to learn correct classifications.

4. Gradient Descent for Logistic Regression

1. Why Do We Need Gradient Descent?

In logistic regression, our goal is to find the parameters $\theta$ that minimize the cost function:

$$\min_{\theta} J(\theta)$$

Linear regression has a closed-form solution (the normal equation), but logistic regression does not. We therefore use gradient descent to update $\theta$ iteratively until we approach the minimum cost.

2. Gradient Descent Algorithm

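Gradient descent updates the parameters using the rule:

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta)$$

Where:

- $\alpha$ is the learning rate (step size).

- $\frac{\partial}{\partial \theta_j} J(\theta)$ is the $j$-th component of the gradient (the direction of steepest increase), so subtracting it moves downhill.

For logistic regression, the derivative of the cost function is:

$$\frac{\partial}{\partial \theta_j} J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

Thus, the update rule becomes (applied simultaneously for all $j$):

$$\theta_j := \theta_j - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

This looks identical to the linear regression update. The difference is that here $h_\theta(x) = g(\theta^T x)$ is the sigmoid of the linear combination.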

✅ Key Insight:

- We compute the error: $h_\theta(x^{(i)}) - y^{(i)}$.

- Multiply it by the feature $x_j^{(i)}$.

- Average over all training examples.

- Scale by $\alpha$ and update $\theta_j$.
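Putting the update rule together, a minimal batch gradient descent for unregularized logistic regression might look like the following. The dataset, the learning rate `alpha=0.1`, and the iteration count are illustrative assumptions. `X` is assumed to include a bias column of ones, so `theta[0]` plays the role of $\theta_0$.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(theta, X, y):
    h = sigmoid(X @ theta)
    return -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))

def gradient_descent(X, y, alpha=0.1, iters=2000):
    """Batch gradient descent for unregularized logistic regression.

    X must include a leading column of ones so theta[0] acts as theta_0.
    All theta_j are updated simultaneously from the same error vector.
    """
    m, n = X.shape
    theta = np.zeros(n)
    history = []
    for _ in range(iters):
        error = sigmoid(X @ theta) - y          # h_theta(x^(i)) - y^(i)
        grad = X.T @ error / m                  # (1/m) * sum(error * x_j^(i)) for every j
        theta = theta - alpha * grad
        history.append(cost(theta, X, y))
    return theta, history

# Small synthetic 1-feature dataset with overlapping classes (illustrative)
rng = np.random.default_rng(0)
x = np.concatenate([rng.normal(-1, 1, 50), rng.normal(1, 1, 50)])
y = np.concatenate([np.zeros(50), np.ones(50)])
X = np.column_stack([np.ones_like(x), x])

theta, history = gradient_descent(X, y)
print("theta:", theta)
print(f"cost: {history[0]:.4f} -> {history[-1]:.4f}")
```

The update is simultaneous because `grad` is computed for all $j$ at once from a single error vector. The classes overlap on purpose: on perfectly separable data, unregularized weights keep growing without converging.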

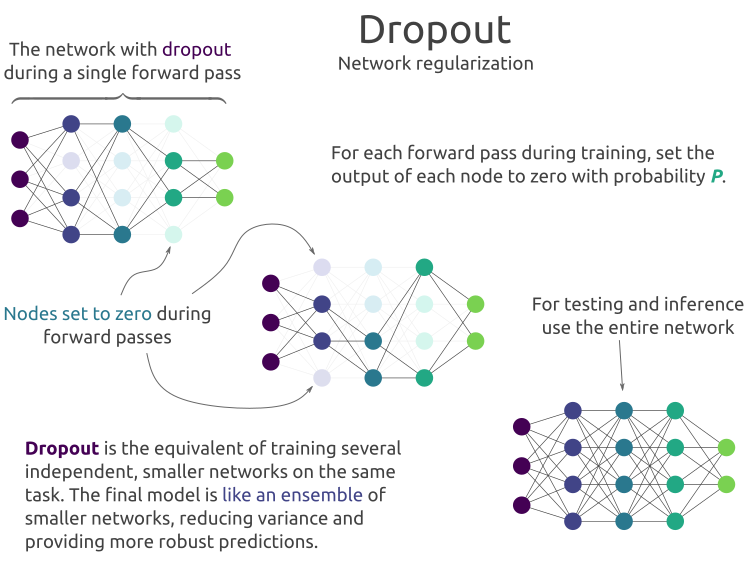

Overfitting and Regularization

- 1. The Problem of Overfitting

- 2. Addressing Overfitting

- 3. Regularized Cost Function

- 4. Regularized Linear Regression

- 5. Regularized Logistic Regression

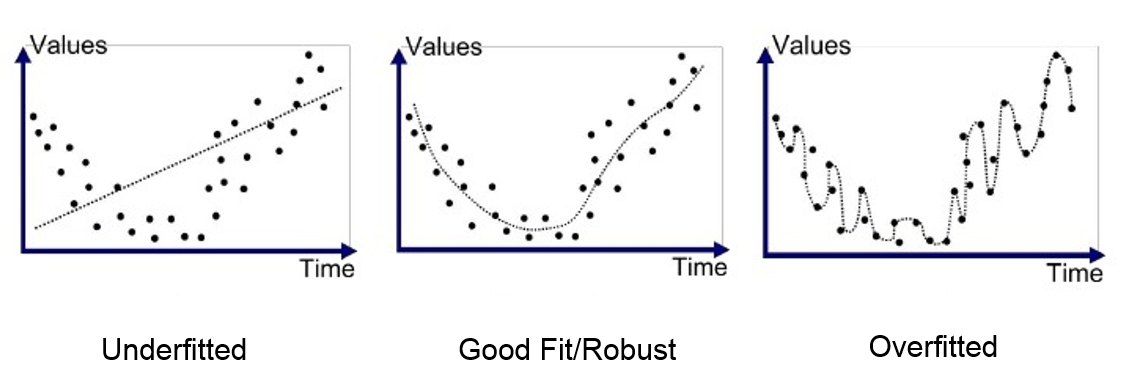

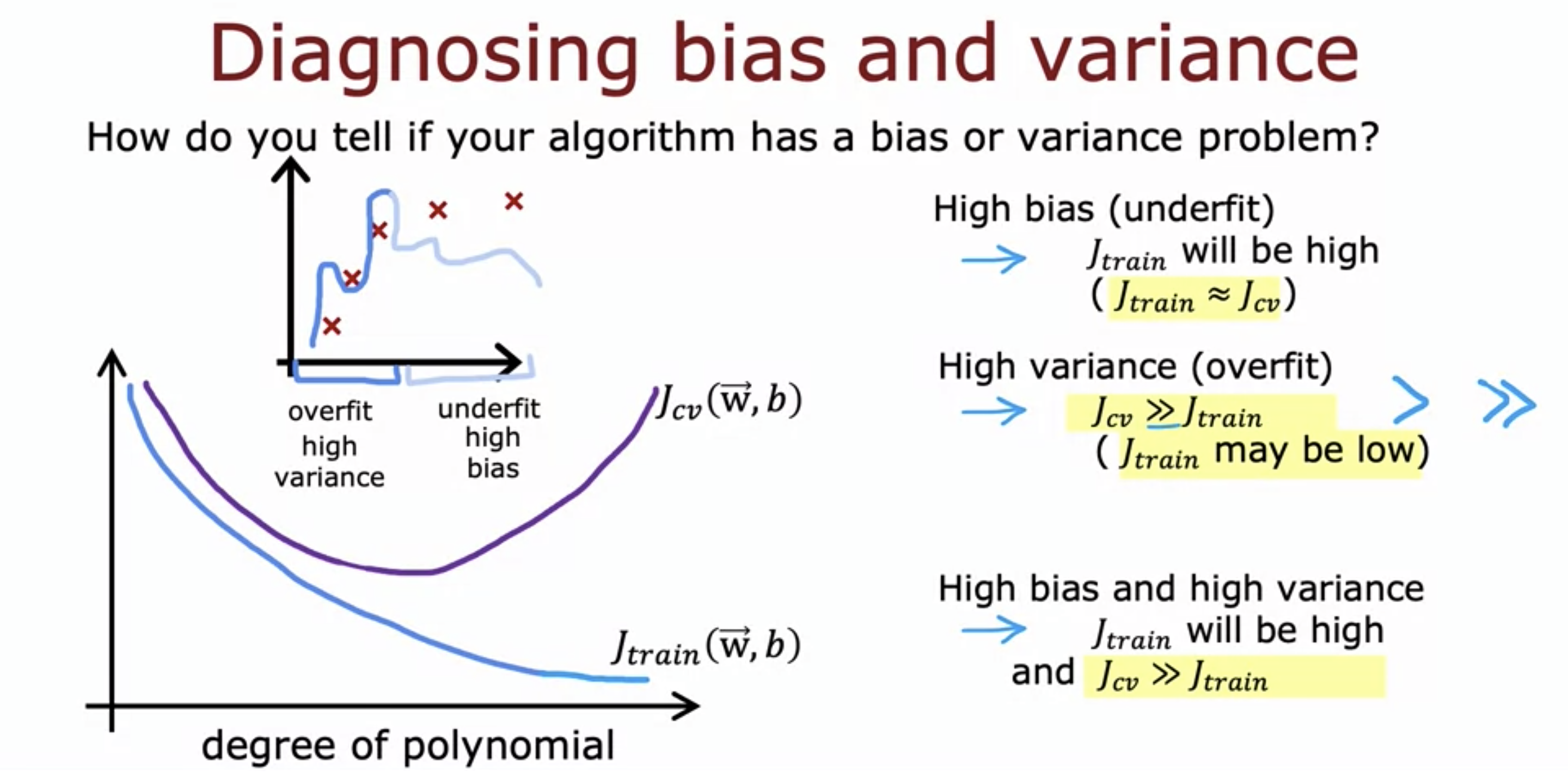

1. The Problem of Overfitting

What is Overfitting?

Overfitting occurs when a machine learning model learns the training data too well, capturing noise and random fluctuations rather than the underlying pattern. As a result, the model performs well on training data but generalizes poorly to unseen data.

Symptoms of Overfitting

- High training accuracy but low test accuracy (poor generalization).

- Complex decision boundaries that fit training data too closely.

- Large model parameters (high magnitude weights), leading to excessive sensitivity to small changes in input data.

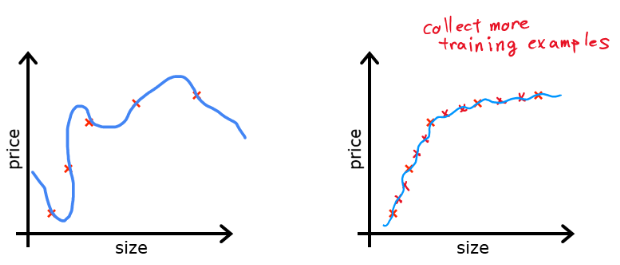

Example of Overfitting in Regression

Consider a polynomial regression model. If we fit a high-degree polynomial to data, the model may pass through all training points perfectly but fail to predict new data correctly.
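The high-degree polynomial effect can be reproduced with a small experiment. This sketch fits polynomials of degree 1, 3, and 12 to 15 noisy samples of a sine curve, then reports training and test MSE. The target function, noise level, and degrees are all illustrative choices. Training error can only decrease or stay the same as the degree grows, because each model contains the previous one. Test error typically rises again at degree 12, although the exact numbers depend on the random draw.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(0)

def true_fn(x):
    # Underlying pattern the model should learn (illustrative choice)
    return np.sin(2 * np.pi * x)

# Small noisy training set and a larger test set from the same distribution
x_train = np.sort(rng.uniform(0, 1, 15)).reshape(-1, 1)
y_train = true_fn(x_train).ravel() + rng.normal(0, 0.2, 15)
x_test = rng.uniform(0, 1, 200).reshape(-1, 1)
y_test = true_fn(x_test).ravel() + rng.normal(0, 0.2, 200)

results = {}
for degree in (1, 3, 12):
    model = make_pipeline(PolynomialFeatures(degree), LinearRegression())
    model.fit(x_train, y_train)
    train_mse = mean_squared_error(y_train, model.predict(x_train))
    test_mse = mean_squared_error(y_test, model.predict(x_test))
    results[degree] = (train_mse, test_mse)
    print(f"degree={degree:2d}  train MSE={train_mse:.3f}  test MSE={test_mse:.3f}")
```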

Overfitting vs. Underfitting

| Fit | Training Error | Test Error | Generalization |
|---|---|---|---|
| Underfitting (High Bias) | High | High | Poor |
| Good Fit | Low | Low | Good |
| Overfitting (High Variance) | Very Low | High | Poor |

Visualization of Overfitting

- Left (Underfitting): The model is too simple and cannot capture the trend.

- Middle (Good Fit): The model captures the pattern without overcomplicating.

- Right (Overfitting): The model follows the training data too closely, failing on new inputs.

2. Addressing Overfitting

Overfitting occurs when a model learns noise instead of the underlying pattern in the data. To address overfitting, we can apply several strategies to improve the model’s ability to generalize to unseen data.

1. Collecting More Data

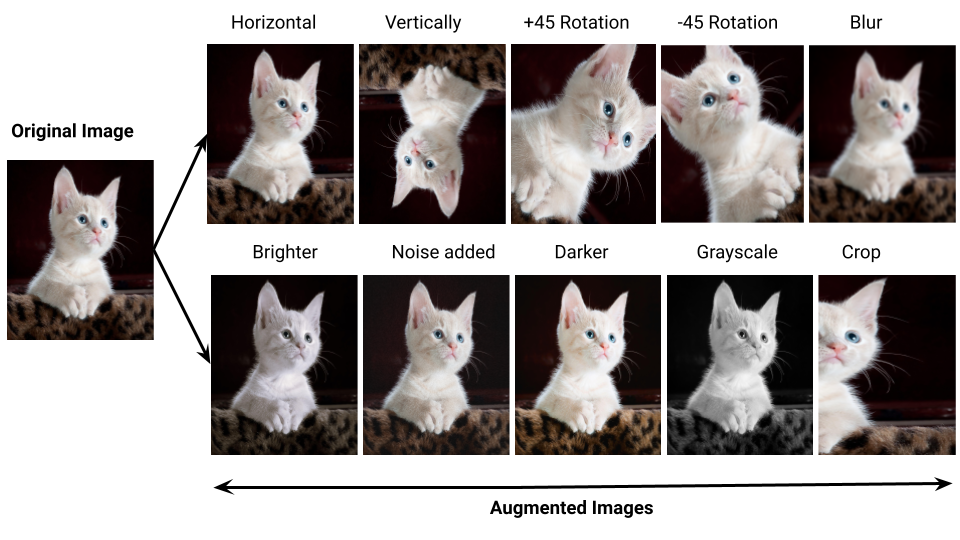

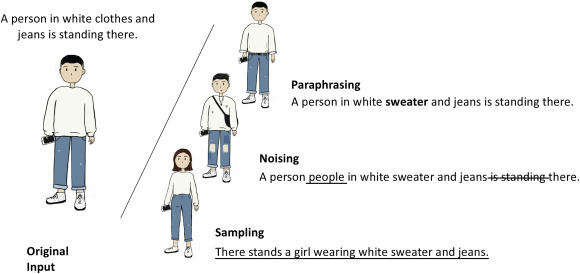

- More training data helps the model capture real patterns rather than memorizing noise.

- Especially effective for deep learning models, where small datasets tend to overfit quickly.

- Not always feasible, but can be supplemented with data augmentation techniques.

2. Feature Selection & Engineering

- Removing irrelevant or redundant features reduces model complexity.

- Techniques like Principal Component Analysis (PCA) help reduce dimensionality.

- Engineering more informative features (e.g., meaningful interaction terms) can improve generalization. Adding many polynomial terms, however, increases model complexity and can make overfitting worse.

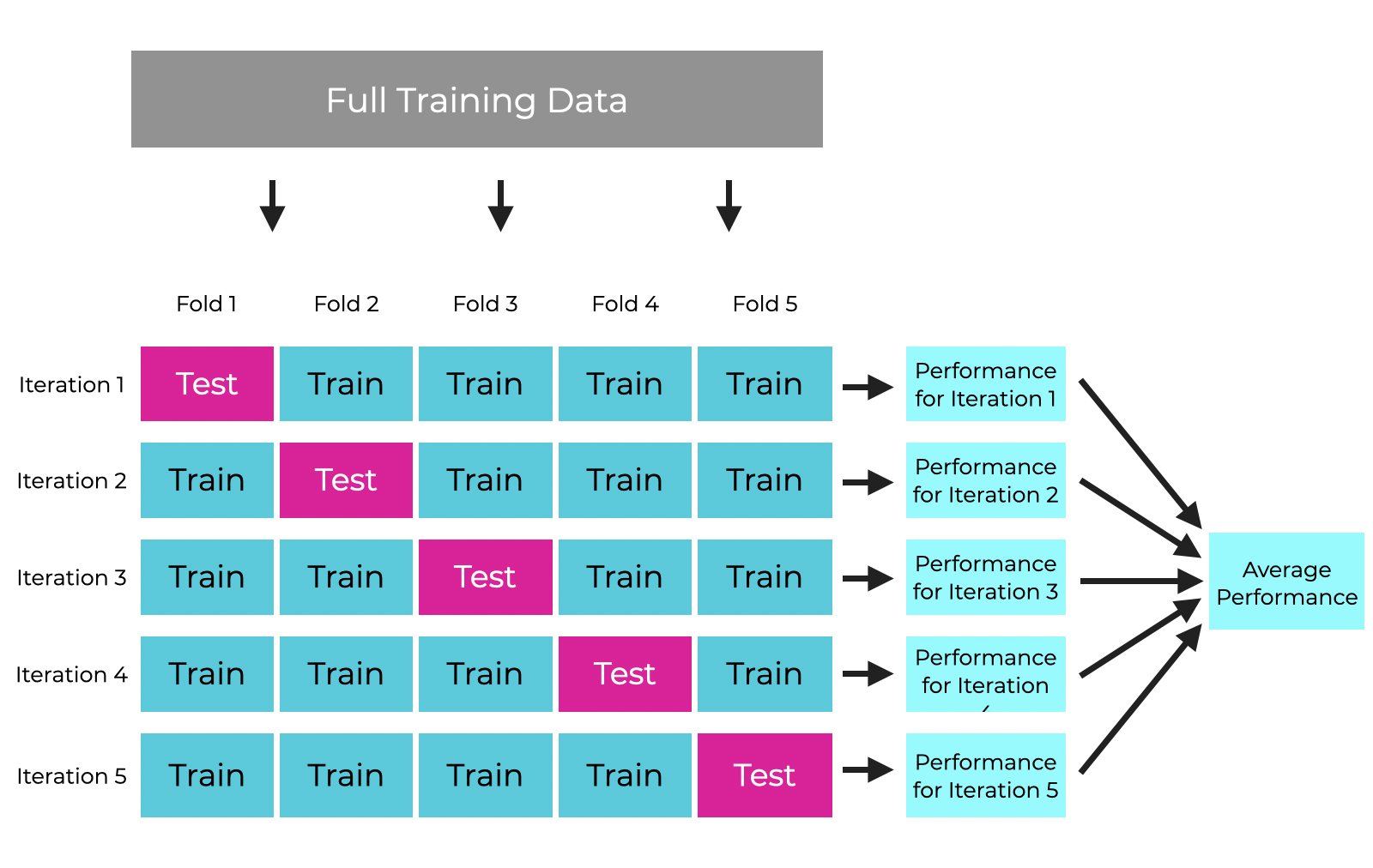

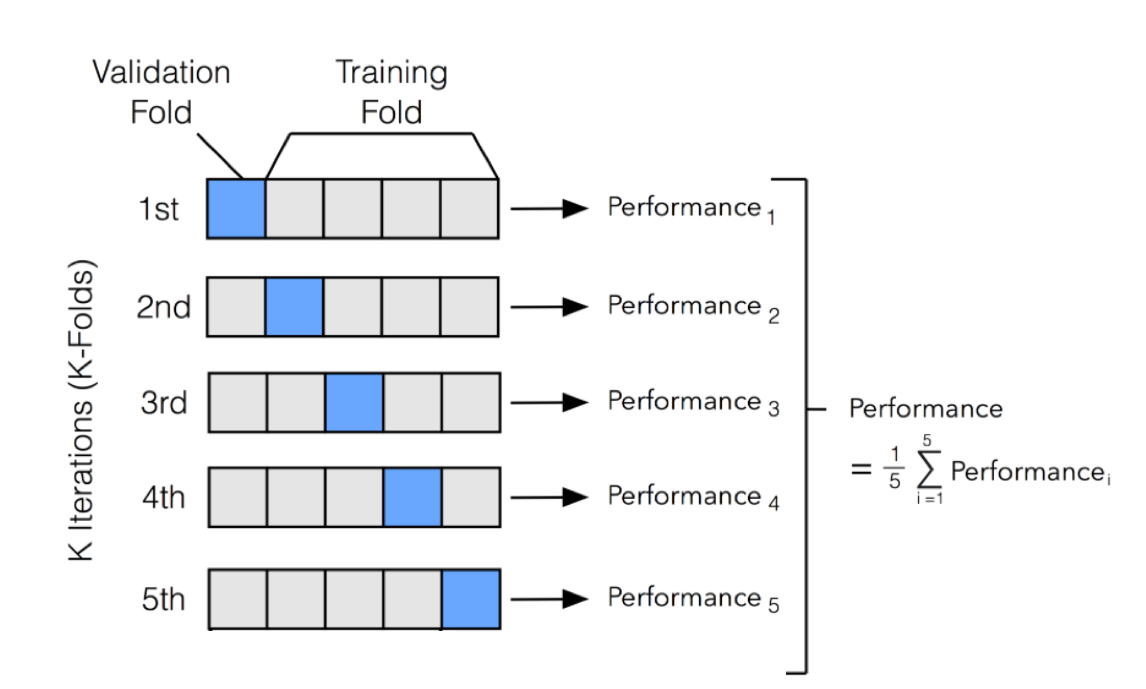

3. Cross-Validation

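- k-fold cross-validation splits the data into k folds. The model is trained on k-1 folds and evaluated on the remaining one, rotating through all folds. This estimates how well the model performs across different data splits.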

- Helps detect overfitting early by testing the model on multiple subsets of data.

- Leave-one-out cross-validation (LOOCV) is another approach, especially useful for small datasets.
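In scikit-learn, both k-fold and leave-one-out cross-validation are available through `cross_val_score`. The sketch below runs both on the Iris dataset, with `LogisticRegression(max_iter=1000)` as an arbitrary example model:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# 5-fold CV: train on 4/5 of the data, score on the held-out 1/5, rotate 5 times
scores = cross_val_score(model, X, y, cv=5)
print("fold accuracies:", scores.round(3))
print(f"mean = {scores.mean():.3f}, std = {scores.std():.3f}")

# Leave-one-out: m folds, each holding out a single example (expensive for large m)
loo_scores = cross_val_score(model, X, y, cv=LeaveOneOut())
print(f"LOOCV accuracy = {loo_scores.mean():.3f} over {len(loo_scores)} folds")
```

The overfitting signal to look for is a large gap between training accuracy and the cross-validated mean. A high standard deviation across folds suggests that the estimate itself is noisy.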

4. Regularization as a Solution

- Regularization techniques add constraints to the model to prevent excessive complexity.

- L1 (Lasso) and L2 (Ridge) Regularization introduce penalties for large coefficients.

- We will explore regularized cost functions in the next section.

By applying these techniques, we control model complexity and improve generalization performance.

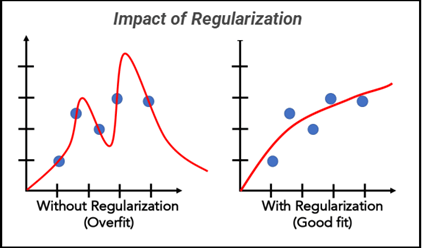

3. Regularized Cost Function

Overfitting often occurs when a model learns excessive complexity, leading to poor generalization. One way to control this is by modifying the cost function to penalize overly complex models.

1. Why Modify the Cost Function?

The standard cost function in regression or classification only minimizes the error on training data, which can result in large coefficients (weights) that overfit the data.

By adding a regularization term, we discourage large weights, making the model simpler and reducing overfitting.

2. Adding Regularization Term

Regularization adds a penalty term to the cost function that shrinks the model parameters. The two most common types of regularization are:

L2 Regularization (Ridge Regression)

In L2 regularization, we add the sum of squared weights to the cost function:

$$J_{\text{reg}}(\theta) = J(\theta) + \frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2$$

- $\lambda$ (regularization parameter) controls how much regularization is applied.

- Higher $\lambda$ values force the model to reduce the magnitude of parameters, preventing overfitting.

- L2 regularization keeps all features but reduces their impact.

L1 Regularization (Lasso Regression)

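In L1 regularization, we add the sum of the absolute values of the weights:

$$J_{\text{reg}}(\theta) = J(\theta) + \frac{\lambda}{m} \sum_{j=1}^{n} |\theta_j|$$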

- L1 regularization pushes some coefficients to zero, effectively performing feature selection.

- It results in sparser models, which are useful when many features are irrelevant.
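The difference between the two penalties shows up directly in the fitted coefficients. This sketch uses `make_regression` to build data in which only 3 of 10 features matter (an illustrative setup). scikit-learn's `alpha` plays the role of $\lambda$. However, `Ridge` and `Lasso` scale their objectives differently (per their documentation), so the same `alpha` value does not mean the same penalty strength for both.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# 10 features, only 3 of which actually influence the target
X, y = make_regression(n_samples=100, n_features=10, n_informative=3,
                       noise=5.0, random_state=0)

ridge = Ridge(alpha=1.0).fit(X, y)   # L2 penalty
lasso = Lasso(alpha=1.0).fit(X, y)   # L1 penalty

print("Ridge:", np.round(ridge.coef_, 2))
print("Lasso:", np.round(lasso.coef_, 2))
print("zero coefficients -> Ridge:", np.sum(ridge.coef_ == 0), " Lasso:", np.sum(lasso.coef_ == 0))
```

Ridge shrinks every coefficient but leaves none exactly at zero. Lasso sets most of the irrelevant ones exactly to zero, which is the feature-selection effect described above.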

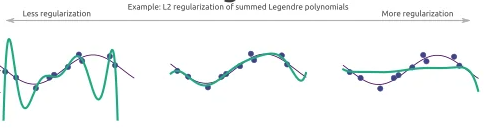

3. Effect of Regularization on Model Complexity

Regularization controls model complexity by restricting parameter values:

- No Regularization ($\lambda = 0$) → The model can fit the training data too closely (overfitting).

- Small $\lambda$ → The model is still flexible but generalizes better.

- Large $\lambda$ → The model becomes too simple (underfitting), losing important patterns.
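To see the shrinkage as $\lambda$ grows, this sketch fits scikit-learn's `Ridge` for a few `alpha` values (scikit-learn's name for $\lambda$) and prints the norm of the coefficient vector. The data and the `alpha` grid are illustrative. Only the trend matters here: the ridge coefficient norm decreases as the penalty strength increases.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge

X, y = make_regression(n_samples=50, n_features=5, n_informative=5,
                       noise=10.0, random_state=1)

norms = []
for lam in (0.01, 1.0, 100.0, 10000.0):
    coef = Ridge(alpha=lam).fit(X, y).coef_
    norms.append(np.linalg.norm(coef))
    print(f"alpha={lam:>8}: ||theta|| = {norms[-1]:.2f}")
```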

Visualization of Regularization Effects

- Left (No Regularization): The model overfits training data.

- Middle (Moderate Regularization): The model generalizes well.

- Right (Strong Regularization): The model underfits the data.

4. Regularized Linear Regression

Linear regression without regularization can suffer from overfitting, especially when the model has too many features or when training data is limited. Regularization helps by constraining the model's parameters, preventing extreme values that lead to high variance.

1. Linear Regression Cost Function (Without Regularization)

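The standard cost function for linear regression is:

$$J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

where:

- $h_\theta(x^{(i)}) = \theta^T x^{(i)}$ is the hypothesis (predicted value),

- $m$ is the number of training examples.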

This function minimizes the sum of squared errors but does not impose any restrictions on the parameter values, which can lead to overfitting.

2. Regularized Cost Function for Linear Regression

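To prevent overfitting, we add an L2 regularization term to penalize large parameter values. The resulting model is also known as Ridge Regression:

$$J(\theta) = \frac{1}{2m} \left[ \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2 + \lambda \sum_{j=1}^{n} \theta_j^2 \right]$$

where:

- $\lambda$ is the regularization parameter that controls the penalty,

- The term $\frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2$ penalizes large values of $\theta_j$,

- $\theta_0$ (bias term) is not regularized (the penalty sum starts at $j = 1$).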

3. Effect of Regularization in Gradient Descent

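Regularization modifies the gradient descent update rule (repeated until convergence, with simultaneous updates):

$$\theta_0 := \theta_0 - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)$$

$$\theta_j := \theta_j - \alpha \left[ \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)} + \frac{\lambda}{m} \theta_j \right], \quad j = 1, \dots, n$$

Equivalently, $\theta_j := \theta_j \left(1 - \alpha \frac{\lambda}{m}\right) - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$.

- The additional term $\frac{\lambda}{m} \theta_j$ shrinks the parameter values on every step, since the factor $1 - \alpha \frac{\lambda}{m}$ is slightly less than 1.

- When $\lambda$ is too large, the model underfits (too simple).

- When $\lambda$ is too small, the model overfits (too complex).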

Effect of Regularization on Parameters

- If $\lambda = 0$: Regularization is off → Overfitting risk.

- If $\lambda$ is too high: Model is too simple → Underfitting.

- If $\lambda$ is well chosen: Good generalization → Balanced model.
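The regularized update rule translates directly to NumPy. In this sketch, $\theta_0$ is kept as a separate scalar so that it is easy to leave unpenalized. The synthetic data, learning rate, and iteration count are assumptions chosen for illustration, and `regularized_linear_gd` is a hypothetical helper name.

```python
import numpy as np

def regularized_linear_gd(X, y, lam=1.0, alpha=0.1, iters=5000):
    """Batch gradient descent on the L2-regularized linear regression cost.

    X is (m, n) without a bias column; theta_0 is tracked separately and is not penalized.
    """
    m, n = X.shape
    theta0 = 0.0
    theta = np.zeros(n)
    for _ in range(iters):
        error = X @ theta + theta0 - y                # h_theta(x^(i)) - y^(i)
        grad0 = error.mean()                          # no regularization term for theta_0
        grad = X.T @ error / m + (lam / m) * theta    # data term + (lambda/m) * theta_j
        theta0 -= alpha * grad0                       # both gradients came from the same error
        theta -= alpha * grad                         # vector, so the update is simultaneous
    return theta0, theta

# Synthetic data (illustrative): y = 4 + 3*x1 - 2*x2 + 0.5*x3 + noise
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = 4 + X @ np.array([3.0, -2.0, 0.5]) + rng.normal(0, 0.5, 100)

for lam in (0.0, 10.0, 100.0):
    theta0, theta = regularized_linear_gd(X, y, lam=lam)
    print(f"lambda={lam:>5}: theta0={theta0:.3f}, theta={np.round(theta, 3)}")
```

As $\lambda$ grows, the printed $\theta_1, \theta_2, \theta_3$ shrink toward zero. $\theta_0$ is not penalized, so it changes much less.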

4. Normal Equation with Regularization

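For linear regression, we can solve for $\theta$ directly using the Normal Equation, which avoids gradient descent:

$$\theta = \left( X^T X + \lambda L \right)^{-1} X^T y, \qquad L = \begin{bmatrix} 0 & & & \\ & 1 & & \\ & & \ddots & \\ & & & 1 \end{bmatrix}$$

where:

- $X$ is the $m \times (n+1)$ design matrix (with a leading column of ones). $L$ is the $(n+1) \times (n+1)$ identity matrix with its top-left entry set to 0, so $\theta_0$ is not regularized.

- For $\lambda > 0$, adding $\lambda L$ makes $X^T X + \lambda L$ invertible, which also mitigates problems caused by multicollinearity.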
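Below is a NumPy sketch of the regularized normal equation, checked against scikit-learn's `Ridge`. With the cost scaled as $\frac{1}{2m}[\dots]$ above, `Ridge(alpha=lam)` minimizes the same objective: scikit-learn documents its ridge loss as $\lVert y - Xw \rVert^2 + \alpha \lVert w \rVert^2$ with an unpenalized intercept. The two results should therefore agree. The data are synthetic and illustrative.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(1)
m, n = 50, 4
X = rng.normal(size=(m, n))
y = 2 + X @ np.array([1.0, 0.0, -3.0, 2.0]) + rng.normal(0, 0.3, m)
lam = 5.0

Xb = np.column_stack([np.ones(m), X])   # prepend the bias column x_0 = 1
L = np.eye(n + 1)
L[0, 0] = 0.0                           # do not regularize theta_0
theta = np.linalg.solve(Xb.T @ Xb + lam * L, Xb.T @ y)

ridge = Ridge(alpha=lam).fit(X, y)
print("normal equation:", np.round(theta, 4))
print("sklearn Ridge:  ", np.round(np.r_[ridge.intercept_, ridge.coef_], 4))
```

`np.linalg.solve` solves the linear system directly instead of forming the inverse explicitly. It produces the same solution and is the more numerically stable choice.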

5. Summary

✅ Regularization reduces overfitting by penalizing large weights.

✅ L2 regularization (Ridge Regression) modifies the cost function by adding $\frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2$.

✅ Gradient Descent and the Normal Equation both adjust to include regularization.

✅ Choosing $\lambda$ is critical: too high → underfitting, too low → overfitting.

5. Regularized Logistic Regression

Logistic regression is commonly used for classification tasks, but like linear regression, it can overfit when there are too many features or limited data. Regularization helps control overfitting by penalizing large parameter values.

1. Logistic Regression Cost Function (Without Regularization)

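The standard cost function for logistic regression is:

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1 - y^{(i)}\right) \log\left(1 - h_\theta(x^{(i)})\right) \right]$$

where:

- $h_\theta(x) = g(\theta^T x)$, with $g$ the sigmoid function,

- $y^{(i)}$ is the actual class label (0 or 1),

- $m$ is the number of training examples.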

This cost function does not include regularization, meaning the model may assign large weights to some features, leading to overfitting.

2. Regularized Cost Function for Logistic Regression

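To reduce overfitting, we add an L2 regularization term, similar to regularized linear regression:

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1 - y^{(i)}\right) \log\left(1 - h_\theta(x^{(i)})\right) \right] + \frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2$$

where:

- $\lambda$ is the regularization parameter (controls penalty),

- The term $\frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2$ discourages large parameter values,

- $\theta_0$ (bias term) is NOT regularized.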

✅ Effect of Regularization

- Small $\lambda$ → Model may overfit (complex decision boundary).

- Large $\lambda$ → Model may underfit (too simple, missing important features).

- Well-chosen $\lambda$ → Model generalizes well.

3. Effect of Regularization in Gradient Descent

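Regularization modifies the gradient descent update rule:

$$\theta_0 := \theta_0 - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)$$

$$\theta_j := \theta_j - \alpha \left[ \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)} + \frac{\lambda}{m} \theta_j \right], \quad j = 1, \dots, n$$

The form matches regularized linear regression, except that here $h_\theta(x) = g(\theta^T x)$.

- The regularization term $\frac{\lambda}{m} \theta_j$ shrinks the weight values over time.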

- Helps avoid models that memorize training data instead of learning patterns.
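To implement the regularized update, it helps to have the cost and the gradient in one function. In the sketch below, the name `reg_logistic_cost_grad` and the random test data are our own. The function excludes $\theta_0$ from both the penalty and its gradient. It then checks the analytic gradient against central finite differences, a standard sanity check before running gradient descent.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def reg_logistic_cost_grad(theta, X, y, lam):
    """L2-regularized logistic cost and gradient.

    X has a leading column of ones; theta[0] (the bias theta_0) is excluded from the penalty.
    """
    m = len(y)
    h = sigmoid(X @ theta)
    cost = -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))
    cost += lam / (2 * m) * np.sum(theta[1:] ** 2)

    grad = X.T @ (h - y) / m
    grad[1:] += (lam / m) * theta[1:]      # penalty gradient, skipping theta_0
    return cost, grad

rng = np.random.default_rng(0)
X = np.column_stack([np.ones(20), rng.normal(size=(20, 2))])
y = (rng.random(20) > 0.5).astype(float)
theta = rng.normal(size=3)
cost, grad = reg_logistic_cost_grad(theta, X, y, lam=1.0)

# Central finite differences as a sanity check on the analytic gradient
eps = 1e-6
numeric = np.array([
    (reg_logistic_cost_grad(theta + eps * e, X, y, 1.0)[0]
     - reg_logistic_cost_grad(theta - eps * e, X, y, 1.0)[0]) / (2 * eps)
    for e in np.eye(3)
])
print("analytic:", grad)
print("numeric: ", numeric)
```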

4. Decision Boundary and Regularization

Regularization also affects decision boundaries:

- Without regularization ($\lambda = 0$): Complex boundaries that fit noise.

- With moderate $\lambda$: Simpler boundaries that generalize better.

- With very high $\lambda$: Too simplistic boundaries that underfit.
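scikit-learn's `LogisticRegression` exposes regularization through `C`, the inverse regularization strength. Its documentation writes the L2 objective as $\frac{1}{2}\lVert w \rVert^2 + C \sum_i \ell_i$. Multiplying the regularized cost above by $m/\lambda$ gives exactly that form, so $C = 1/\lambda$. The sketch sweeps three $\lambda$ values on the built-in breast cancer dataset (an illustrative choice) and standardizes the features first so the solver converges.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

norms = {}
for lam in (0.01, 1.0, 100.0):
    # C is the inverse regularization strength; with the cost scaled as
    # in these notes, C = 1 / lambda gives the same minimizer
    clf = make_pipeline(StandardScaler(), LogisticRegression(C=1.0 / lam, max_iter=5000))
    clf.fit(X, y)
    coef = clf[-1].coef_.ravel()
    norms[lam] = np.linalg.norm(coef)
    print(f"lambda={lam:>6}: ||theta|| = {norms[lam]:.2f}, train accuracy = {clf.score(X, y):.3f}")
```

Larger $\lambda$ (smaller `C`) shrinks the weights. Whether training accuracy drops noticeably depends on the data.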

5. Summary

✅ Regularization in logistic regression prevents overfitting by controlling parameter sizes.

✅ L2 regularization (the same penalty used in Ridge Regression) adds $\frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2$ to the cost function.

✅ Gradient Descent is adjusted to shrink large weights.

✅ Choosing $\lambda$ is critical for a well-generalized model.

Scikit-learn: Practical Applications

- 1. Introduction to Scikit-Learn

- 2. Linear Regression with Scikit-Learn

- 3. Multiple Linear Regression with Scikit-Learn

- 4. Polynomial Regression with Scikit-Learn

- 5. Binary Classification with Logistic Regression

- 6. Multi-Class Classification with Logistic Regression

1. Introduction to Scikit-Learn

Scikit-Learn is one of the most popular and powerful Python libraries for machine learning. It provides efficient implementations of various machine learning algorithms and tools for data preprocessing, model selection, and evaluation. It is built on top of NumPy, SciPy, and Matplotlib, making it highly compatible with the scientific computing ecosystem in Python.

Why Use Scikit-Learn?

- Easy to Use: Provides a simple and consistent API for machine learning models.

- Comprehensive: Includes a wide range of algorithms, including regression, classification, clustering, and dimensionality reduction.

- Efficient: Implements fast and optimized versions of ML algorithms.

- Integration: Works well with other libraries like Pandas, NumPy, and Matplotlib.

Loading Built-in Datasets in Scikit-Learn

Scikit-Learn provides several built-in datasets that can be used for practice and experimentation. Some common datasets include:

- Iris Dataset (`load_iris`): Classification dataset for flower species.

- Boston Housing Dataset (`load_boston`): Regression dataset for predicting house prices. It was deprecated in scikit-learn 1.0 and removed in 1.2; `fetch_california_housing` is a commonly used replacement.

- Digits Dataset (`load_digits`): Handwritten digit classification.

- Wine Dataset (`load_wine`): Classification dataset for different types of wine.

- Breast Cancer Dataset (`load_breast_cancer`): Binary classification dataset for cancer diagnosis.

Example: Loading and Exploring the Iris Dataset

```python
from sklearn.datasets import load_iris
import pandas as pd

# Load the dataset
iris = load_iris()

# Convert to DataFrame
iris_df = pd.DataFrame(iris.data, columns=iris.feature_names)

# Add target labels
iris_df['target'] = iris.target

# Display first few rows
print(iris_df.head())
```

Splitting Data: Train-Test Split

To evaluate a machine learning model, we need to split the data into a training set and a test set. This ensures that we can measure the model’s performance on unseen data.

Scikit-Learn provides train_test_split for this purpose:

Example: Splitting the Iris Dataset

```python
from sklearn.model_selection import train_test_split

# Features and target variable
X = iris.data
y = iris.target

# Split into 80% training and 20% testing
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
print(f"Training samples: {len(X_train)}, Testing samples: {len(X_test)}")
```

- `test_size=0.2` means 20% of the data is reserved for testing.

- `random_state=42` fixes the random shuffle, which ensures reproducibility.

By following these steps, we have successfully loaded a dataset and prepared it for machine learning. In the next section, we will explore how to apply Linear Regression using Scikit-Learn.

Train-Test Split and Why It Matters

When training a machine learning model, we must evaluate its performance on unseen data to ensure it generalizes well. This is done by splitting the dataset into training and test sets.

Why Not Use 100% of Data for Training?

If we train the model using all available data, we won’t have any independent data to check how well it performs on new inputs. Overfitting, where the model memorizes the training data instead of learning general patterns, would then go undetected, because training performance alone looks deceptively good.

Why Not Use 90% or More for Testing?

A large test set gives a more reliable estimate of real-world performance, but it reduces the amount of data available for training. A model trained on very little data cannot reliably learn the underlying patterns. Depending on its complexity, it may underfit or overfit the few examples it sees.

What’s the Ideal Train-Test Split?

A commonly used ratio is 80% for training, 20% for testing. However, this depends on:

- Dataset Size: If data is limited, we may use a 90/10 split to keep more training data.

- Model Complexity: Simpler models may work with less training data, but deep learning models require more.

- Use Case: In critical applications (e.g., medical diagnosis), a larger test set (e.g., 30%) is preferred for reliable evaluation.

Key Takeaways

✅ 80/20 is a good starting point, but can vary based on dataset size and model needs.

✅ Too small a test set → Unreliable performance evaluation.

✅ Too large a test set → Model may not have enough training data to learn properly.

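✅ Shuffle the data before splitting to avoid biased splits (`train_test_split` does this by default). The exception is time-ordered data, where shuffling would leak future information into training.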

2. Linear Regression with Scikit-Learn

1. Introduction to Linear Regression

Linear regression is a fundamental supervised learning algorithm used to model the relationship between a dependent variable (target) and one or more independent variables (features). It assumes a linear relationship between input features and the output.

The mathematical form of a simple linear regression model is:

$$\hat{y} = \theta_0 + \theta_1 x$$

Where:

- $\hat{y}$ is the predicted output.

- $x$ is the input feature.

- $\theta_0$ is the intercept (bias).

- $\theta_1$ is the coefficient (weight) of the feature.

Now, let's implement a simple linear regression model using Scikit-Learn.

2. Importing Required Libraries

First, we import necessary libraries for handling data, building the model, and evaluating its performance.

```python
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
```

3. Creating a Sample Dataset

We will generate a synthetic dataset to train and test our linear regression model.

```python
# Generate random data
np.random.seed(42)  # Ensures reproducibility
X = 2 * np.random.rand(100, 1)  # 100 samples, single feature in [0, 2)
y = 4 + 3 * X + np.random.randn(100, 1)  # y = 4 + 3X + Gaussian noise

# Convert to a DataFrame for better visualization
df = pd.DataFrame(np.hstack((X, y)), columns=["Feature X", "Target y"])
print(df.head())
```

- `np.random.rand(100, 1)`: Generates 100 random values uniformly distributed in $[0, 1)$. Multiplying by 2 scales them to $[0, 2)$.

- `y = 4 + 3X + noise`: Defines a linear relationship (true $\theta_0 = 4$, $\theta_1 = 3$) with some added noise.

- We use `pd.DataFrame` to display the first few samples.

4. Splitting Data into Training and Testing Sets

It is crucial to split the dataset into training and testing sets to evaluate model performance on unseen data.

```python
# Splitting dataset into 80% training and 20% testing
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

print(f"Training set size: {X_train.shape[0]} samples")
print(f"Testing set size: {X_test.shape[0]} samples")
```

5. Training the Linear Regression Model

Now, we train a linear regression model using Scikit-Learn's LinearRegression() class.

```python
# Create and train the model
model = LinearRegression()
model.fit(X_train, y_train)

# Print learned parameters (y is 2D, so intercept_ has shape (1,) and coef_ has shape (1, 1))
print(f"Intercept (theta_0): {model.intercept_[0]:.2f}")
print(f"Coefficient (theta_1): {model.coef_[0][0]:.2f}")
```

- `fit(X_train, y_train)`: Trains the model by finding the best-fitting (least-squares) line.

- `model.intercept_`: The learned bias term.

- `model.coef_`: The learned weight for the feature.

6. Making Predictions

After training, we make predictions on the test set.

```python
# Predict on test data
y_pred = model.predict(X_test)

# Compare actual vs predicted values
comparison_df = pd.DataFrame({"Actual": y_test.flatten(), "Predicted": y_pred.flatten()})
print(comparison_df.head())
```

- `model.predict(X_test)`: Generates predictions.

- The DataFrame compares actual vs. predicted values.

7. Evaluating the Model

We use Mean Squared Error (MSE) and R² Score to evaluate model performance.

```python
# Calculate Mean Squared Error (MSE)
mse = mean_squared_error(y_test, y_pred)

# Calculate R-squared score
r2 = r2_score(y_test, y_pred)

print(f"Mean Squared Error: {mse:.2f}")
print(f"R-squared Score: {r2:.2f}")
```

- MSE: Measures average squared differences between actual and predicted values (lower is better).

- R² Score: Measures how well the model explains the variance in the data (closer to 1 is better).
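Both metrics are simple to compute by hand. $\text{MSE} = \frac{1}{m}\sum_{i}(y^{(i)} - \hat{y}^{(i)})^2$, and $R^2 = 1 - \frac{SS_{res}}{SS_{tot}}$ with $SS_{res} = \sum_i (y^{(i)} - \hat{y}^{(i)})^2$ and $SS_{tot} = \sum_i (y^{(i)} - \bar{y})^2$. This sketch checks hand-written versions against scikit-learn on a tiny made-up example.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)

def r2(y_true, y_pred):
    ss_res = np.sum((y_true - y_pred) ** 2)             # residual sum of squares
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)    # total sum of squares
    return 1 - ss_res / ss_tot

y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 7.0, 8.0])
print(mse(y_true, y_pred), mean_squared_error(y_true, y_pred))
print(r2(y_true, y_pred), r2_score(y_true, y_pred))
```

For these four points, $SS_{res} = 1.5$ and $SS_{tot} = 20$. This gives MSE $= 1.5/4 = 0.375$ and $R^2 = 1 - 1.5/20 = 0.925$, and the scikit-learn functions agree.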

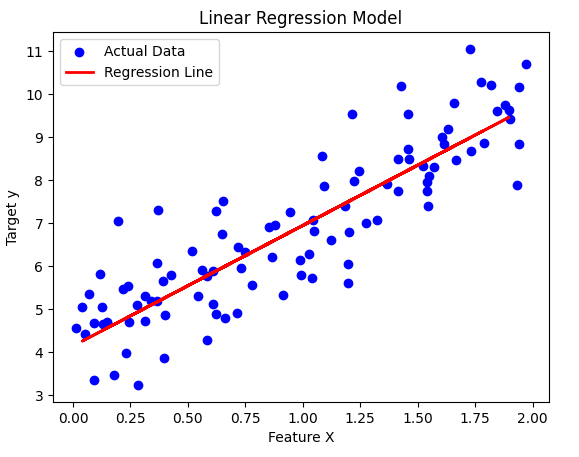

8. Visualizing the Results

Finally, let's plot the data and the regression line.

```python
# Plot the held-out test points and the model's predictions on them
plt.scatter(X_test, y_test, color="blue", label="Actual Data")
plt.plot(X_test, y_pred, color="red", linewidth=2, label="Regression Line")
plt.xlabel("Feature X")
plt.ylabel("Target y")
plt.title("Linear Regression Model")
plt.legend()
plt.show()
```

This plot shows:

- Blue points → Actual test data

- Red line → Best-fit regression line

3. Multiple Linear Regression with Scikit-Learn

What is Multiple Linear Regression?

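Multiple Linear Regression is an extension of simple linear regression where we predict a dependent variable ($y$) using multiple independent variables ($x_1, x_2, \dots, x_n$). The general form of the equation is:

$$\hat{y} = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \dots + \theta_n x_n$$

Where:

- $\hat{y}$ = predicted output

- $x_1, \dots, x_n$ = independent variables (features)

- $\theta_0$ = intercept

- $\theta_1, \dots, \theta_n$ = coefficients (weights)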

In this section, we will:

- Generate a synthetic dataset for a multiple linear regression model.

- Train a model using Scikit-Learn.

- Visualize the relationship in a 3D plot.

Step 1: Generate a Synthetic Dataset

First, let's create a dataset with two independent variables ($x_1$ and $x_2$) and one dependent variable ($y$). We'll add some noise to make it more realistic.

```python
import numpy as np
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # registers the 3D projection on older Matplotlib versions
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Set seed for reproducibility
np.random.seed(42)

# Generate random data for x1 and x2
x1 = np.random.uniform(0, 10, 100)
x2 = np.random.uniform(0, 10, 100)

# Define the true equation y = 3 + 2*x1 + 1.5*x2 + noise
y = 3 + 2*x1 + 1.5*x2 + np.random.normal(0, 2, 100)

# Stack x1 and x2 into a (100, 2) feature matrix for model training
X = np.column_stack((x1, x2))
```

Step 2: Train the Model

Now, we split the dataset into training and test sets and train a multiple linear regression model.

```python
# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model
model = LinearRegression()
model.fit(X_train, y_train)

# Get model parameters
theta0 = model.intercept_
theta1, theta2 = model.coef_

print(f"Model equation: y = {theta0:.2f} + {theta1:.2f}*x1 + {theta2:.2f}*x2")
```

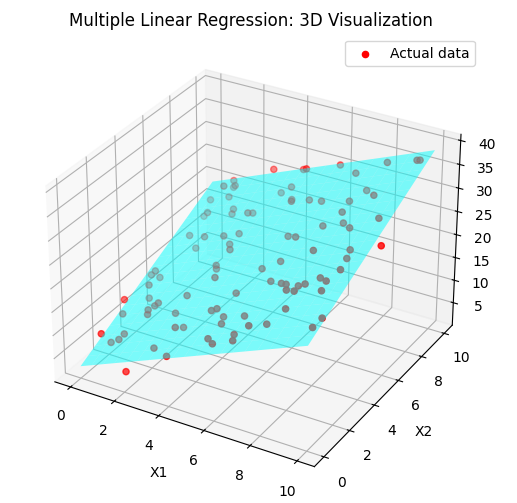

Step 3: Visualize the Regression Plane

Since we have two independent variables ($x_1$ and $x_2$), we can plot the regression plane in 3D space.

```python
# Generate grid for x1 and x2
x1_range = np.linspace(0, 10, 20)
x2_range = np.linspace(0, 10, 20)
x1_grid, x2_grid = np.meshgrid(x1_range, x2_range)

# Compute predicted y values
y_pred_grid = theta0 + theta1 * x1_grid + theta2 * x2_grid

# Create 3D plot
fig = plt.figure(figsize=(10, 6))
ax = fig.add_subplot(111, projection='3d')

# Scatter plot of real data
ax.scatter(x1, x2, y, color='red', label='Actual data')

# Regression plane
ax.plot_surface(x1_grid, x2_grid, y_pred_grid, alpha=0.5, color='cyan')

# Labels
ax.set_xlabel('X1')
ax.set_ylabel('X2')
ax.set_zlabel('Y')
ax.set_title('Multiple Linear Regression: 3D Visualization')

plt.legend()
plt.show()
```

Key Takeaways

- We generated a dataset with two independent variables and one dependent variable.

- We trained a Multiple Linear Regression model using Scikit-Learn.

- We visualized the regression plane in 3D, showing how $x_1$ and $x_2$ influence $y$.

4. Polynomial Regression with Scikit-Learn

Polynomial Regression is an extension of Linear Regression, where we introduce polynomial terms to capture non-linear relationships in the data.

1. What is Polynomial Regression?

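Linear regression models relationships using a straight line:

$$y = \theta_0 + \theta_1 x$$

However, if the data follows a non-linear pattern, a straight line won't fit well. Instead, we can introduce polynomial terms:

$$y = \theta_0 + \theta_1 x + \theta_2 x^2 + \dots + \theta_d x^d$$

This allows the model to capture curvature in the data. The model is still linear in the parameters $\theta$, so ordinary linear regression can be fitted on the expanded features.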

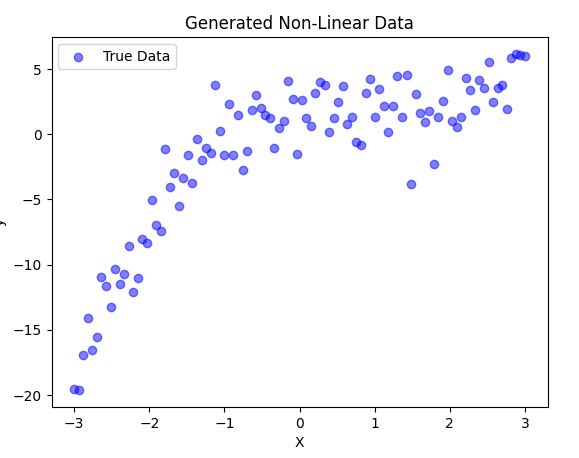

2. Generating Non-Linear Data

First, let's create a synthetic dataset with a non-linear relationship.

```python
import numpy as np
import matplotlib.pyplot as plt

# Generate 100 evenly spaced x values between -3 and 3
np.random.seed(42)
X = np.linspace(-3, 3, 100).reshape(-1, 1)

# Generate a non-linear function with some noise
y = 0.5 * X**3 - X**2 + 2 + np.random.randn(100, 1) * 2

# Scatter plot of the data
plt.scatter(X, y, color='blue', alpha=0.5, label="True Data")
plt.xlabel("X")
plt.ylabel("y")
plt.title("Generated Non-Linear Data")
plt.legend()
plt.show()
```

- We create 100 evenly spaced points between -3 and 3.

- The target follows the cubic equation $y = 0.5x^3 - x^2 + 2$, with added Gaussian noise (standard deviation 2).

- We visualize the data using a scatter plot.

3. Applying Polynomial Features

To transform our linear features into polynomial features, we use PolynomialFeatures from sklearn.preprocessing.

```python
from sklearn.preprocessing import PolynomialFeatures

# Transform X into polynomial features (degree=3)
poly = PolynomialFeatures(degree=3)
X_poly = poly.fit_transform(X)

print(f"Original X shape: {X.shape}")
print(f"Transformed X shape: {X_poly.shape}")
print(f"First 5 rows of X_poly:\n{X_poly[:5]}")
```

- We use `PolynomialFeatures(degree=3)` to add polynomial terms up to $x^3$.

- This converts each value $x$ into the feature vector $[1, x, x^2, x^3]$. The leading 1 is a bias column, included by default (`include_bias=True`).

- We print the new shape, (100, 1) → (100, 4), and the first few transformed rows.

4. Training a Polynomial Regression Model

Now, we train a Linear Regression model using these polynomial features.

```python
from sklearn.linear_model import LinearRegression

# Train polynomial regression model
model = LinearRegression()
model.fit(X_poly, y)

# Predictions
y_pred = model.predict(X_poly)
```

5. Visualizing the Results

Let's plot the polynomial regression model against the actual data.

```python
plt.scatter(X, y, color='blue', alpha=0.5, label="True Data")
plt.plot(X, y_pred, color='red', linewidth=2, label="Polynomial Regression Fit")
plt.xlabel("X")
plt.ylabel("y")
plt.title("Polynomial Regression Model")
plt.legend()
plt.show()
```

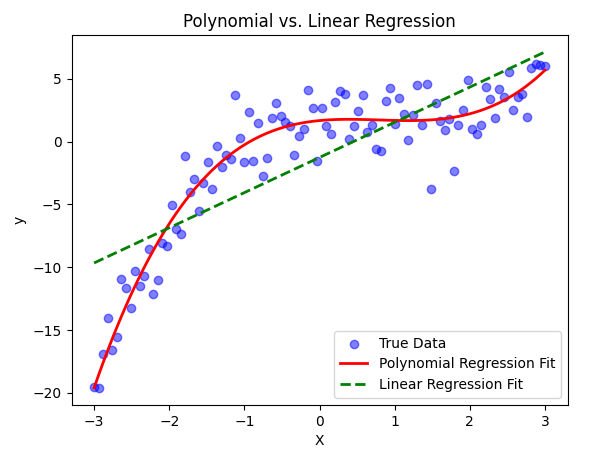

6. Comparing with Linear Regression

Now, let's compare Polynomial Regression with a simple Linear Regression model.

```python
# Train a simple Linear Regression model
linear_model = LinearRegression()
linear_model.fit(X, y)
y_linear_pred = linear_model.predict(X)

# Plot both models
plt.scatter(X, y, color='blue', alpha=0.5, label="True Data")
plt.plot(X, y_pred, color='red', linewidth=2, label="Polynomial Regression Fit")
plt.plot(X, y_linear_pred, color='green', linestyle="dashed", linewidth=2, label="Linear Regression Fit")
plt.xlabel("X")
plt.ylabel("y")
plt.title("Polynomial vs. Linear Regression")
plt.legend()
plt.show()
```
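To put numbers on the visual comparison, this sketch refits both models and compares their mean squared errors. Wrapping `PolynomialFeatures` and `LinearRegression` in `make_pipeline` (our choice here) means new inputs do not have to be transformed by hand before calling `predict`. Note that these are training-set errors. On the data it was fitted to, the cubic model is guaranteed to do at least as well as the linear one, since it contains the linear model as a special case.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Same synthetic cubic data as above
np.random.seed(42)
X = np.linspace(-3, 3, 100).reshape(-1, 1)
y = 0.5 * X**3 - X**2 + 2 + np.random.randn(100, 1) * 2

linear = LinearRegression().fit(X, y)
# The pipeline applies PolynomialFeatures before LinearRegression on both fit and predict,
# so new raw X values can be passed straight to predict()
cubic = make_pipeline(PolynomialFeatures(degree=3), LinearRegression()).fit(X, y)

linear_mse = mean_squared_error(y, linear.predict(X))
cubic_mse = mean_squared_error(y, cubic.predict(X))
print(f"Linear MSE: {linear_mse:.2f}")
print(f"Cubic  MSE: {cubic_mse:.2f}")
print(cubic.predict([[1.5]]))   # prediction for a new raw input x = 1.5
```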

5. Binary Classification with Logistic Regression

Logistic Regression is a fundamental algorithm used for binary classification problems. It estimates the probability that a given input belongs to a particular class using the sigmoid function.

1. What is Logistic Regression?

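Unlike Linear Regression, which predicts continuous values, Logistic Regression predicts probabilities and then maps them to class labels (0 or 1). The model is defined as:

$$f_{\mathbf{w},b}(\mathbf{x}) = g(\mathbf{w} \cdot \mathbf{x} + b) = \frac{1}{1 + e^{-(\mathbf{w} \cdot \mathbf{x} + b)}}$$

Where:

- $\mathbf{w}$ and $b$ are the model parameters (weights and bias).

- $\mathbf{x}$ represents the input features.

- $g(z) = \frac{1}{1 + e^{-z}}$ is the sigmoid function, so the output is a probability between 0 and 1.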
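A minimal NumPy sketch of this model, $f_{\mathbf{w},b}(\mathbf{x}) = g(\mathbf{w} \cdot \mathbf{x} + b)$ with the sigmoid $g$. The weights w and bias b are arbitrary illustrative values, not learned ones, and the function name logistic_model is chosen here for the example.

```python
import numpy as np

def sigmoid(z):
    """Map any real number (or array) into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def logistic_model(x, w, b):
    """Logistic regression: f_wb(x) = sigmoid(w . x + b)."""
    return sigmoid(np.dot(x, w) + b)

# Hypothetical parameters for two features
w = np.array([0.8, -0.4])
b = -0.5

x_example = np.array([2.0, 1.0])
p = logistic_model(x_example, w, b)  # w . x + b = 1.6 - 0.4 - 0.5 = 0.7
label = int(p >= 0.5)                # threshold the probability at 0.5

print(f"P(y=1 | x) = {p:.3f}, predicted class = {label}")
```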

2. Generating a Synthetic Dataset (Spam Detection Example)

We'll create a synthetic dataset where emails are classified as spam (1) or not spam (0) based on two features:

- Number of suspicious words

- Email length

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

# Generating synthetic data

np.random.seed(42)

num_samples = 200

# Feature 1: Number of suspicious words (randomly chosen values)

suspicious_words = np.random.randint(0, 20, num_samples)

# Feature 2: Email length (in the labeling rule below, longer emails push the score toward spam)

email_length = np.random.randint(20, 300, num_samples)

# Labels: Spam (1) or Not Spam (0)

labels = (suspicious_words + email_length / 50 > 10).astype(int)

# Creating feature matrix

X = np.column_stack((suspicious_words, email_length))

y = labels

# Splitting into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

3. Training the Logistic Regression Model

Now, we train a Logistic Regression model on our dataset.

# Training the model

model = LogisticRegression()

model.fit(X_train, y_train)

# Making predictions

y_pred = model.predict(X_test)

# Evaluating the model

accuracy = accuracy_score(y_test, y_pred)

print(f"Model Accuracy: {accuracy:.2f}")
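model.predict returns hard 0/1 labels. The underlying probabilities come from predict_proba, and for binary LogisticRegression the predicted label is 1 when the spam probability is above 0.5. The sketch below rebuilds the same synthetic dataset so it runs on its own; max_iter=1000 is an added assumption to avoid convergence warnings on the unscaled features.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression

# Rebuild the synthetic spam dataset from above
np.random.seed(42)
num_samples = 200
suspicious_words = np.random.randint(0, 20, num_samples)
email_length = np.random.randint(20, 300, num_samples)
y = (suspicious_words + email_length / 50 > 10).astype(int)
X = np.column_stack((suspicious_words, email_length))
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# max_iter raised (assumption) so lbfgs converges on unscaled features
model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Column 1 of predict_proba is P(spam | x)
p_spam = model.predict_proba(X_test)[:, 1]

# Thresholding at 0.5 reproduces the hard labels from predict()
manual_labels = (p_spam > 0.5).astype(int)
print("First 5 spam probabilities:", np.round(p_spam[:5], 3))
print("Matches model.predict:", np.array_equal(manual_labels, model.predict(X_test)))
```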

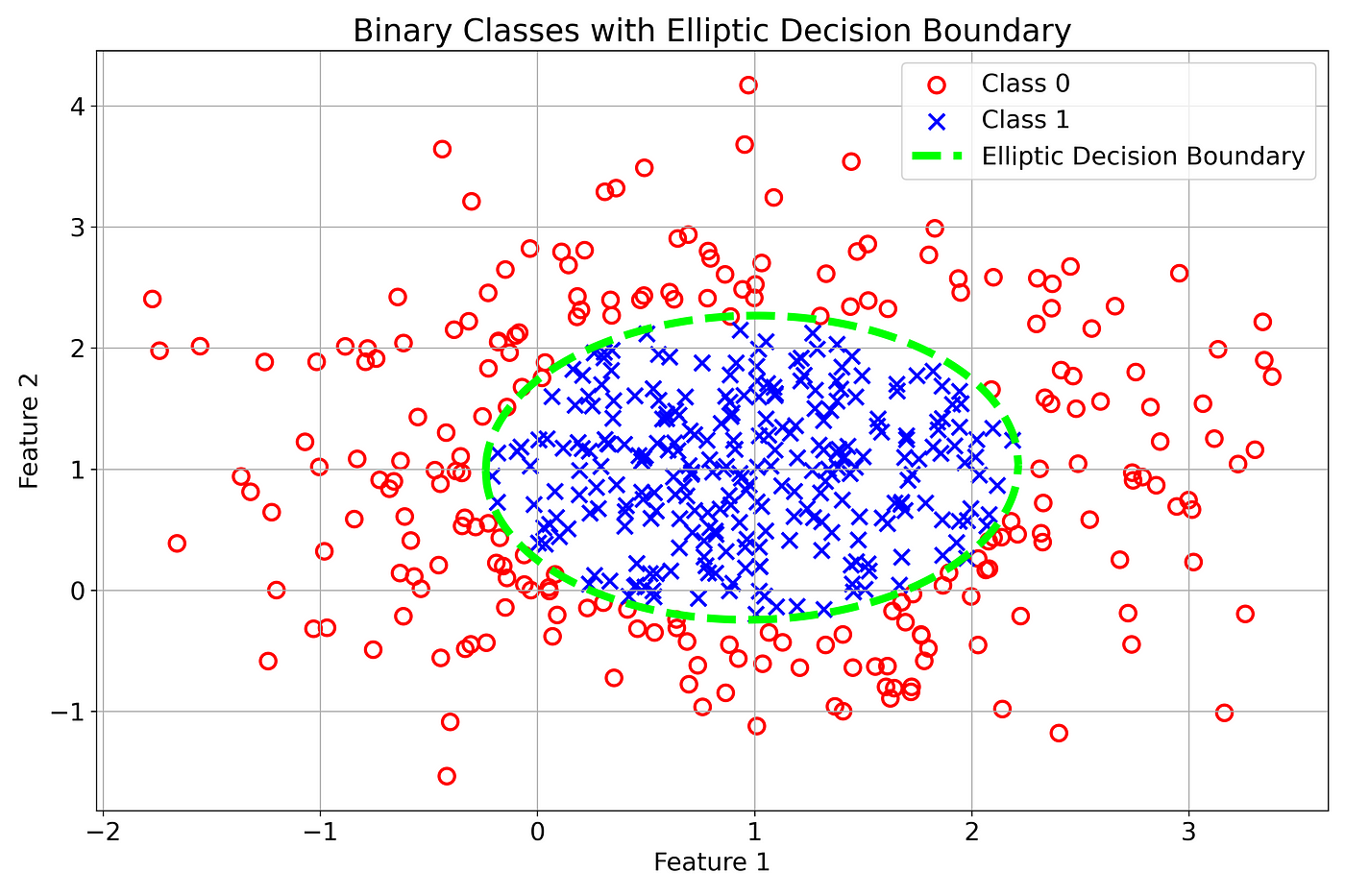

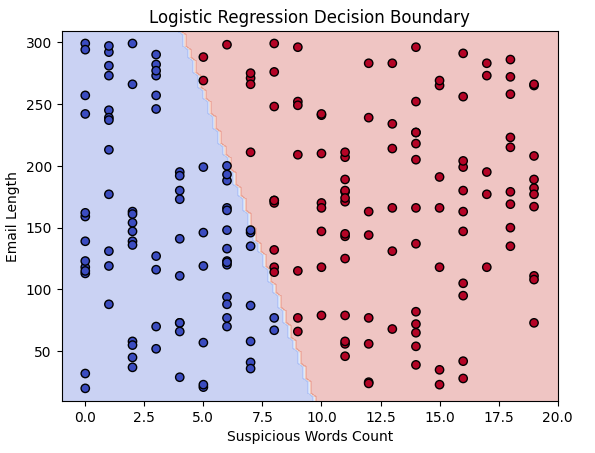

4. Visualizing Decision Boundary

The decision boundary helps us see how the model separates spam from non-spam emails. We plot the boundary in 2D.

# Function to plot decision boundary

def plot_decision_boundary(model, X, y):
    x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    y_min, y_max = X[:, 1].min() - 10, X[:, 1].max() + 10
    xx, yy = np.meshgrid(np.linspace(x_min, x_max, 100),
                         np.linspace(y_min, y_max, 100))
    Z = model.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    plt.contourf(xx, yy, Z, alpha=0.3, cmap=plt.cm.coolwarm)
    plt.scatter(X[:, 0], X[:, 1], c=y, edgecolors='k', cmap=plt.cm.coolwarm)
    plt.xlabel("Suspicious Words Count")
    plt.ylabel("Email Length")
    plt.title("Logistic Regression Decision Boundary")
    plt.show()

# Plotting the decision boundary

plot_decision_boundary(model, X, y)

This plot shows how the model separates spam and non-spam emails using our two features.
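Because the model is linear in its inputs, this boundary is a straight line: the points where $w_1 x_1 + w_2 x_2 + b = 0$, i.e. where the predicted probability is exactly 0.5. Solving for $x_2$ gives $x_2 = -(w_1 x_1 + b) / w_2$, which can be compared with the rule used to generate the labels, $x_2 = 50(10 - x_1)$. A sketch reading $w$ and $b$ from coef_ and intercept_ (dataset rebuilt so the block runs alone; max_iter=1000 is an assumption):

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression

# Rebuild the synthetic spam dataset and split from above
np.random.seed(42)
suspicious_words = np.random.randint(0, 20, 200)
email_length = np.random.randint(20, 300, 200)
X = np.column_stack((suspicious_words, email_length))
y = (suspicious_words + email_length / 50 > 10).astype(int)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Learned parameters of z = w1 * x1 + w2 * x2 + b
w1, w2 = model.coef_[0]
b = model.intercept_[0]

# Boundary: w1 * x1 + w2 * x2 + b = 0  =>  x2 = -(w1 * x1 + b) / w2
words = np.array([0.0, 5.0, 10.0, 15.0])
boundary_length = -(w1 * words + b) / w2
print("Email length on the boundary:", np.round(boundary_length, 1))

# Sanity check: points on this line get a spam probability of ~0.5
on_line = np.column_stack((words, boundary_length))
print(np.round(model.predict_proba(on_line)[:, 1], 3))
```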

Key Takeaways

- Logistic Regression is used for binary classification.

- It estimates probabilities using the sigmoid function.

- We generated a synthetic dataset mimicking spam detection.

- We trained and evaluated a Logistic Regression model.

- Decision boundaries help visualize how the model classifies data.

6. Multi-Class Classification with Logistic Regression

In this section, we will implement a Multi-Class Classification model using Logistic Regression. Instead of a binary classification problem, we will classify data points into three distinct categories.

This project predicts a student's success level based on study hours and past grades using Logistic Regression.

We classify students into three categories:

- Fail (0)

- Pass (1)

- High Pass (2)

Step 1: Import Libraries

We start by importing necessary libraries for:

- Data generation

- Visualization

- Model training

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import ConfusionMatrixDisplay, classification_report

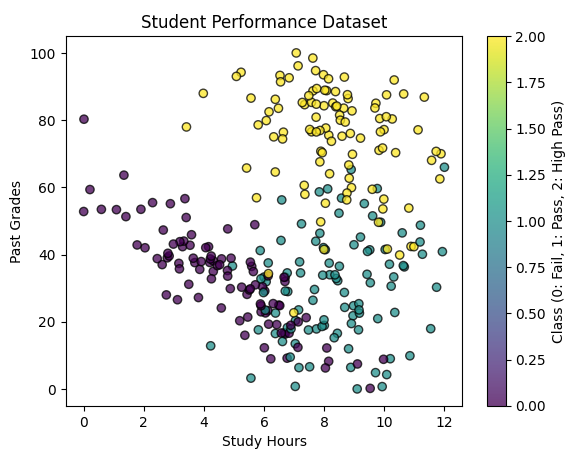

Step 2: Generate Synthetic Data

We create artificial student data using make_classification.

Each student has:

- Past Grades (0-100)

- Study Hours (non-negative)

We set random_state = 457897 to ensure reproducibility.

# Generate a classification dataset

X, y = make_classification(n_samples=300,
                           n_features=2,
                           n_classes=3,
                           n_clusters_per_class=1,
                           n_informative=2,
                           n_redundant=0,
                           random_state=457897)  # Ensures consistent results

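# Rescale with min-max scaling: Study Hours to 0-12 (non-negative) and Past Grades to 0-100

X[:, 0] = (X[:, 0] - X[:, 0].min()) / (X[:, 0].max() - X[:, 0].min()) * 12

X[:, 1] = (X[:, 1] - X[:, 1].min()) / (X[:, 1].max() - X[:, 1].min()) * 100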

# Scatter plot of generated data

plt.figure(figsize=(7, 5))

plt.scatter(X[:, 0], X[:, 1], c=y, cmap='viridis', edgecolors='k', alpha=0.75)

plt.xlabel("Study Hours")

plt.ylabel("Past Grades")

plt.title("Student Performance Dataset")

plt.colorbar(label="Class (0: Fail, 1: Pass, 2: High Pass)")

plt.show()

Step 3: Split the Data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=457897, stratify=y)

# Standardizing features for better model performance

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

Step 4: Train Logistic Regression Model

from sklearn.multiclass import OneVsRestClassifier

# Define and train the model

model = OneVsRestClassifier(LogisticRegression(solver='lbfgs'))

model.fit(X_train, y_train)
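OneVsRestClassifier turns the three-class problem into three binary logistic regressions (each class against all others) and predicts the class whose classifier gives the highest score. A sketch that checks this on the same generated data; the feature rescaling from Step 2 is skipped here because StandardScaler removes any positive per-feature rescaling anyway.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

# Same generator settings as above
X, y = make_classification(n_samples=300, n_features=2, n_classes=3,
                           n_clusters_per_class=1, n_informative=2,
                           n_redundant=0, random_state=457897)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=457897, stratify=y)
scaler = StandardScaler().fit(X_train)
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)

model = OneVsRestClassifier(LogisticRegression(solver='lbfgs')).fit(X_train, y_train)

# One fitted binary classifier per class
print("Binary classifiers:", len(model.estimators_))

# Column k of decision_function is classifier k's score for class k;
# the prediction is the class with the highest score
scores = model.decision_function(X_test)
argmax_pred = model.classes_[np.argmax(scores, axis=1)]
print("Argmax matches predict:", np.array_equal(argmax_pred, model.predict(X_test)))
```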

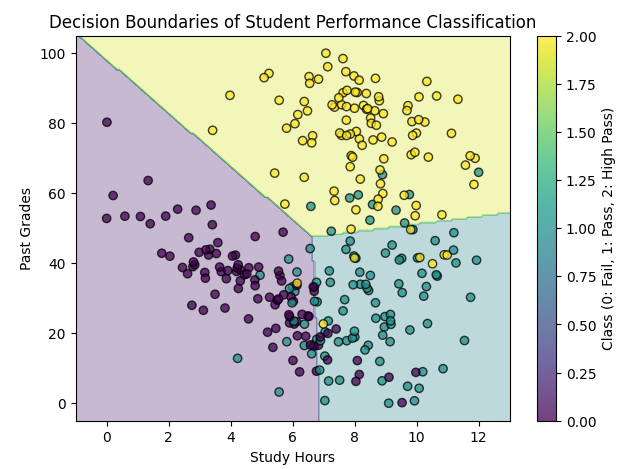

Step 5: Visualizing Decision Boundaries

# Define a mesh grid for visualization

x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1

y_min, y_max = X[:, 1].min() - 5, X[:, 1].max() + 5

xx, yy = np.meshgrid(np.linspace(x_min, x_max, 200),

np.linspace(y_min, y_max, 200))

# Predict on the mesh grid

Z = model.predict(scaler.transform(np.c_[xx.ravel(), yy.ravel()]))

Z = Z.reshape(xx.shape)

# Plot decision boundary

plt.figure(figsize=(7, 5))

plt.contourf(xx, yy, Z, alpha=0.3, cmap="viridis")

plt.scatter(X[:, 0], X[:, 1], c=y, cmap="viridis", edgecolors='k', alpha=0.75)

plt.xlabel("Study Hours")

plt.ylabel("Past Grades")

plt.title("Decision Boundaries of Student Performance Classification")

plt.colorbar(label="Class (0: Fail, 1: Pass, 2: High Pass)")

plt.show()

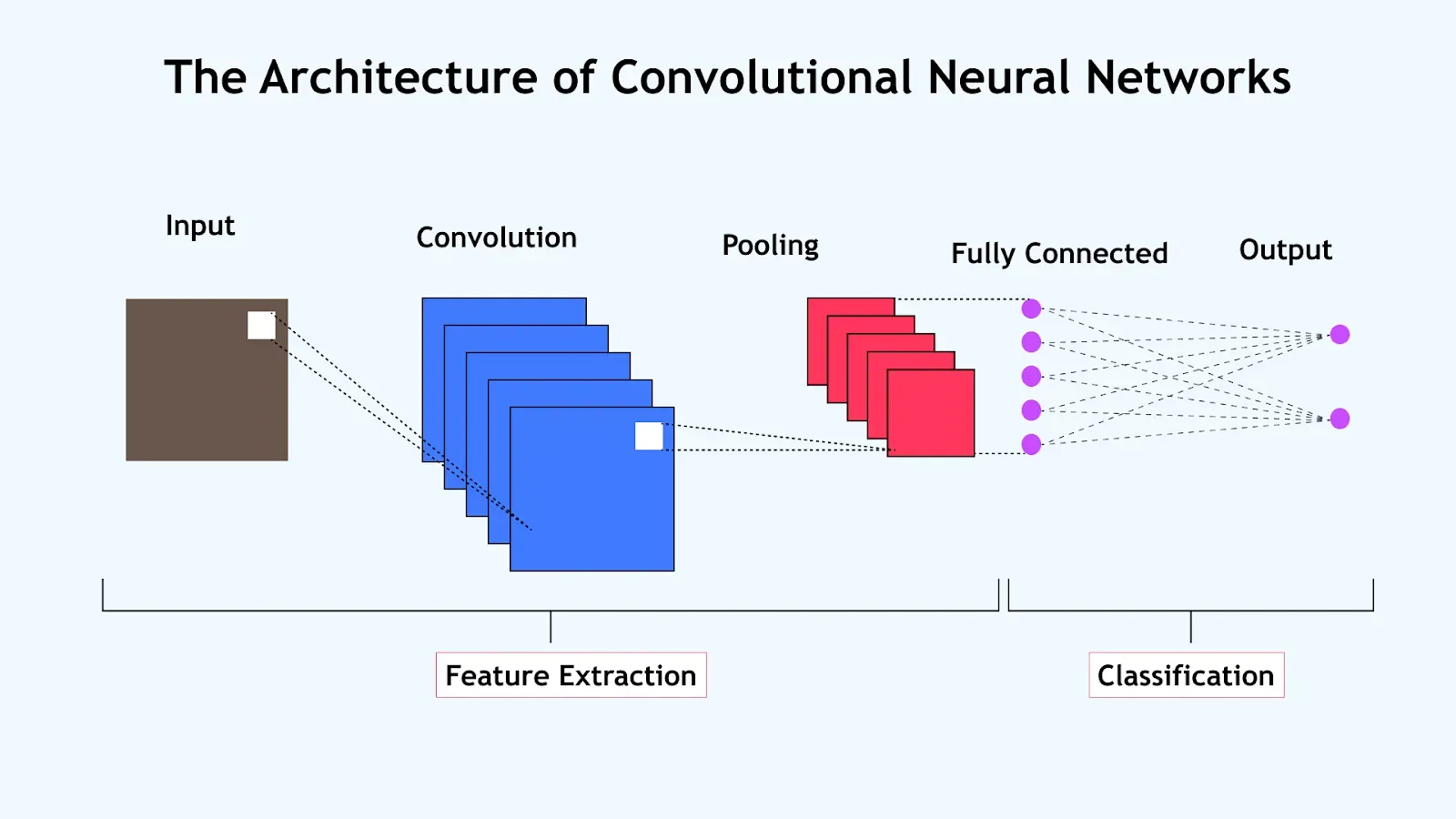
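Step 1 imports classification_report, which the steps above never call. One way to evaluate the model on the held-out test set is sketched below. It uses confusion_matrix instead of ConfusionMatrixDisplay so the block prints rather than plots (a choice made here), and it skips the Step 2 rescaling, which does not affect standardized features.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier
from sklearn.metrics import classification_report, confusion_matrix

# Rebuild data, split, scaler and model as in Steps 2-4
X, y = make_classification(n_samples=300, n_features=2, n_classes=3,
                           n_clusters_per_class=1, n_informative=2,
                           n_redundant=0, random_state=457897)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=457897, stratify=y)
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)
model = OneVsRestClassifier(LogisticRegression(solver='lbfgs')).fit(X_train, y_train)

# Per-class precision, recall and F1 on the held-out 20% of students
y_pred = model.predict(X_test)
print(classification_report(y_test, y_pred, target_names=["Fail", "Pass", "High Pass"]))

# Rows = true class, columns = predicted class (0: Fail, 1: Pass, 2: High Pass)
cm = confusion_matrix(y_test, y_pred)
print(cm)
```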

Neural Networks: Intuition and Model

- Understanding Neural Networks

- Biological Inspiration: The Brain and Synapses

- Importance of Layers in Neural Networks

- Face Recognition Example: Layer-by-Layer Processing

- Mathematical Representation of a Neural Network

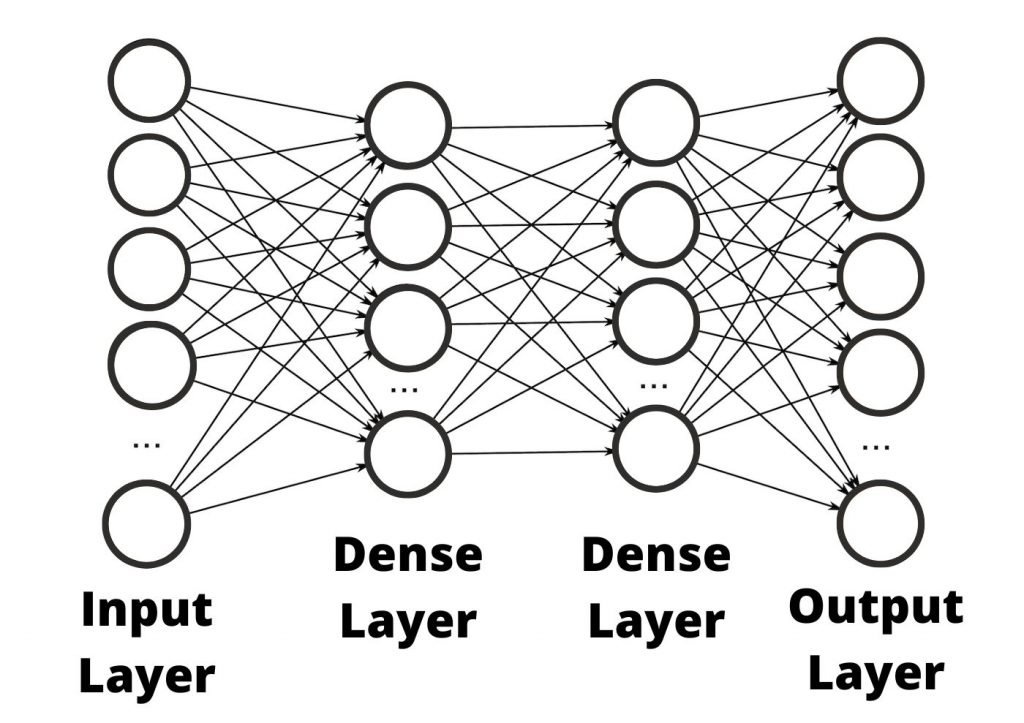

Understanding Neural Networks

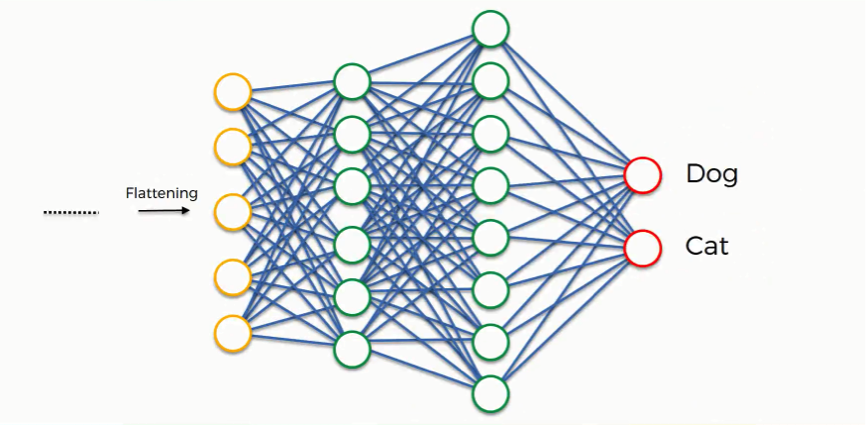

Neural networks are a fundamental concept in deep learning, inspired by the way the human brain processes information. They consist of layers of artificial neurons that transform input data into meaningful outputs. At the core of a neural network is a simple mathematical operation: each neuron receives inputs, computes a weighted sum of them, adds a bias term, and passes the result through an activation function. This process allows the network to learn patterns and make predictions.

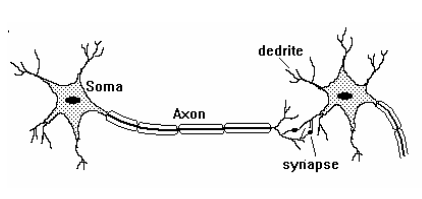

Biological Inspiration: The Brain and Synapses

Artificial neural networks (ANNs) are designed based on the biological structure of the human brain. The brain consists of billions of neurons, interconnected through structures called synapses. Neurons communicate with each other by transmitting electrical and chemical signals, which play a critical role in learning, memory, and decision-making processes.

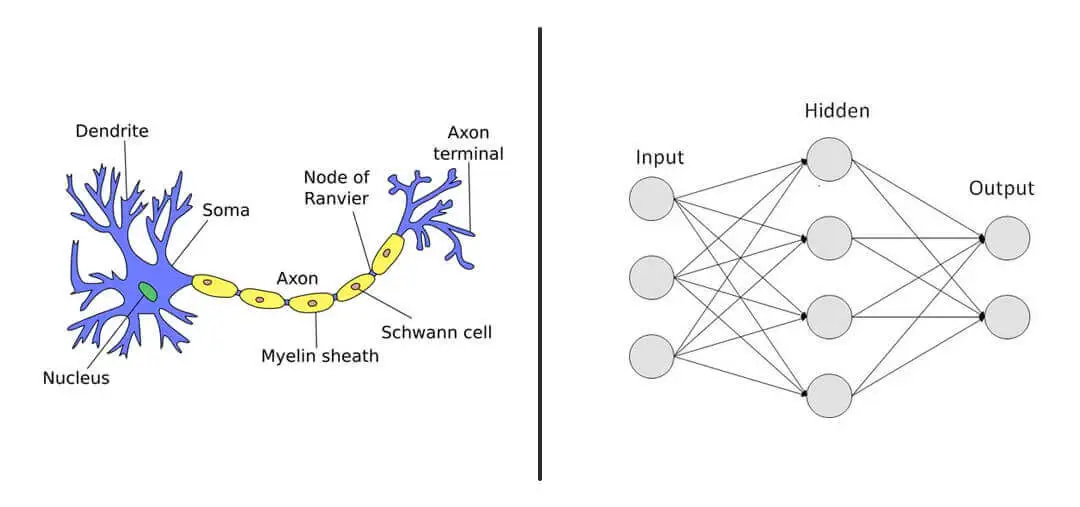

Structure of a Biological Neuron

Each biological neuron consists of several key components:

- Dendrites: Receive input signals from other neurons.

- Cell Body (Soma): Processes the received signals and determines whether the neuron should be activated.

- Axon: Transmits the output signal to other neurons.

- Synapses: Junctions between neurons where chemical neurotransmitters facilitate communication.

Artificial Neural Networks vs. Biological Networks

In artificial neural networks:

- Neurons function as computational units.

- Weights correspond to synaptic strengths, determining how influential an input is.

- Bias terms help shift the activation threshold.

- Activation functions mimic the way biological neurons fire only when certain thresholds are exceeded.

Importance of Layers in Neural Networks

Neural networks are composed of multiple layers, each responsible for extracting and processing features from input data. The more layers a network has, the deeper it becomes, allowing it to learn complex hierarchical patterns.

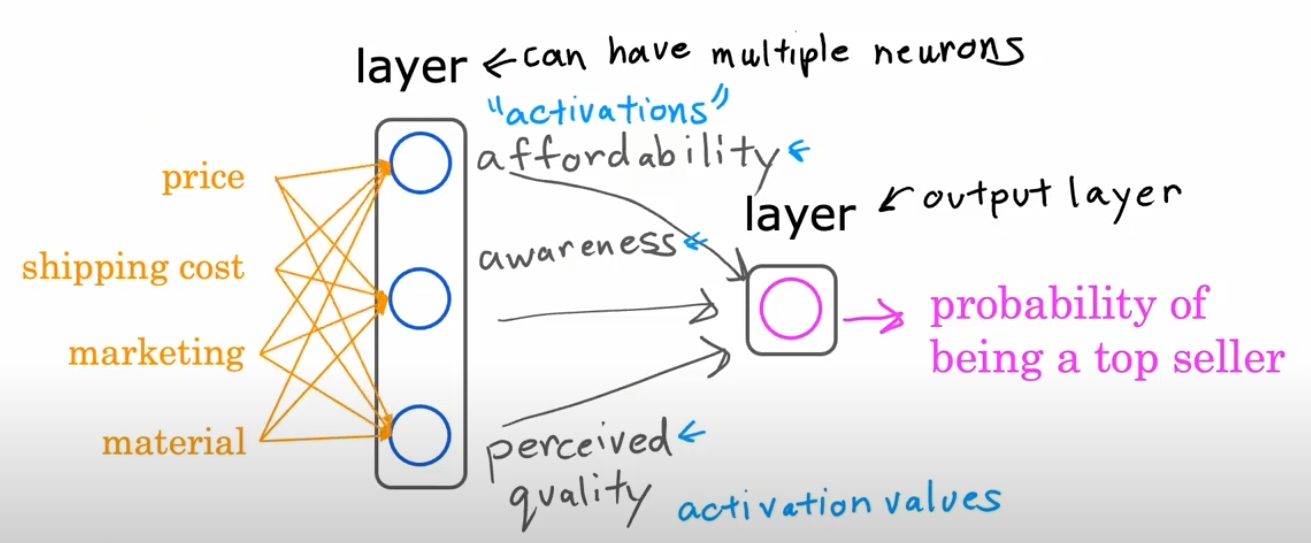

Example: Predicting a T-shirt's Top-Seller Status

Consider an online clothing store that wants to predict whether a new T-shirt will become a top-seller. Several factors influence this outcome, which serve as inputs to our neural network:

- Price ($x_1$)

- Shipping Cost ($x_2$)

- Marketing ($x_3$)

- Material ($x_4$)

These inputs are fed into the first layer of the network, which extracts meaningful features. A possible hidden layer structure could be:

- Hidden Layer 1: Contains a few neurons whose activations can be interpreted as affordability, awareness, and perceived quality.

- Output Layer: Aggregates information from the previous layers to make a final prediction.

The output layer applies a sigmoid activation function:

$$a = g(z) = \frac{1}{1 + e^{-z}}$$

where $z$ is a weighted sum of the previous layer's outputs plus a bias. If $a \geq 0.5$, we classify the T-shirt as a top-seller; otherwise, it is not.
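A forward pass through this small network can be sketched in NumPy. The four input values, the weights and the biases are invented for illustration (a trained network would learn them), and the three hidden units only loosely correspond to affordability, awareness and perceived quality.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical inputs: price, shipping cost, marketing, material (already scaled)
x = np.array([0.2, 0.1, 0.9, 0.7])

# Hidden layer: 3 units x 4 inputs (made-up weights)
W1 = np.array([[-2.0, -1.5, 0.0, 0.0],   # ~ affordability: low price and shipping help
               [0.0, 0.0, 2.5, 0.0],     # ~ awareness: driven by marketing
               [0.5, 0.0, 0.0, 2.0]])    # ~ perceived quality: price and material
b1 = np.array([1.0, -1.0, -1.0])
a1 = sigmoid(W1 @ x + b1)

# Output layer: 1 unit combining the 3 hidden activations
W2 = np.array([[1.5, 2.0, 1.8]])
b2 = np.array([-2.5])
a2 = sigmoid(W2 @ a1 + b2)

print("Hidden activations:", np.round(a1, 3))
print(f"P(top-seller) = {a2[0]:.3f} ->", "top-seller" if a2[0] >= 0.5 else "not a top-seller")
```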

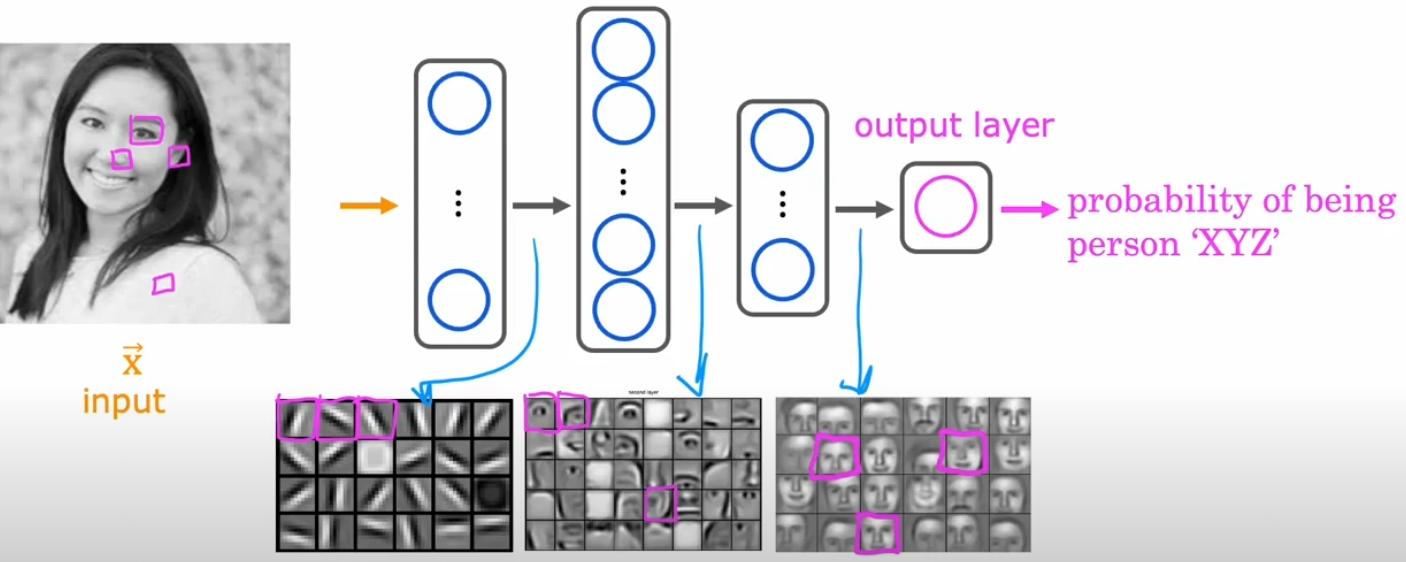

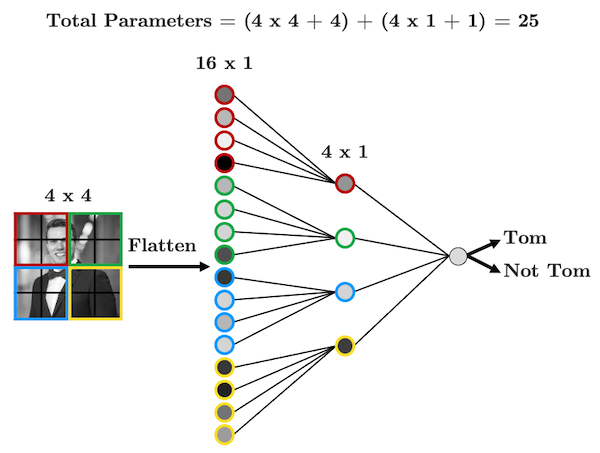

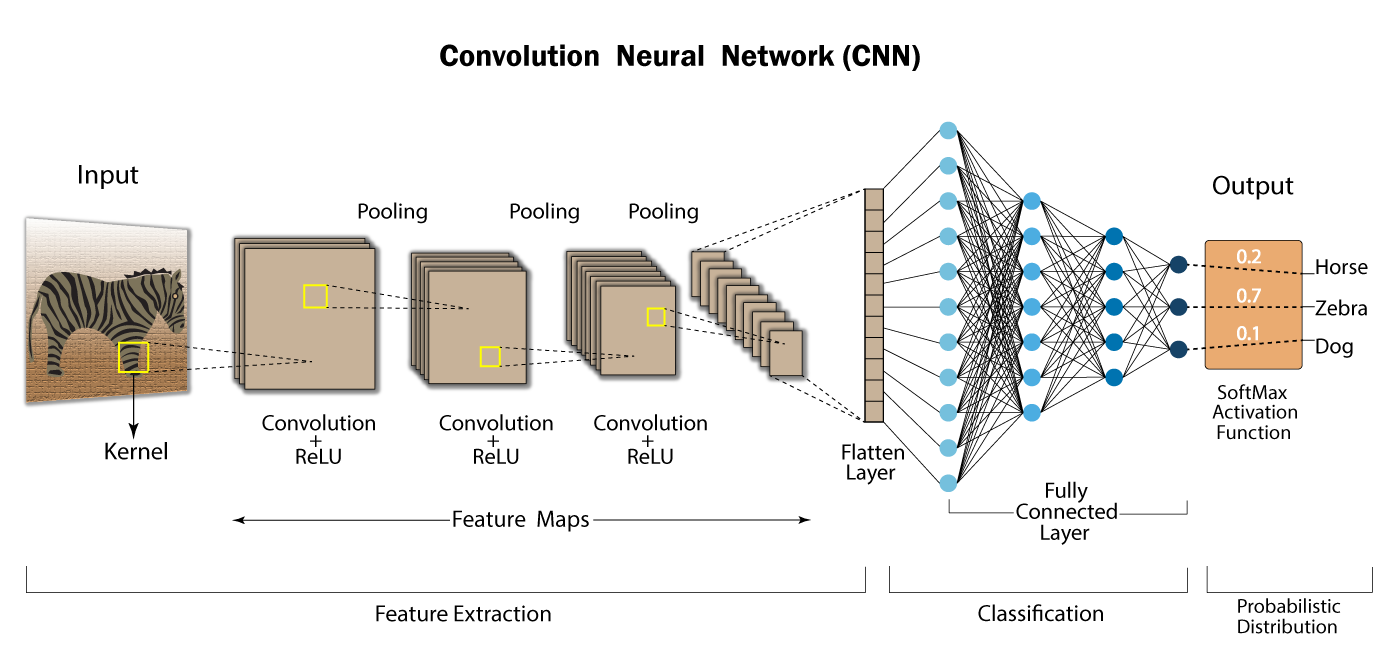

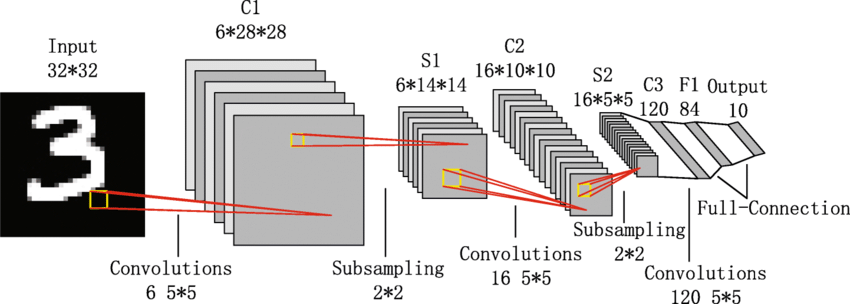

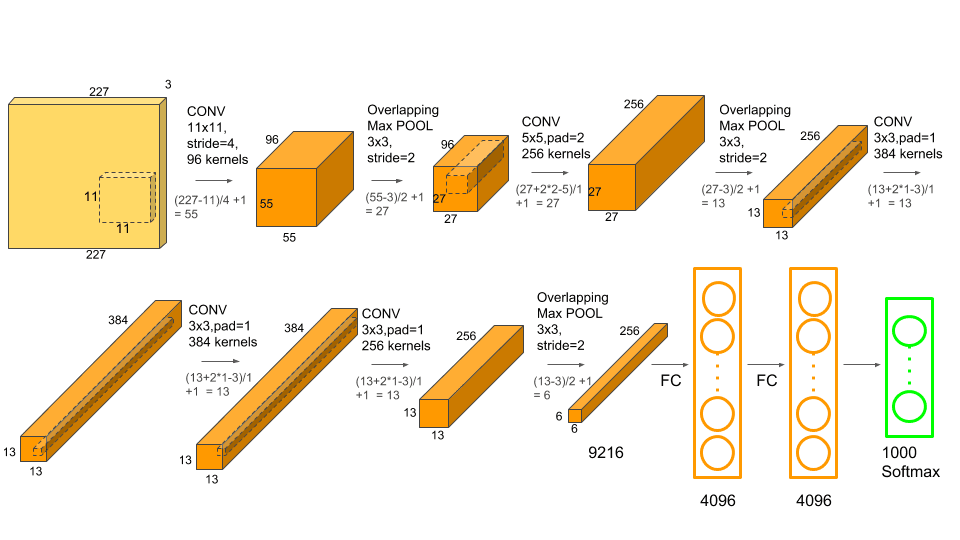

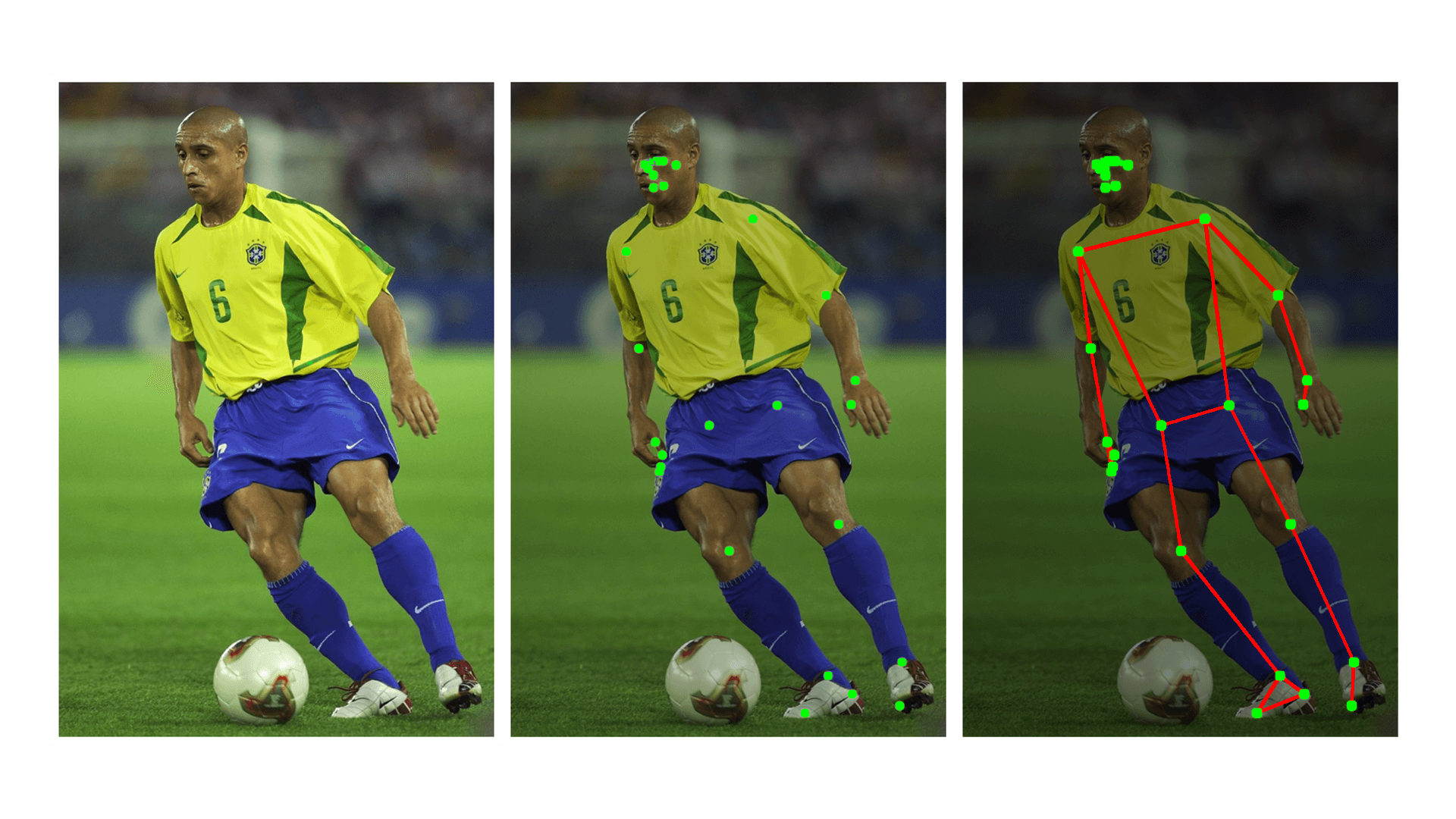

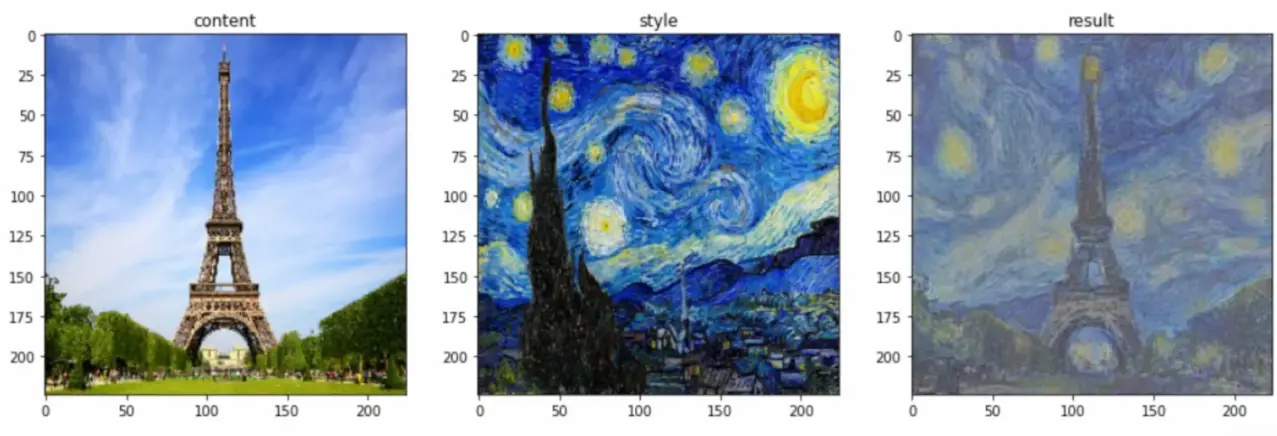

Face Recognition Example: Layer-by-Layer Processing

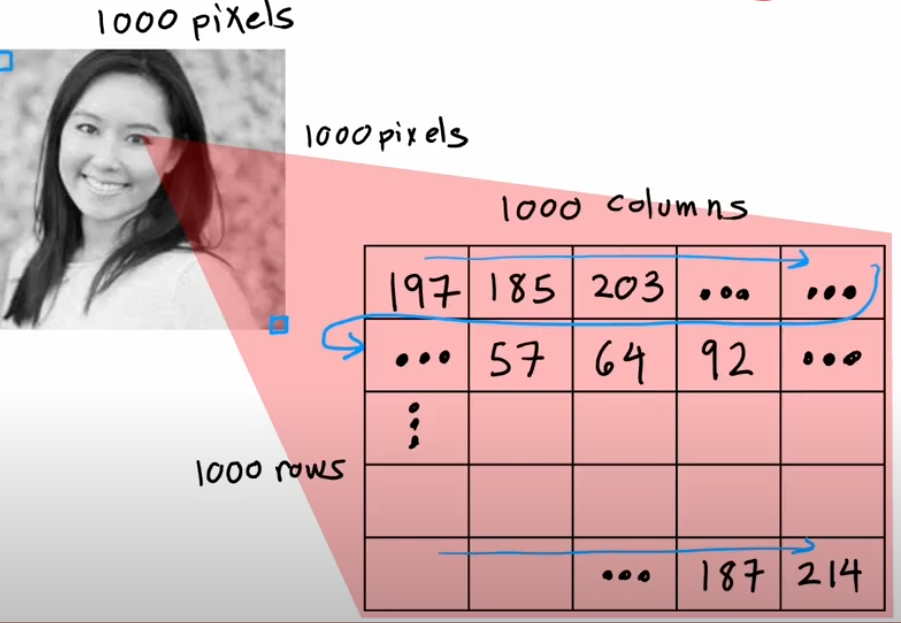

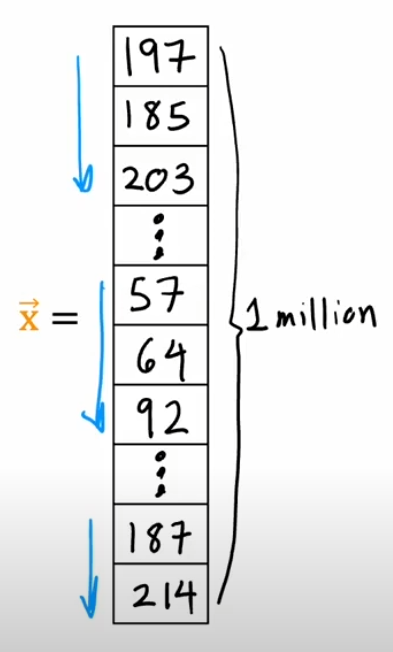

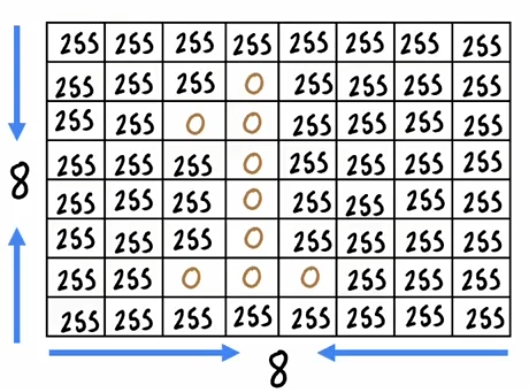

Face recognition is a real-world example where neural networks excel. Let's consider a deep neural network designed for face recognition, breaking down the processing step by step:

- Input Layer: An image of a face is converted into pixel values (e.g., a 100x100 grayscale image would be represented as a vector of 10,000 pixel values).

- First Hidden Layer: Detects basic edges and corners in the image by applying simple filters.

- Second Hidden Layer: Identifies facial features like eyes, noses, and mouths by combining edge and corner information.

- Third Hidden Layer: Recognizes entire facial structures and relationships between features.

- Output Layer: Determines whether the face matches a known identity by producing a probability score.
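The input-layer step is a reshape. A sketch with a random array standing in for a real 100x100 grayscale face image (the random pixels and the scaling to [0, 1] are illustrative assumptions):

```python
import numpy as np

# Placeholder for a 100x100 grayscale image with pixel intensities 0-255
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(100, 100))

# Flatten row by row into one input vector of 10,000 values,
# scaled to [0, 1] as is common before feeding a network
x = image.reshape(-1) / 255.0

print(image.shape, "->", x.shape)   # (100, 100) -> (10000,)
```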

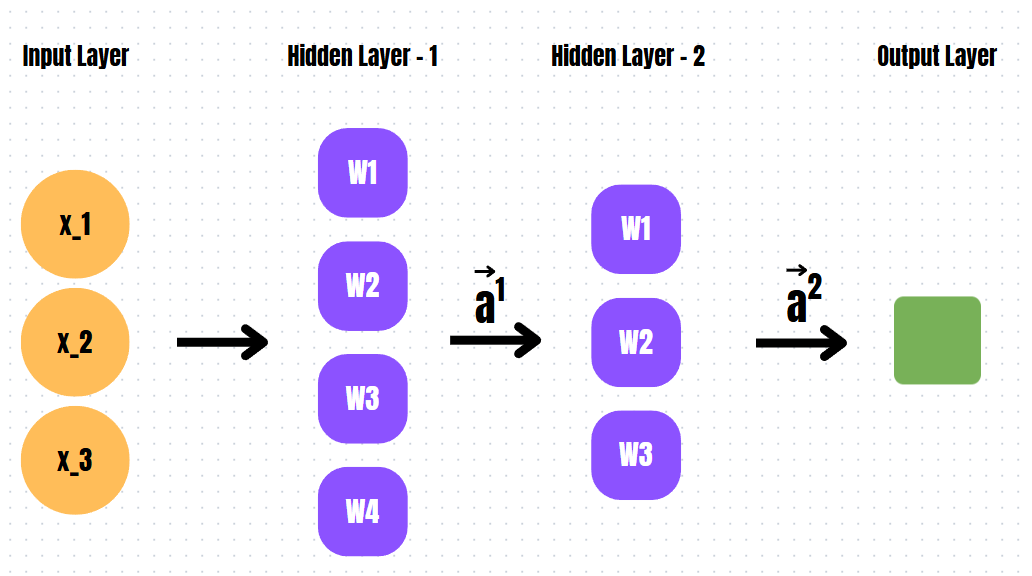

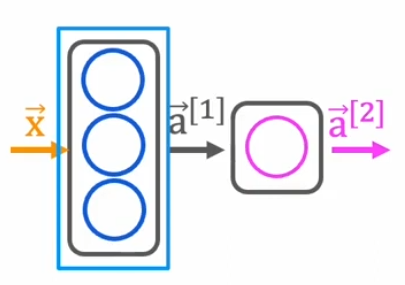

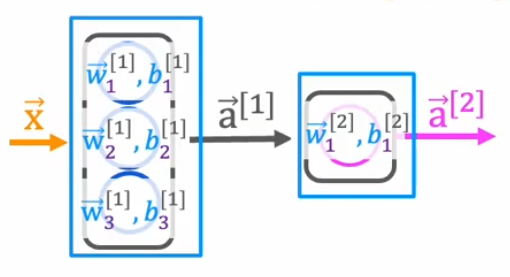

Mathematical Representation of a Neural Network

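To efficiently compute activations in a neural network, we use matrix notation. The general formula for forward propagation in layer $l$ is:

$$\mathbf{z}^{[l]} = W^{[l]} \mathbf{a}^{[l-1]} + \mathbf{b}^{[l]}$$

where:

- $\mathbf{a}^{[l-1]}$ is the activation from the previous layer (with $\mathbf{a}^{[0]} = \mathbf{x}$, the input),

- $W^{[l]}$ is the weight matrix of the current layer,

- $\mathbf{b}^{[l]}$ is the bias vector,

- $\mathbf{z}^{[l]}$ is the linear combination of inputs before applying the activation function.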

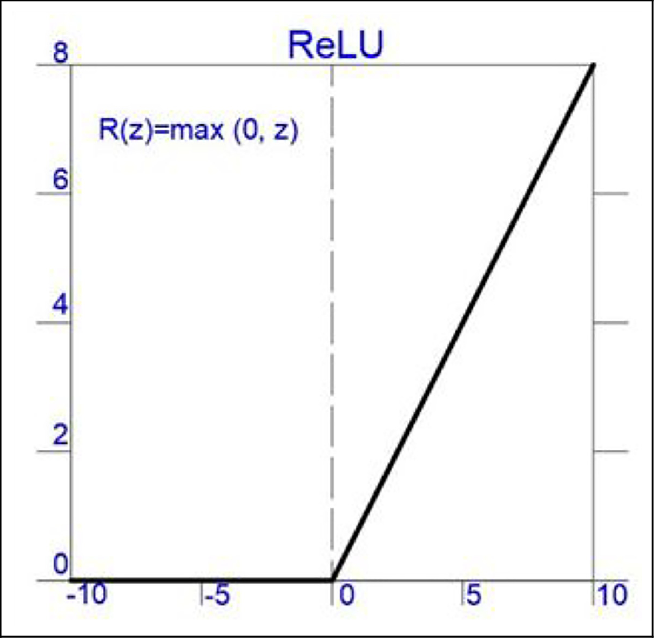

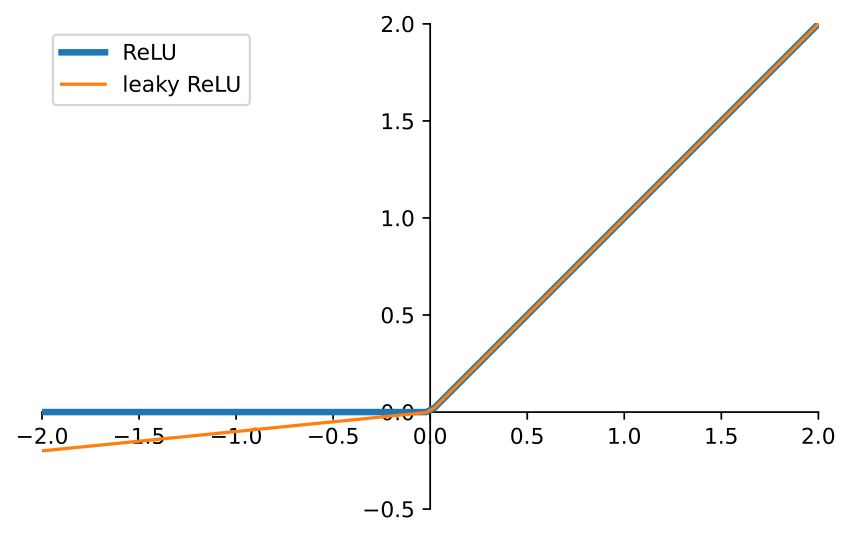

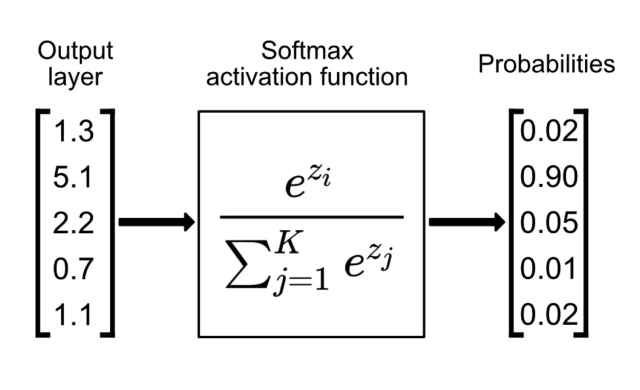

The activation function is applied as:

$$\mathbf{a}^{[l]} = g(\mathbf{z}^{[l]})$$

where $g$ is typically a sigmoid, ReLU, or softmax function.
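These two equations are all a single layer computes. A minimal NumPy sketch of one layer follows. The function name dense and the column-vector convention (a has shape (n_in, 1), W has shape (n_out, n_in)) are choices made here to match the formula, and the numbers are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    return np.maximum(0, z)

def dense(a_prev, W, b, g=sigmoid):
    """One layer of forward propagation: z = W a_prev + b, a = g(z).

    a_prev: (n_in, 1) activations from layer l-1
    W:      (n_out, n_in) weight matrix of layer l
    b:      (n_out, 1) bias vector of layer l
    """
    z = W @ a_prev + b
    return g(z)

# Tiny illustrative example: 3 inputs -> 2 units
a0 = np.array([[1.0], [0.5], [-1.0]])
W = np.array([[0.2, -0.3, 0.5],
              [0.4, 0.1, -0.2]])
b = np.array([[0.1], [-0.1]])

print(dense(a0, W, b))          # sigmoid activations, shape (2, 1)
print(dense(a0, W, b, g=relu))  # same z = [-0.35, 0.55], ReLU instead
```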

Example Calculation

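Suppose we have a single-layer neural network with three inputs and one neuron. We define the inputs as:

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}$$

The corresponding weight matrix and bias term are given by:

$$W = \begin{bmatrix} w_1 & w_2 & w_3 \end{bmatrix}, \quad b$$

The weighted sum ($z$) is calculated as:

$$z = W\mathbf{x} + b = w_1 x_1 + w_2 x_2 + w_3 x_3 + b$$

Applying the sigmoid activation function:

$$a = g(z) = \frac{1}{1 + e^{-z}}$$

If the output $a$ is above 0.5, we classify this case as positive.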
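A worked instance of this single-neuron computation, using hypothetical values chosen for illustration: $\mathbf{x} = [1, 2, 3]^T$, $W = [0.2, -0.1, 0.4]$ and $b = 0.1$.

```python
import numpy as np

# Hypothetical values for a 3-input, 1-neuron layer
x = np.array([1.0, 2.0, 3.0])     # three inputs
W = np.array([0.2, -0.1, 0.4])    # one neuron -> one row of weights
b = 0.1

z = W @ x + b                     # 0.2 - 0.2 + 1.2 + 0.1 = 1.3
a = 1.0 / (1.0 + np.exp(-z))      # sigmoid(1.3)

print(f"z = {z:.2f}, a = {a:.3f}, class = {int(a >= 0.5)}")
# z = 1.30, a = 0.786, class = 1
```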

Two Hidden Layer Neural Network Calculation

Now, let's consider a neural network with two hidden layers.

Network Structure

- Input Layer: 3 input values

- First Hidden Layer: 4 neurons

- Second Hidden Layer: 3 neurons

- Output Layer: 1 neuron

First Hidden Layer Calculation

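Given input vector:

$$\mathbf{x} = \mathbf{a}^{[0]} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}$$

Weight matrix for the first hidden layer (4 neurons × 3 inputs):

$$W^{[1]} \in \mathbb{R}^{4 \times 3}$$

Bias vector:

$$\mathbf{b}^{[1]} \in \mathbb{R}^{4 \times 1}$$

Computing the weighted sum:

$$\mathbf{z}^{[1]} = W^{[1]} \mathbf{x} + \mathbf{b}^{[1]}$$

Applying the sigmoid activation function:

$$\mathbf{a}^{[1]} = g(\mathbf{z}^{[1]})$$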

Second Hidden Layer Calculation

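Weight matrix (3 neurons × 4 activations from the first hidden layer):

$$W^{[2]} \in \mathbb{R}^{3 \times 4}$$

Bias vector:

$$\mathbf{b}^{[2]} \in \mathbb{R}^{3 \times 1}$$

Computing the weighted sum:

$$\mathbf{z}^{[2]} = W^{[2]} \mathbf{a}^{[1]} + \mathbf{b}^{[2]}$$

Applying the sigmoid activation function:

$$\mathbf{a}^{[2]} = g(\mathbf{z}^{[2]})$$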

Output Layer Calculation

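Weight matrix (1 neuron × 3 activations from the second hidden layer):

$$W^{[3]} \in \mathbb{R}^{1 \times 3}$$

Bias:

$$b^{[3]} \in \mathbb{R}$$

Computing the final weighted sum:

$$z^{[3]} = W^{[3]} \mathbf{a}^{[2]} + b^{[3]}$$

Applying the sigmoid activation function:

$$a^{[3]} = g(z^{[3]}) = \frac{1}{1 + e^{-z^{[3]}}}$$

If $a^{[3]} \geq 0.5$, the output is classified as positive.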
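The three layer computations chain into one forward pass. A sketch with randomly initialized weights and zero biases (the seed, initialization and input values are arbitrary assumptions, so the output probability itself carries no meaning); it mainly shows how the 4×3, 3×4 and 1×3 weight matrices line up.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(42)

# Layer sizes: 3 inputs -> 4 -> 3 -> 1
sizes = [3, 4, 3, 1]
# W[l] has shape (n_out, n_in); b[l] has shape (n_out, 1)
weights = [rng.normal(0, 1, (n_out, n_in)) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros((n_out, 1)) for n_out in sizes[1:]]

x = np.array([[0.5], [-1.2], [2.0]])  # arbitrary input column vector

a = x
for layer, (W, b) in enumerate(zip(weights, biases), start=1):
    z = W @ a + b          # z[l] = W[l] a[l-1] + b[l]
    a = sigmoid(z)         # a[l] = g(z[l])
    print(f"Layer {layer}: W {W.shape}, a {a.shape}")

prob = a.item()
print(f"Output a[3] = {prob:.3f} ->", "positive" if prob >= 0.5 else "negative")
```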

Conclusion

- The first hidden layer extracts basic features.

- The second hidden layer learns more abstract representations.