Neural Networks: Intuition and Model

- Understanding Neural Networks

- Biological Inspiration: The Brain and Synapses

- Importance of Layers in Neural Networks

- Face Recognition Example: Layer-by-Layer Processing

- Mathematical Representation of a Neural Network

Understanding Neural Networks

Neural networks are a fundamental concept in deep learning, inspired by the way the human brain processes information. They consist of layers of artificial neurons that transform input data into meaningful outputs. At the core of a neural network is a simple mathematical operation: each neuron receives inputs, computes a weighted sum of them, adds a bias term, and passes the result through an activation function. By adjusting these weights and biases during training, the network learns patterns that let it make predictions.
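The single-neuron operation just described can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a sigmoid activation; the helper names `sigmoid` and `neuron` and the example numbers are our own, not part of any library.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, plus a bias, through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Hypothetical inputs, weights, and bias chosen only for illustration.
print(neuron([1.0, 2.0], [0.5, -0.25], 0.1))
```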

Biological Inspiration: The Brain and Synapses

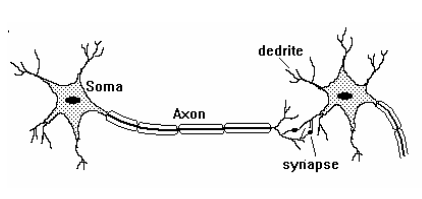

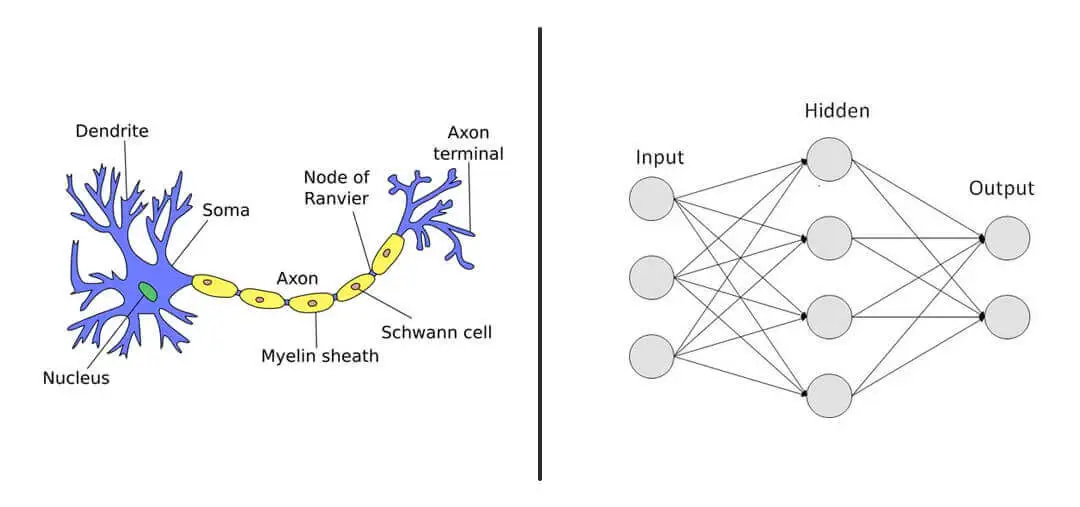

Artificial neural networks (ANNs) are designed based on the biological structure of the human brain. The brain consists of billions of neurons, interconnected through structures called synapses. Neurons communicate with each other by transmitting electrical and chemical signals, which play a critical role in learning, memory, and decision-making processes.

Structure of a Biological Neuron

Each biological neuron consists of several key components:

- Dendrites: Receive input signals from other neurons.

- Cell Body (Soma): Processes the received signals and determines whether the neuron should be activated.

- Axon: Transmits the output signal to other neurons.

- Synapses: Junctions between neurons where chemical neurotransmitters facilitate communication.

Artificial Neural Networks vs. Biological Networks

In artificial neural networks:

- Neurons function as computational units.

- Weights correspond to synaptic strengths, determining how influential an input is.

- Bias terms help shift the activation threshold.

- Activation functions mimic the way biological neurons fire only when certain thresholds are exceeded.
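To make the "fire only above a threshold" analogy concrete, here is a sketch of a neuron with a hard step activation. This is a simplification chosen for illustration (practical networks use smooth activations such as sigmoid or ReLU), and `step_neuron` is a hypothetical helper. It also shows how the bias shifts the threshold: the neuron fires when the weighted sum exceeds `-bias`.

```python
def step_neuron(inputs, weights, bias):
    """Return 1 ("fire") only when the weighted sum plus bias is positive.

    Equivalently, the neuron fires when sum(w * x) exceeds the threshold -bias,
    so changing the bias moves the firing threshold.
    """
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0

weights = [1.0, 1.0]
# Same inputs and weights; only the bias (and therefore the threshold) changes.
print(step_neuron([0.6, 0.6], weights, bias=-1.0))  # weighted sum 1.2 > threshold 1.0, prints 1
print(step_neuron([0.6, 0.6], weights, bias=-1.5))  # weighted sum 1.2 < threshold 1.5, prints 0
```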

Importance of Layers in Neural Networks

Neural networks are composed of multiple layers, each responsible for extracting and processing features from input data. Stacking more layers makes a network deeper, which lets later layers build on the features computed by earlier ones and so learn more complex, hierarchical patterns.

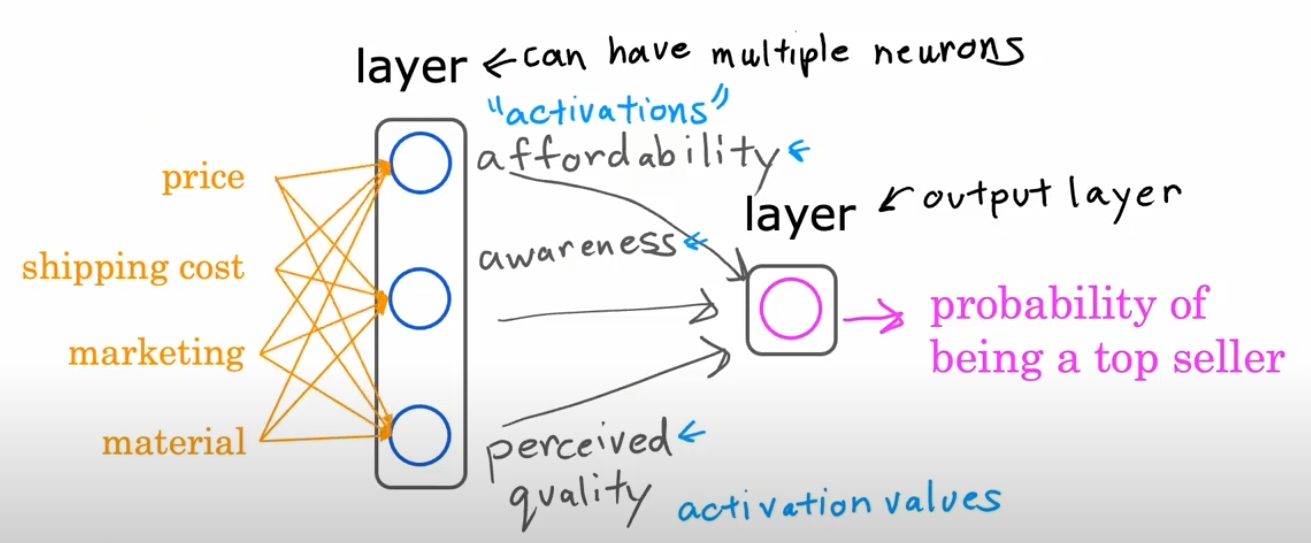

Example: Predicting a T-shirt's Top-Seller Status

Consider an online clothing store that wants to predict whether a new T-shirt will become a top-seller. Several factors influence this outcome, which serve as inputs to our neural network:

- Price ($x_1$)

- Shipping Cost ($x_2$)

- Marketing ($x_3$)

- Material ($x_4$)

These inputs are fed into the first layer of the network, which extracts meaningful features. One possible structure is:

- Hidden Layer 1: A few neurons whose activations represent intermediate concepts such as affordability, awareness, and perceived quality.

- Output Layer: Aggregates the hidden layer's activations to make a final prediction.

The output layer applies a sigmoid activation function:

$$a = \sigma(z) = \frac{1}{1 + e^{-z}}$$

where $z$ is a weighted sum of the previous layer's outputs. If $a \geq 0.5$, we classify the T-shirt as a top-seller; otherwise, it is not.
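A minimal sketch of the T-shirt network (4 inputs, 3 hidden neurons, 1 output) in plain Python, assuming the four inputs have already been scaled to the range 0 to 1. Every weight and bias below is invented for illustration; a trained network would learn them, and the hidden neurons would not necessarily line up with human-readable concepts. `sigmoid` and `dense` are our own helpers, not a library API.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(a_prev, W, b):
    """Sigmoid layer: W holds one row of weights per neuron, b one bias per neuron."""
    return [sigmoid(sum(w * a for w, a in zip(row, a_prev)) + b_j)
            for row, b_j in zip(W, b)]

# Inputs x1..x4: price, shipping cost, marketing, material (hypothetical, pre-scaled).
x = [0.3, 0.2, 0.8, 0.7]

# Hidden layer: 3 neurons we *interpret* as affordability, awareness, perceived quality.
# These weights are made up for illustration, not learned.
W1 = [[-2.0, -1.5, 0.0, 0.0],   # affordability: falls as price and shipping rise
      [0.0, 0.0, 3.0, 0.0],     # awareness: driven by marketing
      [1.0, 0.0, 0.0, 2.0]]     # perceived quality: price and material
b1 = [1.0, -1.0, -1.0]

# Output layer: one neuron combining the three hidden activations.
W2 = [[1.5, 2.0, 1.5]]
b2 = [-2.0]

a1 = dense(x, W1, b1)
a2 = dense(a1, W2, b2)[0]
is_top_seller = a2 >= 0.5   # threshold the sigmoid output at 0.5
print(a1, a2, is_top_seller)
```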

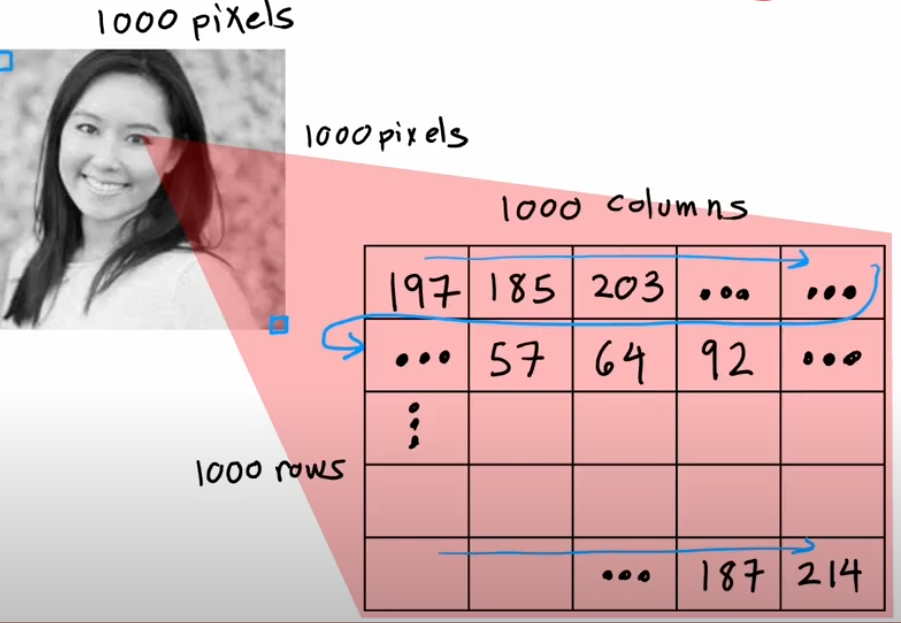

Face Recognition Example: Layer-by-Layer Processing

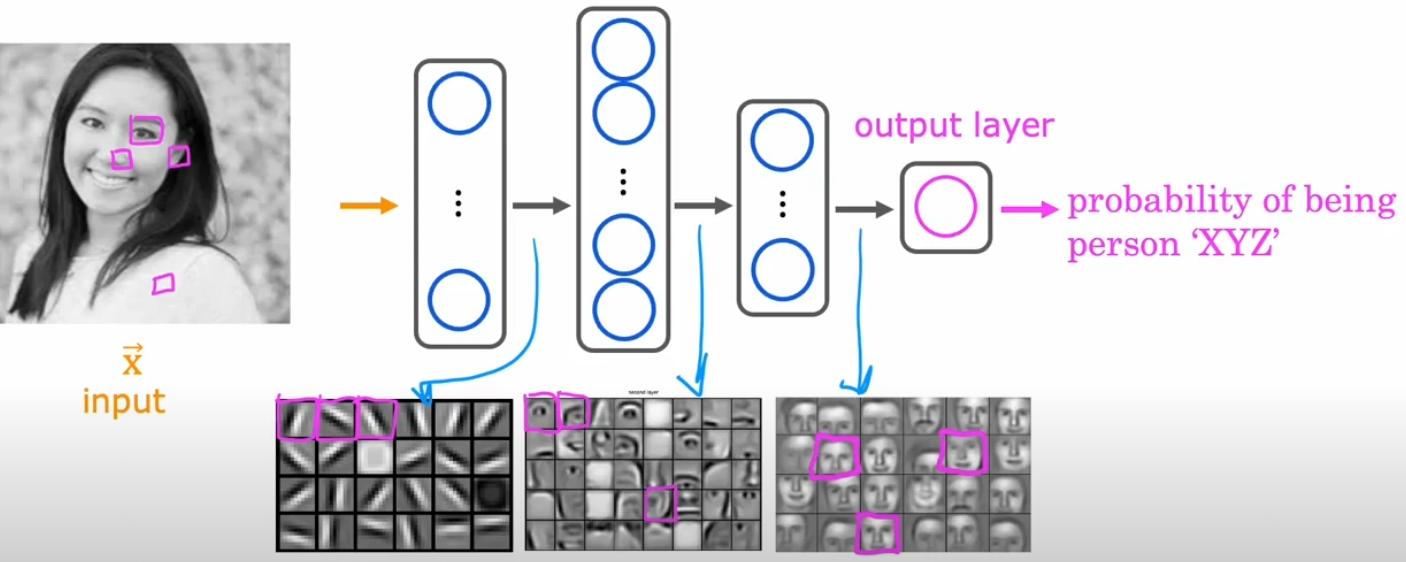

Face recognition is a real-world example where neural networks excel. Let's consider a deep neural network designed for face recognition, breaking down the processing step by step:

- Input Layer: An image of a face is converted into pixel values (e.g., a 100x100 grayscale image would be represented as a vector of 10,000 pixel values).

- First Hidden Layer: Detects basic edges and corners in the image by applying simple filters.

- Second Hidden Layer: Identifies facial features like eyes, noses, and mouths by combining edge and corner information.

- Third Hidden Layer: Recognizes entire facial structures and relationships between features.

- Output Layer: Determines whether the face matches a known identity by producing a probability score.
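The input-layer step (turning a 100x100 grayscale image into a 10,000-value vector) is just a row-major flattening. A small sketch, using a synthetic image as a stand-in for real pixel data:

```python
# A hypothetical 100x100 grayscale image stored as rows of pixel intensities (0-255).
height, width = 100, 100
image = [[(r * width + c) % 256 for c in range(width)] for r in range(height)]

# Row-major flattening: pixel (r, c) ends up at index r * width + c.
x = [pixel for row in image for pixel in row]

# Intensities are often rescaled to [0, 1] before being fed to a network.
x_scaled = [p / 255.0 for p in x]

print(len(x))  # 10000
```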

Mathematical Representation of a Neural Network

To efficiently compute activations in a neural network, we use matrix notation. The general formula for forward propagation at layer $l$ is:

$$z^{[l]} = W^{[l]} a^{[l-1]} + b^{[l]}$$

where:

- $a^{[l-1]}$ is the activation from the previous layer (with $a^{[0]} = x$, the input),

- $W^{[l]}$ is the weight matrix of the current layer,

- $b^{[l]}$ is the bias vector,

- $z^{[l]}$ is the linear combination of inputs before applying the activation function.

The activation function is applied as:

$$a^{[l]} = g(z^{[l]})$$

where $g$ is typically a sigmoid, ReLU, or softmax function.
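The two-step layer computation, $z^{[l]} = W^{[l]} a^{[l-1]} + b^{[l]}$ followed by $a^{[l]} = g(z^{[l]})$, can be sketched with plain Python lists. This assumes $W^{[l]}$ is stored as one row per neuron (shape $n_l \times n_{l-1}$); `layer_forward` and the three activation helpers are illustrative names, not a library API.

```python
import math

def sigmoid(z):
    return [1.0 / (1.0 + math.exp(-v)) for v in z]

def relu(z):
    return [max(0.0, v) for v in z]

def softmax(z):
    # Subtracting the max leaves the result unchanged but avoids overflow in exp.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def layer_forward(a_prev, W, b, g):
    """Return (z, a) with z = W a_prev + b and a = g(z).

    W is a list of rows, one per neuron in this layer; b has one entry per neuron.
    """
    z = [sum(w_jk * a_k for w_jk, a_k in zip(row, a_prev)) + b_j
         for row, b_j in zip(W, b)]
    return z, g(z)

# Hypothetical 2-input, 3-neuron layer with ReLU.
a0 = [1.0, -2.0]
W = [[0.5, 0.0], [1.0, 1.0], [0.0, -1.0]]
b = [0.0, 0.5, -1.0]
z, a = layer_forward(a0, W, b, relu)
print(z, a)  # [0.5, -0.5, 1.0] [0.5, 0.0, 1.0]
```

Swapping `relu` for `sigmoid` or `softmax` changes only the activation step; the linear part is identical for every layer type shown here.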

Example Calculation

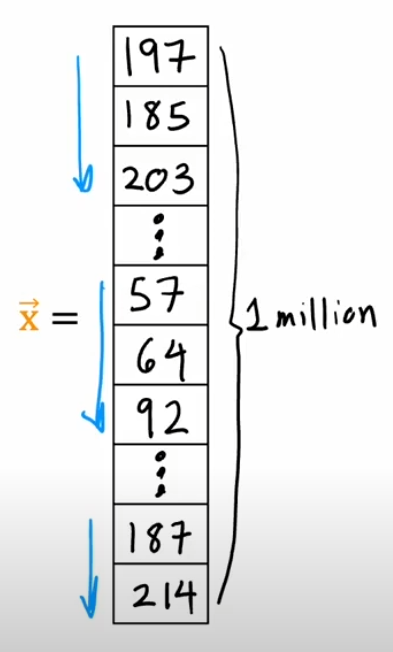

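Suppose we have a single-layer neural network with three inputs and one neuron. We define the inputs as:

$$x = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}$$

The corresponding weight matrix and bias term are given by:

$$W = \begin{bmatrix} w_1 & w_2 & w_3 \end{bmatrix}, \qquad b \in \mathbb{R}$$

The weighted sum ($z$) is calculated as:

$$z = Wx + b = w_1 x_1 + w_2 x_2 + w_3 x_3 + b$$

Applying the sigmoid activation function:

$$a = \sigma(z) = \frac{1}{1 + e^{-z}}$$

If the output is above 0.5, we classify this case as positive.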
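A worked version of the single-neuron calculation in Python. The inputs, weights, and bias are hypothetical values chosen only to illustrate the steps.

```python
import math

x = [1.0, 2.0, 3.0]     # hypothetical inputs x1, x2, x3
w = [0.2, -0.1, 0.4]    # hypothetical weights w1, w2, w3
b = -0.5                # hypothetical bias

z = sum(wi * xi for wi, xi in zip(w, x)) + b    # 0.2 - 0.2 + 1.2 - 0.5
a = 1.0 / (1.0 + math.exp(-z))                  # sigmoid(z)
label = "positive" if a > 0.5 else "negative"
print(round(z, 4), round(a, 4), label)
```

Here $z \approx 0.7$, so $\sigma(z)$ lands above 0.5 and the case is labeled positive; any $z > 0$ gives the same decision, because $\sigma(0) = 0.5$.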

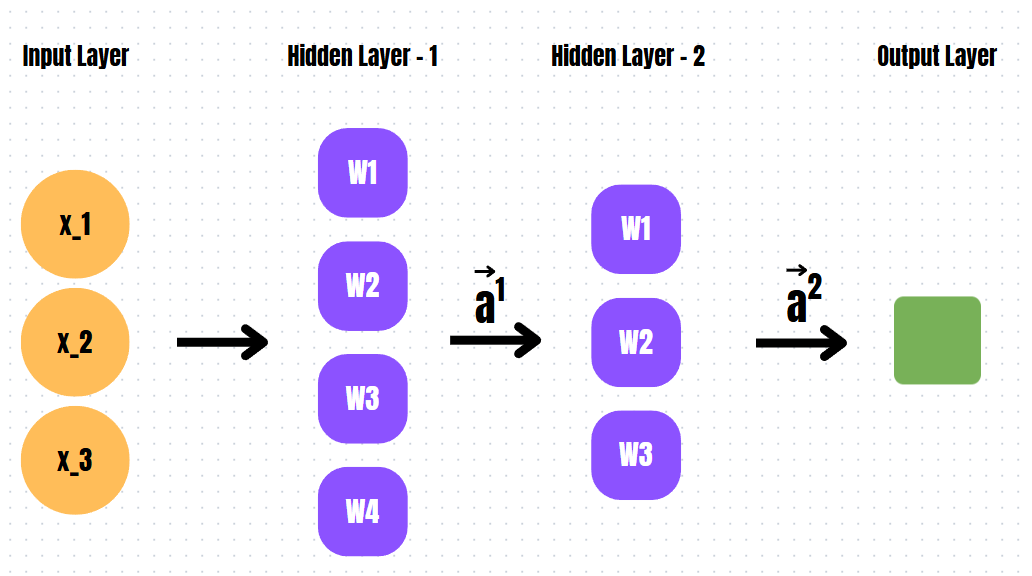

Two Hidden Layer Neural Network Calculation

Now, let's consider a neural network with two hidden layers.

Network Structure

- Input Layer: 3 input values

- First Hidden Layer: 4 neurons

- Second Hidden Layer: 3 neurons

- Output Layer: 1 neuron
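The layer sizes above fix the shape of every weight matrix and bias vector. A quick sketch that derives those shapes and counts the trainable parameters, assuming fully connected layers with one bias per neuron:

```python
# Layer sizes from the structure above: 3 inputs, hidden layers of 4 and 3, 1 output.
layer_sizes = [3, 4, 3, 1]

total = 0
for l in range(1, len(layer_sizes)):
    n_in, n_out = layer_sizes[l - 1], layer_sizes[l]
    # W[l] has one row per neuron in layer l and one column per input from layer l-1.
    n_params = n_out * n_in + n_out  # weights + biases
    print(f"W[{l}]: {n_out}x{n_in}, b[{l}]: {n_out}, parameters: {n_params}")
    total += n_params

print("total parameters:", total)  # 35
```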

First Hidden Layer Calculation

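Given input vector:

$$x = a^{[0]} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}$$

Weight matrix for the first hidden layer (one row per neuron):

$$W^{[1]} \in \mathbb{R}^{4 \times 3}$$

Bias vector:

$$b^{[1]} \in \mathbb{R}^{4}$$

Computing the weighted sum:

$$z^{[1]} = W^{[1]} x + b^{[1]}$$

Applying the sigmoid activation function:

$$a^{[1]} = \sigma(z^{[1]})$$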

Second Hidden Layer Calculation

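Weight matrix:

$$W^{[2]} \in \mathbb{R}^{3 \times 4}$$

Bias vector:

$$b^{[2]} \in \mathbb{R}^{3}$$

Computing the weighted sum:

$$z^{[2]} = W^{[2]} a^{[1]} + b^{[2]}$$

Applying the sigmoid activation function:

$$a^{[2]} = \sigma(z^{[2]})$$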

Output Layer Calculation

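Weight matrix:

$$W^{[3]} \in \mathbb{R}^{1 \times 3}$$

Bias:

$$b^{[3]} \in \mathbb{R}$$

Computing the final weighted sum:

$$z^{[3]} = W^{[3]} a^{[2]} + b^{[3]}$$

Applying the sigmoid activation function:

$$a^{[3]} = \sigma(z^{[3]})$$

If $a^{[3]} \geq 0.5$, the output is classified as positive.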
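The full 3 → 4 → 3 → 1 forward pass can be sketched end to end. The weights are hypothetical (small random values from a seeded generator, zero biases) since no trained parameters are given; the point is the chaining of layers, where each layer's output becomes the next layer's input. `sigmoid_layer` and `random_layer` are illustrative helpers.

```python
import math
import random

def sigmoid_layer(a_prev, W, b):
    """a = sigmoid(W a_prev + b) for one fully connected layer."""
    return [1.0 / (1.0 + math.exp(-(sum(w * a for w, a in zip(row, a_prev)) + b_j)))
            for row, b_j in zip(W, b)]

def random_layer(n_in, n_out, rng):
    """Hypothetical parameters: small random weights, zero biases."""
    W = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    b = [0.0] * n_out
    return W, b

rng = random.Random(0)             # fixed seed so the run is reproducible
W1, b1 = random_layer(3, 4, rng)   # first hidden layer: 4 neurons
W2, b2 = random_layer(4, 3, rng)   # second hidden layer: 3 neurons
W3, b3 = random_layer(3, 1, rng)   # output layer: 1 neuron

x = [0.5, -1.2, 2.0]               # hypothetical input vector
a1 = sigmoid_layer(x, W1, b1)
a2 = sigmoid_layer(a1, W2, b2)
a3 = sigmoid_layer(a2, W3, b3)[0]
print("positive" if a3 >= 0.5 else "negative", a3)
```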

Conclusion

- The first hidden layer extracts basic features.

- The second hidden layer learns more abstract representations.

- The output layer makes the final classification decision.

This demonstrates how a multi-layer neural network processes information in a hierarchical manner.

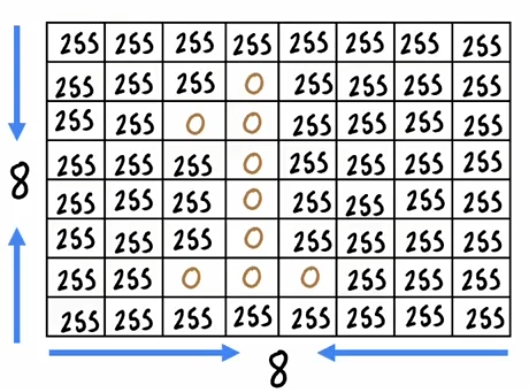

Handwritten Digit Recognition Using Two Hidden Layers

A classic application of neural networks is handwritten digit recognition. Let's consider recognizing the digit '1' from an 8x8 pixel grid using a simple neural network with two hidden layers followed by an output layer.

First Layer: Feature Extraction

- The 8x8 image is flattened into a 64-dimensional input vector.

- This vector is processed by neurons in the first hidden layer.

- The neurons identify edges, curves, and simple shapes using learned weights.

- Mathematically, the output of the first layer can be represented as:

$$a^{[1]} = g(W^{[1]} x + b^{[1]})$$
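A sketch of the first-layer step for an 8x8 digit: flatten the grid into 64 values, then compute $a^{[1]} = g(W^{[1]} x + b^{[1]})$ with $g$ taken to be the sigmoid. The image, the hidden-layer width of 25, and the untrained random weights are all hypothetical choices for illustration.

```python
import math
import random

# Hypothetical 8x8 image of a '1': a vertical stroke of bright pixels in column 3.
image = [[1.0 if c == 3 else 0.0 for c in range(8)] for r in range(8)]
x = [p for row in image for p in row]           # flatten row-major into 64 values

n_hidden = 25                                    # hypothetical layer width
rng = random.Random(1)
W1 = [[rng.gauss(0.0, 0.1) for _ in range(64)] for _ in range(n_hidden)]  # untrained weights
b1 = [0.0] * n_hidden

# a[1] = g(W[1] x + b[1]) with g = sigmoid
a1 = [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + bj)))
      for row, bj in zip(W1, b1)]
print(len(x), len(a1))  # 64 25
```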

Second Layer: Pattern Recognition

- The first layer's output is passed to a second hidden layer.

- This layer detects digit-specific features, such as the vertical stroke characteristic of '1'.

- The transformation at this stage follows:

$$a^{[2]} = g(W^{[2]} a^{[1]} + b^{[2]})$$

Output Layer: Classification

- The final layer has 10 neurons, each representing a digit from 0 to 9.

- The neuron with the highest activation determines the predicted digit:

$$\hat{y} = \arg\max_{j \in \{0, \dots, 9\}} a^{[3]}_j$$
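The final decision is an argmax over the 10 output activations. A short sketch, assuming the output layer uses a softmax (a common choice for multi-class outputs, not stated in the text) and using hypothetical output scores:

```python
import math

def softmax(z):
    m = max(z)                          # shift by the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

# Hypothetical output-layer scores z[3] for digits 0..9 (index = digit).
z3 = [0.1, 4.0, 0.3, -1.0, 0.0, 0.2, -0.5, 1.5, 0.4, -0.2]
a3 = softmax(z3)
predicted_digit = max(range(10), key=lambda j: a3[j])   # argmax over the 10 activations
print(predicted_digit)  # 1
```

Because softmax preserves the ordering of its inputs, the argmax of `a3` is the same as the argmax of `z3`; the softmax matters when the activations are read as probabilities.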

This structured approach demonstrates how neural networks model real-world problems, from binary classification to deep learning applications like face and handwriting recognition.